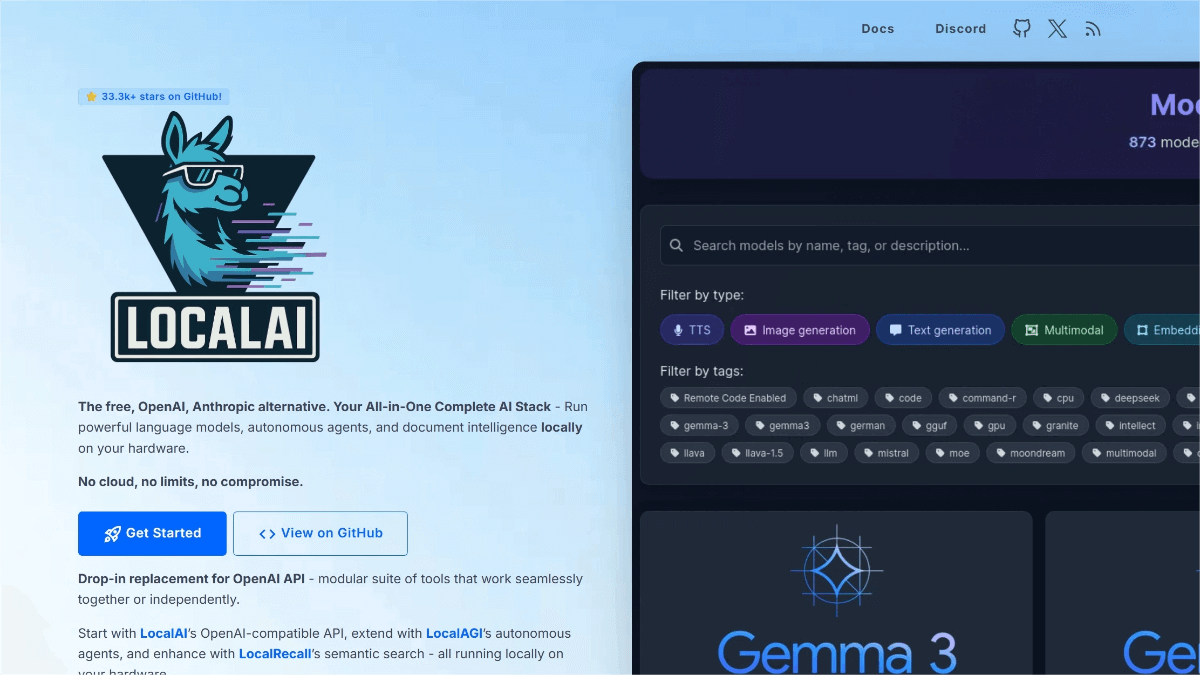

What is LocalAI?

LocalAI is an open-source framework for running AI inference locally, on your own hardware. It supports multiple modalities, including text generation, image generation, and speech processing, and exposes an API compatible with OpenAI's. With a strong focus on privacy, all data processing happens locally and nothing is uploaded to the cloud. LocalAI runs on a wide range of hardware, from CPU-only machines to systems with NVIDIA, AMD, or Intel GPUs, and accepts several model formats, such as GGML, GGUF, and GPTQ, giving users flexible deployment options. The wider LocalAI stack also includes LocalAGI, for building autonomous AI agents, and LocalRecall, for semantic search and memory. This breadth makes it a good fit for projects that need several kinds of AI functionality in one place.

Key Features of LocalAI

Multimodal Support:

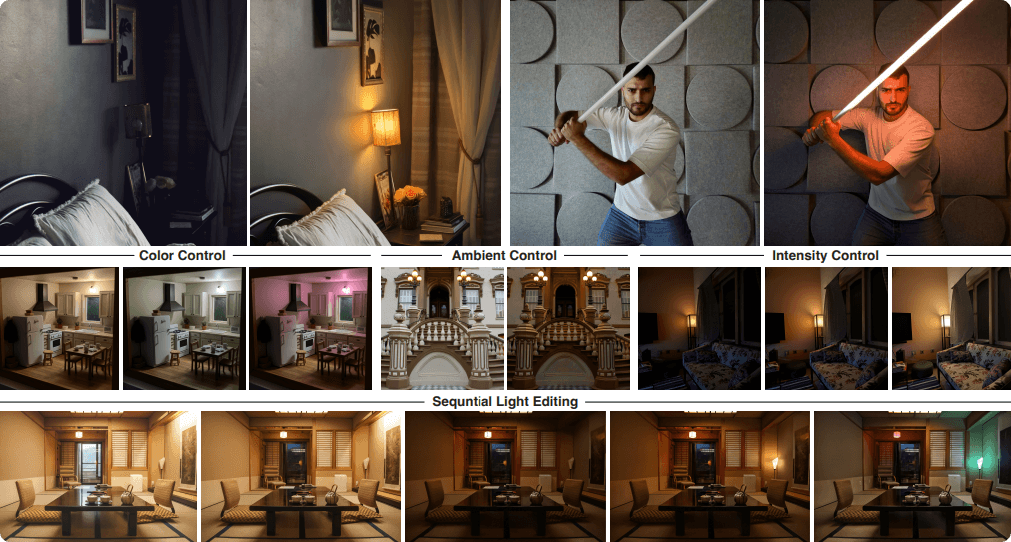

LocalAI supports text generation, image generation, speech-to-text, text-to-speech, and more. For example, its image-generation endpoint can turn a text prompt into an illustration for a report or presentation.
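As a rough illustration of what calling one of these interfaces looks like, the sketch below builds (but does not send) an OpenAI-style image-generation request using only Python's standard library. It assumes LocalAI is listening on `localhost:8080` and exposes the OpenAI-compatible `/v1/images/generations` route; the model name `stablediffusion` is a placeholder for whatever image model you have installed.

```python
import json
import urllib.request

# Assumption: a LocalAI instance on this machine, port 8080.
BASE_URL = "http://localhost:8080/v1"


def build_image_request(prompt: str, size: str = "256x256",
                        model: str = "stablediffusion") -> urllib.request.Request:
    """Build an OpenAI-style image-generation request without sending it.

    The model name is a placeholder; use the image model you installed.
    """
    payload = {"model": model, "prompt": prompt, "size": size}
    return urllib.request.Request(
        f"{BASE_URL}/images/generations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_image_request("a watercolor illustration of a lighthouse")
print(req.get_method(), req.full_url)
print(json.loads(req.data))

# To actually send it to a running instance:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Keeping request construction separate from sending makes the payload easy to inspect before pointing it at a live server.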

OpenAI API Compatibility:

LocalAI offers REST endpoints compatible with the OpenAI API, so it can stand in for OpenAI services in a local environment. Existing applications written against the OpenAI API can usually be self-hosted by changing only the base URL and model name, with no other code modifications.
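To make the compatibility concrete, here is a minimal standard-library sketch in which the same request-building code targets either OpenAI or a local instance; only the base URL, model name, and API key differ. The local URL `http://localhost:8080/v1`, the model name `my-local-model`, the `YOUR_API_KEY` placeholder, and the sample response are assumptions for illustration, and nothing is sent over the network.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # OpenAI requires a key; a local instance may not
        headers["Authorization"] = f"Bearer {api_key}"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Return the assistant's text from an OpenAI-format chat completion."""
    return response_body["choices"][0]["message"]["content"]


# Identical calling code; only the configuration differs.
cloud_req = build_chat_request("https://api.openai.com/v1", "gpt-4o-mini",
                               "Hello", api_key="YOUR_API_KEY")
local_req = build_chat_request("http://localhost:8080/v1", "my-local-model", "Hello")

# Illustrative response shape only, not real model output.
sample = {"choices": [{"index": 0,
                       "message": {"role": "assistant", "content": "Hi there!"}}]}
print(local_req.full_url)
print(extract_reply(sample))
```

Because the response format is the same on both sides, parsing code such as `extract_reply` also stays unchanged.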

Local Inference & Privacy Protection:

A major advantage of LocalAI is that all inference happens locally. Sensitive data never leaves the device, ensuring strong privacy guarantees.

Hardware Compatibility:

LocalAI runs on diverse hardware configurations, including CPU-only environments, and supports NVIDIA, AMD, and Intel GPUs.

WebUI Support:

A built-in web interface lets users manage models, work with files, and chat with models interactively.

Model Management & Loading:

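LocalAI runs its inference backends as separate processes and loads and manages models through a gRPC interface. Frequently used models can be preloaded into memory, so the first request to them does not have to wait for the model to load, which substantially reduces first-token latency.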
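The effect of preloading can be illustrated with a toy cache. This is not LocalAI's code: `ModelCache` and its `loader` callback are hypothetical stand-ins for reading weights from disk and starting a backend, and a simple counter takes the place of real load time.

```python
from typing import Callable, Dict, Iterable


class ModelCache:
    """Toy model cache contrasting lazy loading with preloading.

    Hypothetical: `loader` stands in for whatever actually loads weights.
    """

    def __init__(self, loader: Callable[[str], object]):
        self._loader = loader
        self._loaded: Dict[str, object] = {}
        self.load_count = 0  # how many (expensive) loads have happened

    def preload(self, names: Iterable[str]) -> None:
        # Pay the load cost up front, e.g. at server start-up.
        for name in names:
            self._ensure_loaded(name)

    def get(self, name: str) -> object:
        # Lazy path: the first request for an unloaded model pays the load cost.
        return self._ensure_loaded(name)

    def _ensure_loaded(self, name: str) -> object:
        if name not in self._loaded:
            self._loaded[name] = self._loader(name)
            self.load_count += 1
        return self._loaded[name]


cache = ModelCache(loader=lambda name: f"<weights of {name}>")
cache.preload(["chat-model"])
before = cache.load_count        # 1: loaded at start-up
cache.get("chat-model")          # served from memory, no new load
cache.get("embedding-model")     # not preloaded, so loaded on demand
print(before, cache.load_count)  # prints "1 2"
```

The first user request for `chat-model` finds it already in memory, while the request for `embedding-model` has to wait for a load.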

Tool Calling & Function Integration:

LocalAI supports function (tool) calling and provides an embeddings endpoint, allowing developers to integrate AI capabilities into their existing systems.
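Function calling in the OpenAI format works in three steps: the request declares callable functions as JSON Schema in a `tools` field, the model answers with `tool_calls`, and the application runs them and sends the results back as `tool` messages. The sketch below covers the application side only; `get_weather`, the model name, and the sample assistant message are hypothetical, and no request is sent.

```python
import json
from typing import Any, Callable, Dict, List


def get_weather(city: str) -> str:
    # Hypothetical local function the model is allowed to call.
    return f"Sunny in {city}"


# OpenAI-style tool declaration, sent in the request's "tools" field.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_calls(message: dict) -> List[dict]:
    """Execute each tool call in an assistant message and build tool replies."""
    replies = []
    for call in message.get("tool_calls", []):
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": fn(**args)})
    return replies


request_body = {
    "model": "my-local-model",  # placeholder model name
    "messages": [{"role": "user", "content": "What's the weather in Paris?"}],
    "tools": TOOLS,
}

# Illustrative assistant message in the OpenAI tool-call format, not real output.
assistant_msg = {
    "role": "assistant",
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"},
    }],
}
replies = run_tool_calls(assistant_msg)
print(replies)
```

The `tool` replies would then be appended to `messages` and sent in a follow-up request so the model can compose its final answer.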

Technical Principles of LocalAI

Open-Source Library Integration:

LocalAI integrates with multiple open-source libraries—for example, llama.cpp and gpt4all.cpp for text generation, whisper.cpp for audio transcription, and Stable Diffusion for image generation.

OpenAI API Specification Compatibility:

Because LocalAI follows the OpenAI API specification, existing OpenAI client libraries and integrations work with it largely unchanged. Switching from OpenAI to LocalAI typically means changing configuration (the endpoint URL and model name) rather than application code.

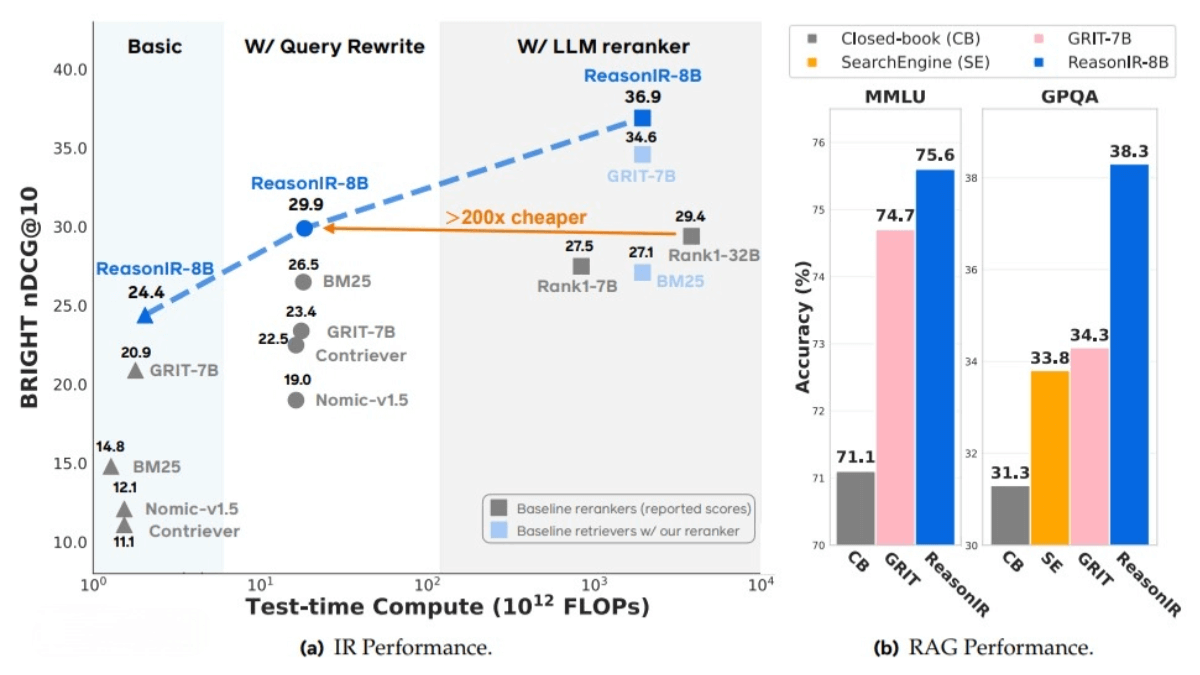

Multi-Hardware Support:

LocalAI supports CPUs as well as NVIDIA, AMD, and Intel GPUs. With quantized formats such as GGML/GGUF q4_0, which stores weights as 4-bit values with one scale per block of 32, it can run large models efficiently on consumer-grade hardware.
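To see why 4-bit quantization saves so much memory, here is a simplified Python version of the q4_0 idea: weights are grouped into blocks of 32, each block stores one scale `d` plus 32 four-bit codes, and values are reconstructed as `(q - 8) * d`. It is modeled on ggml's reference q4_0 quantizer but simplified (the scale stays a Python float instead of fp16) and uses made-up weights, so treat it as an illustration rather than a bit-exact implementation.

```python
import math
from typing import List, Tuple

BLOCK = 32  # q4_0 groups weights into blocks of 32


def quantize_block(xs: List[float]) -> Tuple[float, List[int]]:
    """Quantize one block to a scale plus 4-bit codes in the range 0..15."""
    m = max(xs, key=abs)          # signed value with the largest magnitude
    d = m / -8.0                  # scale chosen so that m maps to code 0
    inv = 1.0 / d if d else 0.0
    # x * inv lies in [-8, 8]; shift by 8, round to nearest, clip to 15.
    qs = [min(15, int(x * inv + 8.5)) for x in xs]
    return d, qs


def dequantize_block(d: float, qs: List[int]) -> List[float]:
    """Reconstruct approximate weights from the scale and codes."""
    return [(q - 8) * d for q in qs]


weights = [math.sin(0.7 * i) for i in range(BLOCK)]  # toy data
d, qs = quantize_block(weights)
restored = dequantize_block(d, qs)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

# Storage per block: 32 four-bit codes plus one 16-bit scale.
bits_per_weight = (BLOCK * 4 + 16) / BLOCK
print(f"scale={d:.4f} max_err={max_err:.4f} bits/weight={bits_per_weight}")
```

At 4.5 bits per weight instead of 16 for fp16, a model's weights shrink to roughly 28% of their half-precision size, at the cost of a per-weight error bounded by the block's scale.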

Distributed Inference Architecture:

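LocalAI implements a P2P-based distributed inference system that spreads work across multiple nodes, either by routing each request to one node in the cluster (federated mode) or by splitting a model's weights across several workers (worker mode). Node discovery uses mDNS and DHT, and communication is encrypted through the libp2p stack.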
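When a single model is divided among several machines, each node has to hold part of it. One plausible way to divide the work, shown here purely as an illustration and not as LocalAI's actual allocation logic, is to give each node a share of the model's layers proportional to its free memory. The node names and memory figures are made up.

```python
from typing import Dict


def split_layers(n_layers: int, free_mem_gb: Dict[str, float]) -> Dict[str, int]:
    """Assign a number of layers to each node in proportion to free memory.

    Uses largest-remainder rounding so the counts always sum to n_layers.
    """
    total = sum(free_mem_gb.values())
    if total <= 0:
        raise ValueError("need at least one node with free memory")
    exact = {node: n_layers * mem / total for node, mem in free_mem_gb.items()}
    counts = {node: int(share) for node, share in exact.items()}
    leftover = n_layers - sum(counts.values())
    # Hand the remaining layers to the nodes with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda n: exact[n] - counts[n], reverse=True)
    for node in by_remainder[:leftover]:
        counts[node] += 1
    return counts


plan = split_layers(32, {"node-a": 24.0, "node-b": 8.0, "node-c": 16.0})
print(plan)  # {'node-a': 16, 'node-b': 5, 'node-c': 11}
```

Largest-remainder rounding avoids the off-by-one problem of rounding each share independently, which can assign one layer too many or too few.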

Dynamic Task Scheduling:

In distributed inference, LocalAI uses a decentralized task-scheduling mechanism that allocates tasks based on CPU/memory usage and network latency. Tasks are sent to the least-loaded nodes, improving availability and fault tolerance.
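The least-loaded selection described above can be sketched as a scoring function. The weights (0.5, 0.3, 0.2), the 200 ms latency cap, and the `NodeStats` fields are assumptions for illustration, not values taken from LocalAI. Unreachable nodes (modeled as infinite latency) are skipped so that a failed node never receives work.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class NodeStats:
    name: str
    cpu_util: float    # 0.0 to 1.0
    mem_util: float    # 0.0 to 1.0
    latency_ms: float  # math.inf if the node is unreachable


def load_score(n: NodeStats, max_latency_ms: float = 200.0) -> float:
    """Lower is better. The weights are illustrative assumptions."""
    latency = min(n.latency_ms / max_latency_ms, 1.0)  # normalize to 0..1
    return 0.5 * n.cpu_util + 0.3 * n.mem_util + 0.2 * latency


def pick_node(nodes: List[NodeStats]) -> NodeStats:
    """Route the next task to the reachable node with the lowest load score."""
    alive = [n for n in nodes if not math.isinf(n.latency_ms)]
    if not alive:
        raise RuntimeError("no reachable nodes")
    return min(alive, key=load_score)


nodes = [
    NodeStats("node-a", cpu_util=0.9, mem_util=0.5, latency_ms=10.0),
    NodeStats("node-b", cpu_util=0.3, mem_util=0.6, latency_ms=40.0),
    NodeStats("node-c", cpu_util=0.2, mem_util=0.2, latency_ms=math.inf),  # down
]
chosen = pick_node(nodes)
print(chosen.name)  # prints "node-b"
```

Note that `node-c` would have the lowest score if it were not filtered out first; excluding unreachable nodes before ranking is what provides the fault tolerance.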

Project Links

Official Website: https://localai.io/

GitHub Repository: https://github.com/mudler/LocalAI

Use Cases of LocalAI

Enterprise Intranet Environments:

Build private AI assistants for internal document processing, code review, and knowledge Q&A—while keeping all data within the company’s network.

Personal Development Setup:

Use LocalAI as a local AI coding assistant that works without an internet connection and avoids round-trips to a remote API.

Offline AI Applications:

Enable AI capabilities in environments without internet access—for research, programming, and content creation.

Autonomous AI Agents:

Build and deploy local autonomous agents for task automation and complex workflows without relying on the cloud.

Semantic Search & Memory:

Create local knowledge bases with semantic search to enhance contextual awareness and persistent memory.
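At its core, semantic search ranks documents by how similar their embedding vectors are to the query's embedding. The sketch below uses cosine similarity over tiny hand-written vectors; in practice the vectors would come from an embedding model (for example through LocalAI's OpenAI-compatible `/v1/embeddings` endpoint), and the documents and numbers here are made up.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def search(query_vec: List[float], index: Dict[str, List[float]],
           top_k: int = 2) -> List[Tuple[str, float]]:
    """Return the top_k documents ranked by similarity to the query."""
    scored = [(doc, cosine(query_vec, vec)) for doc, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
index = {
    "VPN setup guide": [0.9, 0.1, 0.0],
    "Expense policy": [0.0, 0.2, 0.9],
    "Wi-Fi troubleshooting": [0.7, 0.3, 0.1],
}
query = [0.8, 0.2, 0.0]  # stands in for the embedding of "how do I connect remotely?"
results = search(query, index)
print(results)
```

A real knowledge base would store many more vectors and typically use an approximate nearest-neighbor index, but the ranking principle is the same.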

Multimodal Content Generation:

Generate text, images, and audio locally—ideal for creative projects, marketing, and multimedia production.