SenseNova-SI — SenseTime’s open-source Spatial Intelligence large model

What is SenseNova-SI?

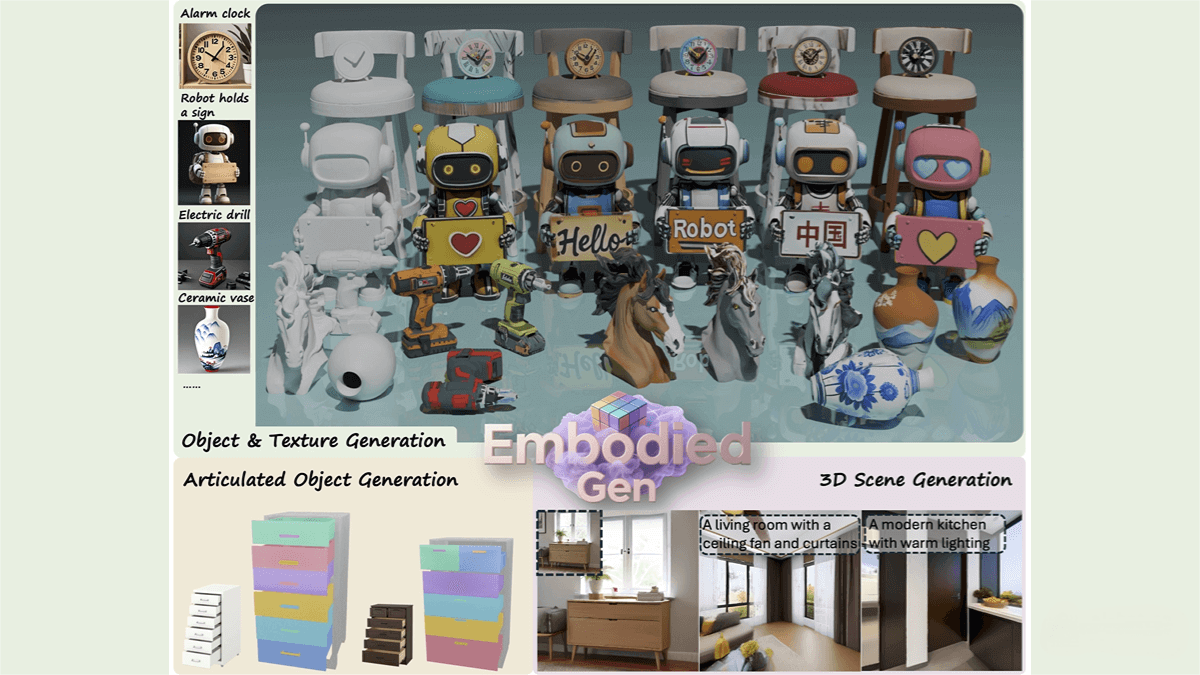

SenseNova-SI is SenseTime’s open-source large model for spatial intelligence, designed specifically to strengthen spatial understanding. Trained on large-scale, high-quality spatial datasets, it improves significantly on core dimensions such as spatial measurement, relational understanding, and viewpoint transformation.

On multiple spatial intelligence benchmarks, SenseNova-SI is reported to outperform open-source models of similar size and even to surpass top-tier closed-source models such as GPT-5. The project provides detailed installation and usage guides to help developers get started quickly. It aims to advance embodied AI and world-model research and to lay the foundation for AI systems that can understand the 3D world.

Key Features of SenseNova-SI

- Spatial measurement and estimation: Accurately estimates object dimensions, distances, and other quantitative spatial attributes.
- Spatial relationship understanding: Understands relative positions, orientations, and spatial layouts between objects.
- Viewpoint transformation: Handles changes in scene appearance from different viewpoints and infers the effects of viewpoint shifts.
- Spatial reconstruction and deformation: Understands 3D structures of objects and maintains spatial awareness after deformation or reconstruction.
- Spatial reasoning: Performs logical reasoning based on spatial information, such as predicting object movement or layout changes.
- Multimodal fusion: Integrates image, text, and other modalities to better comprehend complex spatial scenes.
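To make the viewpoint transformation item concrete, here is a minimal geometric sketch of the kind of question it covers: an object is straight ahead, the observer turns left, and we ask where the object is now. This only illustrates the task. It is not how SenseNova-SI works internally, and the function names `position_after_turn` and `describe` as well as the coordinate convention are assumptions made for this example.

```python
import math

def position_after_turn(point, turn_left_deg):
    """Express a top-down 2D point in the observer's frame after the observer turns.

    Frame convention (an assumption for this sketch): x points to the
    observer's right, y points straight ahead. A positive angle is a
    left (counterclockwise) turn.
    """
    x, y = point
    theta = math.radians(turn_left_deg)
    # Rotating the observer by +theta is equivalent to rotating the world by -theta.
    new_x = math.cos(theta) * x + math.sin(theta) * y
    new_y = -math.sin(theta) * x + math.cos(theta) * y
    return new_x, new_y

def describe(point):
    """Name the dominant direction of a point relative to the observer."""
    x, y = point
    if abs(x) > abs(y):
        return "right" if x > 0 else "left"
    return "front" if y > 0 else "behind"

# An object 2 m straight ahead; the observer then turns 90 degrees to the left.
before = (0.0, 2.0)
after = position_after_turn(before, 90)
print(describe(before), "->", describe(after))  # prints "front -> right"
```

A spatial intelligence model is expected to answer this kind of question from images and text alone, without being handed explicit coordinates.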

Technical Principles of SenseNova-SI

- Scale effect: By training on massive amounts of high-quality spatial data, SenseTime validates the “scale effect”: increasing data volume leads to significant improvements in spatial cognition. This is the core driver behind SenseNova-SI’s performance jump.
- Systematic training methodology: SenseTime defines a classification framework for spatial capabilities and expands the dataset based on it, applying a systematic training approach that improves all dimensions of spatial intelligence in a consistent manner.
- Multimodal fusion architecture: Built on architectures such as InternVL, SenseNova-SI effectively fuses visual and textual information to enhance understanding of complex scenes.
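The idea of expanding a dataset according to a capability taxonomy can be sketched roughly as follows. This is a hypothetical illustration, not SenseTime's actual pipeline: the category labels are adapted from the feature list in this article, and the `coverage_gaps` helper, the sample records, and the per-category target are all assumptions.

```python
from collections import Counter

# Capability categories adapted from this article's feature list.
# The exact label strings are hypothetical.
CAPABILITIES = [
    "measurement",
    "relationship",
    "viewpoint_transformation",
    "reconstruction_deformation",
    "reasoning",
]

def coverage_gaps(samples, target_per_capability):
    """Return how many more samples each capability needs to reach the target."""
    counts = Counter(sample["capability"] for sample in samples)
    return {
        capability: max(0, target_per_capability - counts.get(capability, 0))
        for capability in CAPABILITIES
    }

# A tiny made-up dataset, labeled by capability.
samples = [
    {"question": "How far is the chair from the table?", "capability": "measurement"},
    {"question": "How tall is the shelf?", "capability": "measurement"},
    {"question": "Is the lamp left of the sofa?", "capability": "relationship"},
    {"question": "What is visible from the doorway?", "capability": "viewpoint_transformation"},
]

gaps = coverage_gaps(samples, target_per_capability=2)
for capability, missing in gaps.items():
    print(f"{capability}: needs {missing} more sample(s)")
```

Auditing coverage per category in this way shows which capabilities are underrepresented, which is one plausible way to decide where additional data should be collected.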

Project Links

- GitHub repository: https://github.com/OpenSenseNova/SenseNova-SI
- Hugging Face model collection: https://huggingface.co/collections/sensenova/sensenova-si

Application Scenarios of SenseNova-SI

- Autonomous driving: With accurate spatial measurement and viewpoint transformation, vehicles can better interpret road environments, predict object movement, and improve safety and reliability.
- Robotic navigation and manipulation: Spatial relationship understanding and reasoning enable robots to navigate complex environments and manipulate objects with greater precision.
- Virtual reality and augmented reality: Enhances the realism of virtual spaces and enables more natural user interaction in VR/AR environments.
- Intelligent security systems: Analyzes surveillance footage with spatial intelligence to quickly detect anomalies or changes in object positions, improving the efficiency and accuracy of security monitoring.
- Architecture and spatial planning: Assists designers with 3D spatial layout planning and rapidly generates or optimizes design schemes using spatial reconstruction capabilities.
