DeepEyesV2 — the multimodal agent model open-sourced by Xiaohongshu (RED)

What is DeepEyesV2?

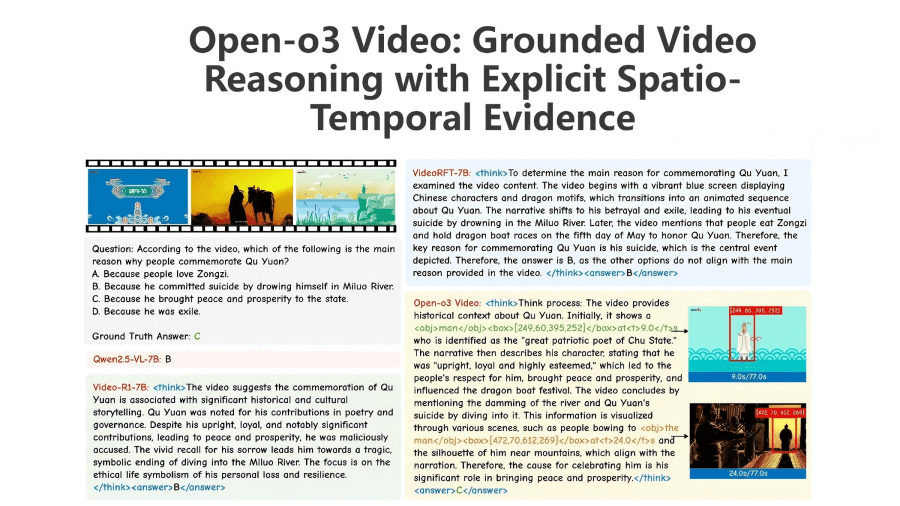

DeepEyesV2 is a multimodal agent model developed by the Xiaohongshu (RED) team. It understands both images and text, proactively calls external tools such as code execution environments and web search engines, and integrates the tool outputs into its reasoning to solve complex real-world problems. These tool-use and multimodal reasoning capabilities come from a two-stage training framework. In the first stage, supervised fine-tuning teaches the model the basics of tool use. In the second stage, reinforcement learning improves the efficiency and generalization of its tool calling. DeepEyesV2 demonstrates strong multi-skill coordination on the newly proposed RealX-Bench benchmark.

Key Features of DeepEyesV2

- Multimodal understanding: Processes both text and image inputs to interpret complex multimodal content.
- Proactive tool calling: Invokes external tools, such as code execution or web search, when it needs additional information or has to perform a complex operation.
- Dynamic reasoning and decision-making: Integrates tool outputs into an iterative reasoning process to solve problems step by step.
- Task adaptiveness: Selects the most suitable tools based on task type (perception, reasoning, etc.), improving efficiency and accuracy.
- Complex task solving: Combines tool use and iterative reasoning to handle tasks that require coordinated multimodal skills, such as perception-search-reasoning pipelines.
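The iterative "call a tool, read the result, keep reasoning" behavior described above can be illustrated with a small loop. This is only a sketch under stated assumptions: `Step`, `run_agent`, `toy_policy`, and `toy_tools` are hypothetical names invented for illustration, the policy is a hard-coded stand-in for the model, and nothing here reflects the actual DeepEyesV2 interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One model turn: either a tool request or a final answer (hypothetical)."""
    tool: Optional[str] = None     # name of the tool to call, if any
    tool_input: str = ""           # argument passed to the tool
    answer: Optional[str] = None   # set when the model decides it is done


def run_agent(
    policy: Callable[[List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_turns: int = 5,
) -> str:
    """Alternate between model turns and tool calls until an answer appears."""
    context = [f"question: {question}"]
    for _ in range(max_turns):
        step = policy(context)
        if step.answer is not None:
            return step.answer
        if step.tool not in tools:
            # Report the problem back to the policy instead of crashing.
            context.append(f"error: unknown tool {step.tool!r}")
            continue
        observation = tools[step.tool](step.tool_input)
        # Feed the tool output back so the next turn can reason over it.
        context.append(f"{step.tool}({step.tool_input}) -> {observation}")
    return "no answer within turn budget"


# Hypothetical stand-ins for the model and for a web search tool.
def toy_policy(context: List[str]) -> Step:
    if len(context) == 1:  # nothing observed yet, so ask for a search
        return Step(tool="search", tool_input="flower species in photo")
    return Step(answer=f"based on: {context[-1]}")


toy_tools = {"search": lambda query: f"stub result for '{query}'"}

result = run_agent(toy_policy, toy_tools, "What flower is this?")
print(result)
```

The turn budget (`max_turns`) is a design choice of this sketch, not something the source specifies; it simply keeps a misbehaving policy from looping forever.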

Technical Principles Behind DeepEyesV2

Cold Start Stage

- Supervised Fine-tuning (SFT): The model is fine-tuned on large-scale datasets containing tool-use trajectories (e.g., perception tasks, reasoning tasks, long chain-of-thought tasks), giving it a foundational understanding of how to use tools.
- Data Design: Training data covers diverse task types so that the model learns tool strategies applicable to different scenarios.
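To make "tool-use trajectory" concrete, here is one way such a training record could be represented and serialized. The schema is an assumption made purely for illustration: the field names (`task_type`, `image_path`, `turns`), the role labels, and the helper functions are hypothetical, and the actual DeepEyesV2 data format may differ.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class Turn:
    role: str      # assumed labels: "user", "assistant", or "tool"
    content: str


@dataclass
class Trajectory:
    task_type: str     # e.g. "perception", "reasoning", "long_cot"
    image_path: str
    turns: List[Turn]


def to_jsonl_line(traj: Trajectory) -> str:
    """Serialize one trajectory as a single JSON line."""
    return json.dumps(asdict(traj), ensure_ascii=False)


def task_mix(trajs: List[Trajectory]) -> Dict[str, int]:
    """Count trajectories per task type to check how diverse the mix is."""
    return Counter(t.task_type for t in trajs)


# A made-up example record, not taken from the real dataset.
example = Trajectory(
    task_type="perception",
    image_path="image-1.png",
    turns=[
        Turn("user", "What is written on the small sign?"),
        Turn("assistant", "I will crop the sign region with code."),
        Turn("tool", "cropped image returned"),
        Turn("assistant", "The sign reads 'EXIT'."),
    ],
)

print(to_jsonl_line(example))
print(task_mix([example]))
```

A helper like `task_mix` is one simple way to check that perception, reasoning, and long chain-of-thought samples are all represented before fine-tuning.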

Reinforcement Learning Stage

- Policy Optimization: Building on the cold start stage, reinforcement learning optimizes the model’s tool-calling policy to improve efficiency and generalization.
- Objectives: Reduce unnecessary tool calls while enabling creative tool combinations in unseen complex scenarios, boosting flexibility and adaptiveness.
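One way to check whether tool calling has become more efficient is to compare simple statistics over evaluation episodes before and after reinforcement learning. The sketch below is an assumption, not the authors' evaluation code: the episode fields (`num_tool_calls`, `correct`) and the function name are hypothetical, and the sample episodes are made up.

```python
from typing import Dict, List


def tool_call_stats(episodes: List[Dict]) -> Dict[str, float]:
    """Summarize how often tools are used and how often answers are correct.

    Each episode is assumed to be a dict with:
      - "num_tool_calls": int, tool invocations in that episode
      - "correct": bool, whether the final answer was right
    """
    total = len(episodes)
    if total == 0:
        raise ValueError("need at least one episode")
    calls = sum(e["num_tool_calls"] for e in episodes)
    with_tools = sum(1 for e in episodes if e["num_tool_calls"] > 0)
    correct = sum(1 for e in episodes if e["correct"])
    return {
        "avg_tool_calls": calls / total,       # mean calls per episode
        "tool_call_rate": with_tools / total,  # share of episodes using any tool
        "accuracy": correct / total,
    }


# Made-up episodes for illustration only.
sample = [
    {"num_tool_calls": 2, "correct": True},
    {"num_tool_calls": 0, "correct": True},
    {"num_tool_calls": 1, "correct": False},
    {"num_tool_calls": 0, "correct": True},
]

stats = tool_call_stats(sample)
print(stats)
```

A lower `avg_tool_calls` at equal or higher `accuracy` would be consistent with the stated objective of reducing unnecessary tool calls.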

Project Links

- Project website: https://visual-agent.github.io/
- GitHub repository: https://github.com/Visual-Agent/DeepEyesV2
- arXiv paper: https://arxiv.org/pdf/2511.05271

Application Scenarios of DeepEyesV2

- Intelligent Q&A and information retrieval: Users can upload images and ask questions; DeepEyesV2 combines visual recognition and web search to deliver precise answers.
- Education and learning assistance: Supports students by interpreting images and providing reasoning-based study help.
- Content creation and editing: Analyzes image content to provide editing suggestions or generate related text.
- Customer service and tech support: Uses image recognition and web search to diagnose issues and assist users.
- Healthcare: Helps doctors interpret medical images and provides health information or preliminary assessments using combined perception and search.
