RynnEC – The World Understanding Model launched by Alibaba DAMO Academy

What is RynnEC?

RynnEC is a World Understanding Model (MLLM) developed by Alibaba DAMO Academy, specifically designed for embodied cognition tasks. The model can comprehensively analyze objects in a scene from 11 dimensions such as position, function, and quantity. It supports object understanding, spatial understanding, and video object segmentation. RynnEC builds continuous spatial perception based solely on video sequences without requiring 3D models, enabling flexible interaction. RynnEC provides powerful semantic understanding capabilities for embodied intelligence, helping robots better comprehend the physical world.

Main Features of RynnEC

-

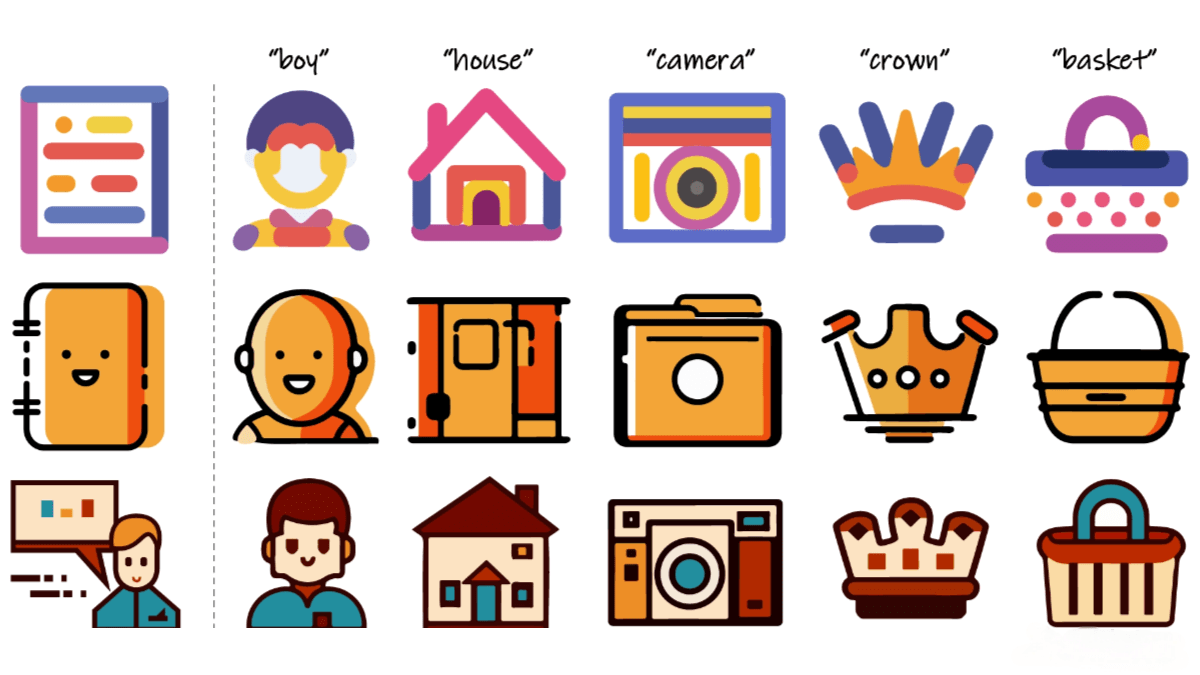

Object Understanding: RynnEC can analyze objects in a scene from multiple dimensions (such as position, function, quantity) and supports detailed description and classification of objects.

-

Spatial Understanding: Based on video sequences, it establishes continuous spatial perception, supports 3D perception, and understands spatial relationships between objects.

-

Video Object Segmentation: Implements object segmentation in videos according to text instructions, allowing precise annotation of specific areas or objects.

-

Flexible Interaction: Supports natural language-based interaction, enabling users to communicate with the model in real time and receive feedback.

Technical Principles of RynnEC

-

Multimodal Fusion: Combines video data (including images and video sequences) with natural language text through multimodal fusion technology, allowing the model to process both visual and linguistic information. Video features are extracted using video encoders such as SigLIP-NaViT, followed by semantic understanding with a language model.

-

Spatial Perception: The model builds continuous spatial perception based on video sequences without additional 3D models. It uses temporal sequence information and spatial relation modeling to understand object locations and movements in space.

-

Object Segmentation: Uses text-instruction-guided video object segmentation technology, enabling the model to recognize and segment specific targets in the video based on user commands. Masking and region annotation techniques are employed for precise segmentation of specific areas in video frames.

-

Training and Optimization: RynnEC is trained on large-scale annotated datasets, including image question answering, video question answering, and video object question answering in various formats. It employs a staged training strategy to gradually optimize the model’s multimodal understanding and generation capabilities. It supports LoRA (Low-Rank Adaptation) technology to further enhance model performance by merging weights.

Project Repository

Application Scenarios of RynnEC

-

Home Service Robots: Helps home robots understand commands and accurately locate and manipulate objects in the home environment, such as “pick up the remote control,” enhancing home automation.

-

Industrial Automation: Assists robots in industrial settings to recognize and operate objects on production lines, completing complex tasks such as “place the red part on the blue tray,” improving production efficiency.

-

Intelligent Security: Enables real-time tracking of targets through video surveillance, such as “monitor red vehicles,” enhancing the intelligence and responsiveness of security systems.

-

Medical Assistance: Allows medical robots to understand instructions and perform tasks like “deliver medicine to ward 302,” improving accuracy and efficiency in medical services.

-

Education and Training: Supports teaching through video segmentation technology, for example, “display cell structures,” enhancing students’ understanding of complex concepts and learning experience.

Related Posts