OneCAT – a unified multimodal model jointly launched by Meituan and Shanghai Jiao Tong University

What is OneCAT?

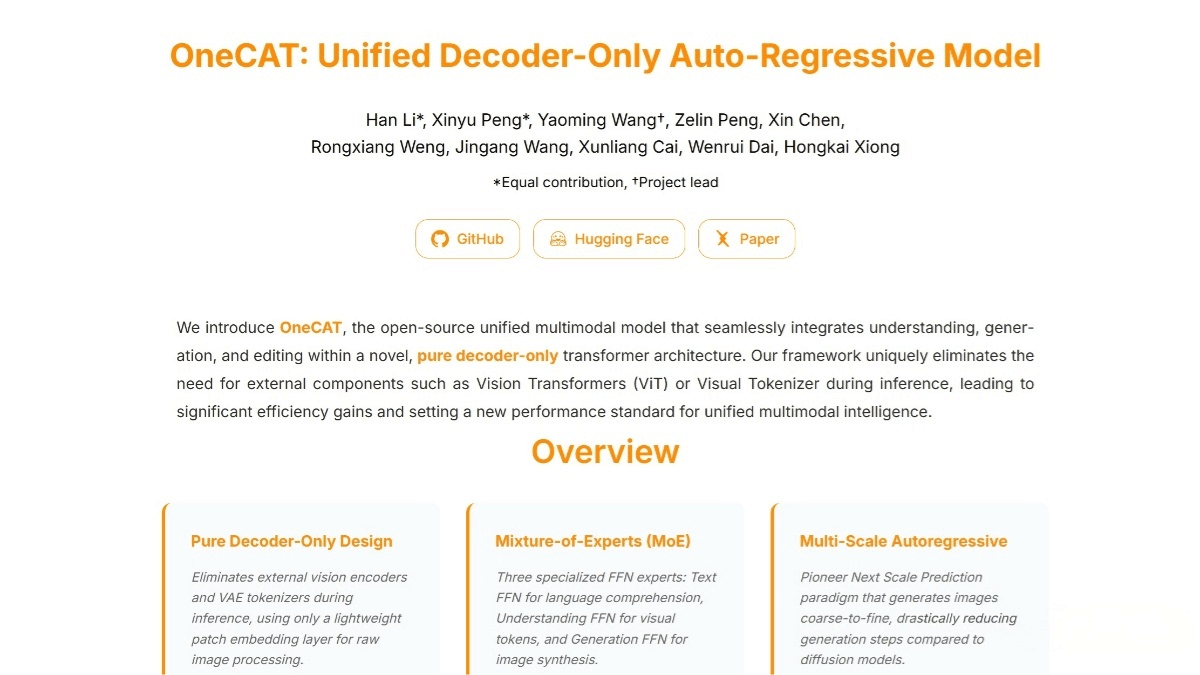

OneCAT is a new unified multimodal model launched by Meituan. Built on a pure decoder architecture, it seamlessly integrates multimodal understanding, text-to-image generation, and image editing. Unlike traditional multimodal models that rely on external vision encoders and tokenizers, OneCAT adopts a modality-specific Mixture of Experts (MoE) structure and a multi-scale autoregressive mechanism to achieve efficient multimodal processing. It demonstrates strong performance when handling high-resolution image inputs and outputs. Through its innovative scale-aware adapters and multimodal multi-functional attention mechanism, OneCAT further enhances visual generation capabilities and cross-modal alignment.

OneCAT’s Key Functions

-

Multimodal Understanding: Efficiently handles multimodal tasks involving both images and text. Without relying on external vision encoders or tokenizers, it achieves deep understanding of text-image content directly within its pure decoder architecture.

-

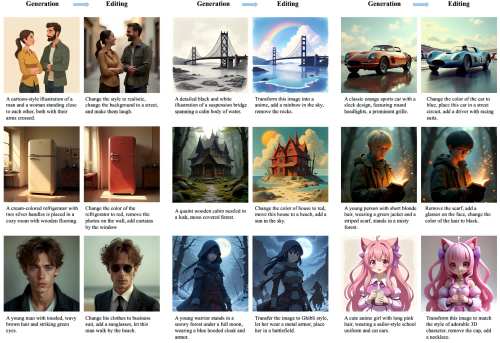

Text-to-Image Generation: Generates high-quality images from text descriptions. Leveraging the multi-scale autoregressive mechanism, it progressively predicts visual tokens from low to high resolution, enabling efficient generation with excellent results.

-

Image Editing: Supports instruction-based image editing by conditioning the visual generation process on reference images and editing instructions. This requires no additional architectural modifications and enables powerful conditional generation for precise local and global image adjustments.

Technical Principles of OneCAT

-

Pure Decoder Architecture: OneCAT is built on a pure decoder autoregressive Transformer model, eliminating the need for external vision components such as vision transformers (ViTs) or visual tokenizers. This significantly simplifies the model design, reduces computational overhead, and provides efficiency advantages, especially for high-resolution inputs.

-

Modality-Specific Mixture of Experts (MoE) Structure: The model includes three specialized feed-forward network (FFN) experts to handle text tokens, continuous visual tokens, and discrete visual tokens. These are used for language understanding, multimodal understanding, and image synthesis. All query, key, value (QKV), and attention layers are shared across modalities and tasks, which improves parameter efficiency and strengthens cross-modal alignment.

-

Multi-Scale Visual Autoregressive Mechanism: Introduced into the large language model (LLM), this mechanism generates images hierarchically from coarse to fine, predicting visual tokens step by step from the lowest to the highest resolution. This dramatically reduces decoding steps while maintaining state-of-the-art performance.

-

Multimodal Multi-Functional Attention Mechanism: Based on PyTorch FlexAttention, this design allows the model to flexibly adapt to multiple modalities and tasks. Text tokens are processed with causal attention, continuous visual tokens with full attention, and multi-scale discrete visual tokens with block causal attention.

Project Links for OneCAT

-

Official Website: https://onecat-ai.github.io/

-

GitHub Repository: https://github.com/onecat-ai/onecat

-

HuggingFace Model Hub: https://huggingface.co/onecat-ai/OneCAT-3B

-

arXiv Technical Paper: https://arxiv.org/pdf/2509.03498

Application Scenarios of OneCAT

-

Intelligent Customer Service and Content Moderation: With strong multimodal understanding, OneCAT can efficiently process image and text content. It can be applied to customer service systems to understand user-uploaded multimodal inputs and provide accurate responses, or in content moderation to automatically detect and filter out inappropriate text-image content.

-

Creative Design and Digital Content Creation: Its text-to-image generation capability allows the production of high-quality images from textual descriptions. This helps designers and creators generate ideas quickly, producing tailored visual content for advertising design, film special effects, game development, and early-stage concept design.

-

Advertising Design and Marketing: OneCAT can generate image materials directly from ad copy, improving design efficiency. It can also produce personalized advertising content by generating visuals tailored to different target audiences.

-

Film Post-Production: OneCAT’s image editing capabilities can be applied in film post-production tasks such as image restoration, style transfer, and special effects, helping production teams rapidly realize creative effects and improve workflow efficiency.

-

Education and Learning: In education, OneCAT can generate images relevant to teaching content, helping students better understand and memorize knowledge. For example, it can create illustrations for scientific concepts or generate scene images based on descriptions of historical events.

Related Posts