veCLI – A Command-Line AI Tool Released by ByteDance Volcano Engine

What is veCLI?

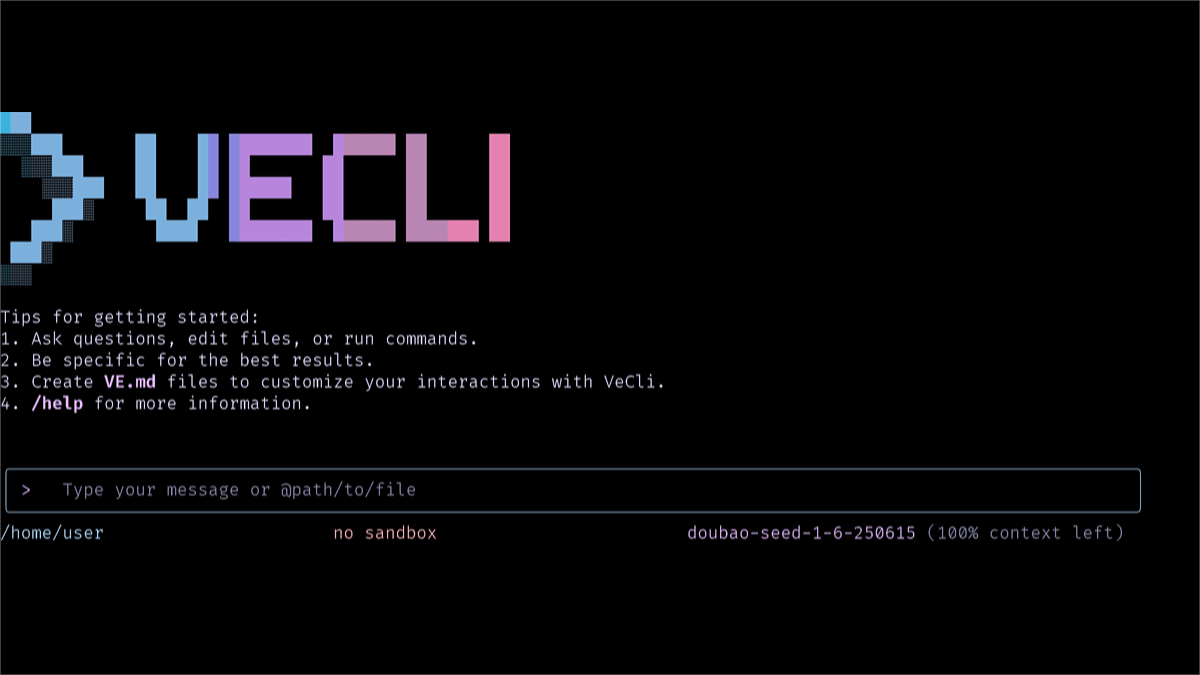

veCLI is a command-line AI tool from ByteDance’s Volcano Engine, aimed at improving development efficiency. It integrates Doubao LLM 1.6 along with other models, so developers can work in natural language from the terminal to generate code and deploy it locally without memorizing complex commands. veCLI authenticates with access keys, lets users switch models as needed, and connects to Volcano Engine cloud services to streamline the path from build to deployment. It can also be extended through configuration.

Key Features of veCLI

- Seamless LLM Integration: Deep integration with Doubao LLM 1.6, plus support for third-party models such as Kimi-K2 and DeepSeek v3.1. Developers can access Volcano Ark LLMs and Volcano Cloud products directly from the terminal.
- Multi-Step Reasoning & Problem Solving: Uses the “Think–Act” cycle (ReAct), which lets the AI assistant reason through a problem in multiple steps, much as a developer would.
- Natural Language Code Generation: Developers can type natural language prompts in the terminal to generate code and deploy it locally, without having to remember complex commands.
- Secure Key-Based Authentication: Login uses Volcano Engine AK/SK credentials, providing enterprise-level identity verification and authorization for access and operations.
- Flexible Model Switching: Developers can switch between models with a command to match the task at hand.
- Cloud Service Integration: Integration with the Volcano Engine MCP Server lets users invoke cloud services from build through deployment, making cloud AI application development more efficient.
- Extensibility: veCLI can be extended through the settings.json file, for example by configuring Feishu MCP.
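
The Think–Act cycle named in the feature list can be illustrated with a small loop. This is a minimal sketch of the general ReAct pattern, not veCLI's actual implementation: the names `react_loop`, `scripted_think`, and `fake_list_files` are hypothetical, and a scripted function stands in for the LLM so the example runs offline.

```python
from typing import Callable, Dict, List, Tuple

# A step is ("act", tool_name, tool_input) or ("finish", answer, "").
Step = Tuple[str, str, str]


def react_loop(
    task: str,
    think: Callable[[str, List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate between thinking and acting until the policy finishes."""
    observations: List[str] = []
    for _ in range(max_steps):
        # Think: decide the next step from the task and what has been seen so far.
        kind, name, arg = think(task, observations)
        if kind == "finish":
            return name  # for a "finish" step, `name` carries the answer
        # Act: run the chosen tool and record its output as an observation.
        observations.append(tools[name](arg))
    raise RuntimeError("step budget exhausted before an answer was produced")


def scripted_think(task: str, observations: List[str]) -> Step:
    """Hypothetical stand-in for the LLM: call one tool, then answer from it."""
    if not observations:
        return ("act", "list_files", ".")
    return ("finish", f"{task}: {observations[-1]}", "")


def fake_list_files(path: str) -> str:
    """Hypothetical stand-in tool; a real agent would read the file system."""
    return "main.py, README.md"


if __name__ == "__main__":
    print(react_loop("files found", scripted_think, {"list_files": fake_list_files}))
```

The step budget (`max_steps`) is a common safeguard in loops of this kind, since a model that never decides to finish would otherwise run indefinitely.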

How to Use veCLI

- Access the Platform: Visit the official veCLI page at npmjs.com.
- Install: Run `npx @volcengine/vecli@latest` for quick execution, or install globally with `npm install -g @volcengine/vecli`.
- Login: Run `vecli login` and follow the prompts to log in with your Volcano Engine account.
- Use: Enter `vecli` in the terminal to start interacting with the AI assistant via natural language for tasks such as code generation and problem solving.
- Switch Models: Use the `vecli model` command to switch to different models for various tasks.
- Configure Extensions: Edit the settings.json file to configure extensions such as Feishu integration, enhancing workflow efficiency.
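
The two install options above (a global install versus one-off execution through `npx`) can be wrapped in a small helper for scripts that need to launch veCLI. This is a sketch under assumptions: the function name `vecli_command` is hypothetical, and it assumes subcommands such as `login` pass through `npx` unchanged, which this article does not confirm.

```python
import shutil
from typing import Callable, List, Optional


def vecli_command(
    extra_args: Optional[List[str]] = None,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Build the argument list for launching veCLI.

    Prefers a globally installed `vecli` binary; otherwise falls back to
    the one-off `npx @volcengine/vecli@latest` form from the install step.
    """
    args = list(extra_args or [])
    if which("vecli"):
        return ["vecli", *args]
    # Assumption: arguments after the package name are forwarded to veCLI.
    return ["npx", "@volcengine/vecli@latest", *args]


if __name__ == "__main__":
    # Print the command rather than running it, so the sketch has no side effects.
    print(" ".join(vecli_command(["login"])))
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the default `shutil.which` checks the current `PATH`.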

Application Scenarios of veCLI

- Code Generation & Optimization: Developers can describe their needs in natural language to generate code snippets or entire projects, reducing repetitive coding.
- Troubleshooting & Problem Solving: When issues come up during development, veCLI can suggest solutions or fixes based on a description of the problem, helping developers resolve them faster.
- Cloud Service Deployment: With Volcano Engine cloud service integration, developers can deploy code directly from the terminal, covering the path from development to production in one place and simplifying deployment workflows.
- Documentation Generation & Query: veCLI can generate code comments, API documentation, and similar material to support team collaboration and future maintenance. It can also look up related technical docs to provide learning support on the spot.
- Multi-Model Task Switching: Developers can switch between models based on task requirements, for instance choosing a more precise text generation model when a task calls for it.
