Wan2.2-Animate – Alibaba Tongyi’s open-source motion generation model

What is Wan2.2-Animate?

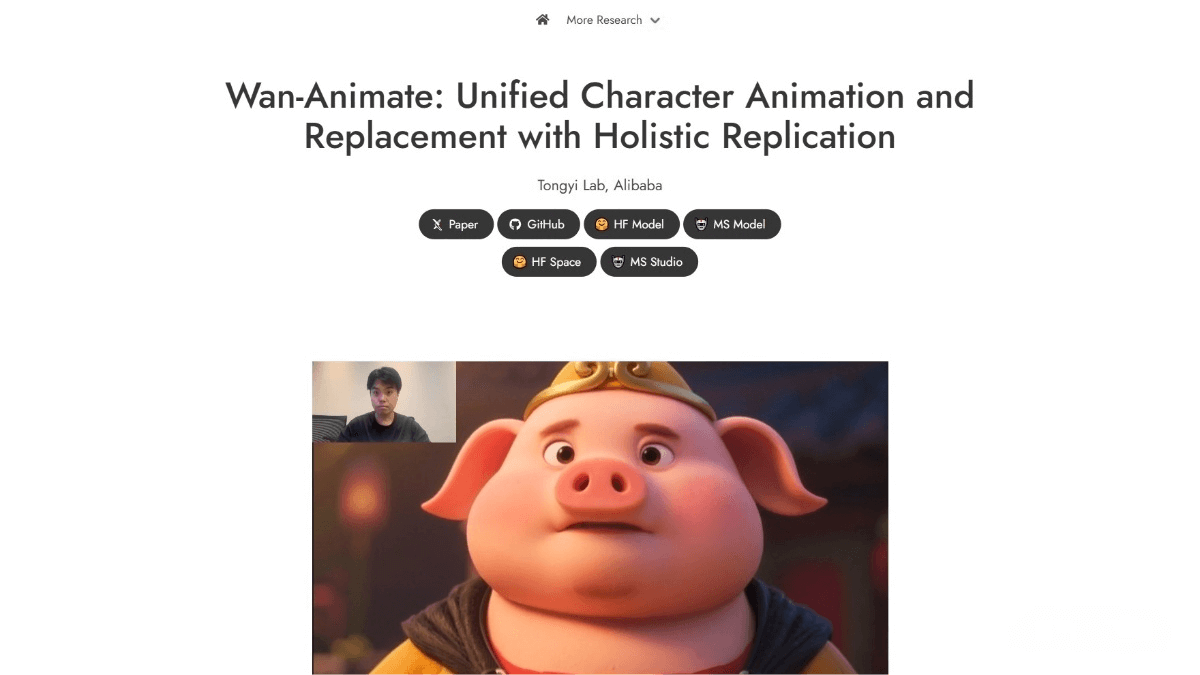

Wan2.2-Animate is a motion generation model developed by Alibaba’s Tongyi team. It supports two modes: motion imitation and character replacement. Given a performer’s video, it replicates the performer’s facial expressions and body movements to generate realistic animated character videos. It can also replace the character in the original video while matching the scene’s lighting and color tone.

Built on the Wan model, it controls body movements through spatially aligned skeletal signals and recreates expressions using implicit facial features extracted from the source video frames, which makes character video generation both controllable and expressive. Wan2.2-Animate can be tried online on the Tongyi Wanxiang official website.

Key Features of Wan2.2-Animate

- Motion imitation: Given a character image and a reference video, the model transfers the movements and expressions from the video onto the character in the image, bringing the static image to life.
- Character replacement: While retaining the original video’s motion, expressions, and environment, the model replaces the character in the video with the character from the input image and blends it into the scene.

Technical Principles of Wan2.2-Animate

- Input paradigm: The Wan model’s input paradigm is adapted for character animation tasks by unifying reference image input, temporal frame guidance, and environmental information into a common symbolic representation.
- Body movement control: Spatially aligned skeletal signals are used to replicate body actions. These signals describe body movements precisely and, when combined with the character image, enable accurate control of the character’s body motion.
- Facial expression control: Implicit facial features extracted from the source video frames serve as driving signals to reproduce facial expressions, including subtle expression changes.
- Environmental integration: To improve blending with the new environment during character replacement, an auxiliary Relighting LoRA module is introduced. It keeps the character’s appearance consistent while matching lighting and color tone to the target environment.
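
To make "spatially aligned" more concrete, here is a minimal sketch that draws a 2D pose onto a grid with the same height and width as a video frame, so each cell of the control signal lines up with the same pixel position in the frame. This is only an illustration and not the model's actual code: the function `rasterize_skeleton`, the joint names, and the tiny 8x8 frame size are all hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


def rasterize_skeleton(
    keypoints: Dict[str, Point],
    bones: List[Tuple[str, str]],
    height: int,
    width: int,
) -> List[List[int]]:
    """Draw each bone as a line of 1s on a height x width grid of 0s."""
    canvas = [[0] * width for _ in range(height)]
    for start, end in bones:
        x0, y0 = keypoints[start]
        x1, y1 = keypoints[end]
        # Sample along the bone at sub-pixel spacing so the line has no gaps.
        steps = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
        for i in range(steps + 1):
            t = i / steps
            x = round(x0 + t * (x1 - x0))
            y = round(y0 + t * (y1 - y0))
            if 0 <= x < width and 0 <= y < height:
                canvas[y][x] = 1
    return canvas


# Hypothetical pose for a single frame of an 8x8 video.
pose = {"head": (4, 1), "hip": (4, 5), "left_foot": (2, 7), "right_foot": (6, 7)}
bones = [("head", "hip"), ("hip", "left_foot"), ("hip", "right_foot")]
skeleton_map = rasterize_skeleton(pose, bones, height=8, width=8)

for row in skeleton_map:
    print("".join("#" if cell else "." for cell in row))
```

Because the skeleton map shares the frame's coordinate grid, a signal of this kind can be paired with the frame position by position, which is presumably what makes it convenient as a body-motion control input.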

How to Use Wan2.2-Animate

- Visit the Tongyi Wanxiang official website and log in.
- Upload a character image and a reference video.
- Choose either motion imitation or character replacement mode.
- Click generate, and the model will automatically process and output the result.

Project Links

- Official site: https://humanaigc.github.io/wan-animate/
- HuggingFace: https://huggingface.co/Wan-AI/Wan2.2-Animate-14B
- arXiv paper: https://arxiv.org/pdf/2509.14055
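
For readers who would rather fetch the weights than use the website, the following is a minimal sketch using the `huggingface_hub` library's `snapshot_download` function. The repository ID comes from the HuggingFace link above. The helper name `download_weights` and the default target directory are assumptions made for illustration, and the sketch covers downloading only, not running inference.

```python
REPO_ID = "Wan-AI/Wan2.2-Animate-14B"  # taken from the HuggingFace link above


def download_weights(local_dir: str = "./Wan2.2-Animate-14B") -> str:
    """Download the full model repository and return the local path.

    The default local_dir is an arbitrary choice for this sketch.
    """
    # Imported lazily so this module loads even if huggingface_hub is missing.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)
```

Calling `download_weights()` requires `pip install huggingface_hub` and a network connection. The repository holds a 14B-parameter model, so expect a large download.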

Application Scenarios of Wan2.2-Animate

- Video editing: Replace characters in videos with animated characters that blend into the original environment, creating natural visual effects.
- Game development: Generate real-time character animations from players’ motion capture data, making character movements more natural and immersive.
- Virtual and augmented reality (VR/AR): Create realistic virtual characters that interact naturally with users, enhancing immersion and authenticity.
- Education and training: Introduce animated characters as teaching assistants, using expressive actions and emotions to engage learners and increase interactivity.
