KAT-Dev-32B – Code Large Language Model Released by Kuaishou’s Kwaipilot

What is KAT-Dev-32B?

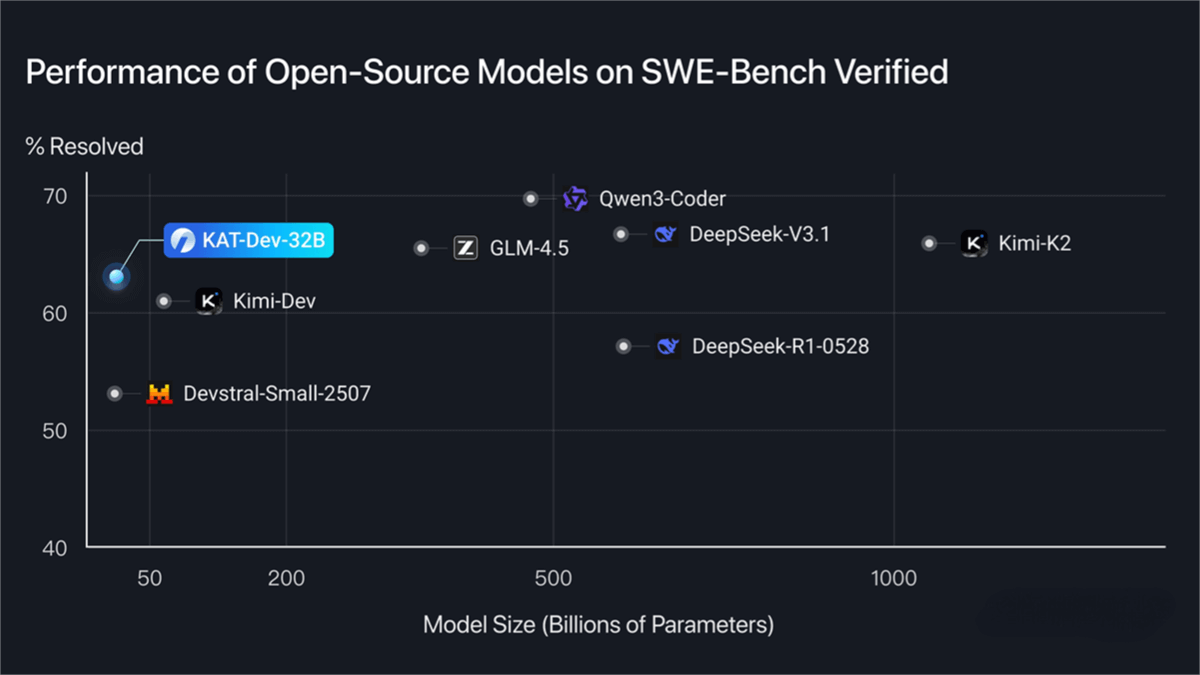

KAT-Dev-32B is an open-source, code-focused large language model released by the Kwaipilot team at Kuaishou. It has 32 billion parameters. On the SWE-Bench Verified benchmark it resolves 62.4% of tasks, which ranked it 5th among open-source models of all sizes at release. The model was trained in several stages (mid-training, supervised fine-tuning (SFT), reinforcement fine-tuning (RFT), and large-scale agentic reinforcement learning (RL)) to strengthen tool use, multi-turn dialogue understanding, and instruction following. It supports mainstream programming languages such as Python, JavaScript, Java, C++, and Go, and its weights are available on Hugging Face.
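
To make the 62.4% figure concrete: SWE-Bench Verified contains 500 human-validated task instances, and an instance counts as resolved only when the model's patch makes the issue's failing tests pass (FAIL_TO_PASS) without breaking tests that passed before (PASS_TO_PASS). At 500 instances, 62.4% corresponds to 312 resolved tasks. The sketch below computes a resolve rate under that rule; the `InstanceResult` type and its field names are hypothetical and do not reflect the official evaluation harness's data format.

```python
from dataclasses import dataclass


@dataclass
class InstanceResult:
    """Hypothetical per-task outcome; not the official SWE-bench schema."""
    fail_to_pass_ok: bool  # tests that failed before the patch now pass
    pass_to_pass_ok: bool  # tests that passed before the patch still pass


def is_resolved(result: InstanceResult) -> bool:
    # A task counts as resolved only if both test groups succeed.
    return result.fail_to_pass_ok and result.pass_to_pass_ok


def resolve_rate(results: list[InstanceResult]) -> float:
    if not results:
        raise ValueError("no results to score")
    resolved = sum(is_resolved(r) for r in results)  # bools sum as 0/1
    return resolved / len(results)


if __name__ == "__main__":
    # Illustrative data only: 312 resolved out of 500 instances.
    results = (
        [InstanceResult(True, True)] * 312
        + [InstanceResult(True, False)] * 100   # fix worked but broke other tests
        + [InstanceResult(False, True)] * 88    # fix did not work
    )
    print(f"{resolve_rate(results):.1%}")  # prints 62.4%
```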

Key Features of KAT-Dev-32B

- Code Generation: Generates code from user requirements in multiple mainstream languages, including Python, JavaScript, Java, C++, and Go.
- Code Understanding: Helps developers interpret complex code logic and quickly grasp its structure and functionality.
- Bug Fixing: Detects errors in code and suggests fixes, speeding up development.
- Performance Optimization: Suggests changes that improve the runtime efficiency of code.
- Test Case Generation: Generates test cases automatically to increase test coverage and help ensure software quality.
- Multi-Turn Dialogue Understanding: Keeps track of a multi-turn conversation to better understand user needs and provide more precise coding solutions.
- Domain Knowledge Integration: Draws on domain-specific knowledge to generate code that follows industry standards.
- Real Development Workflow Support: Designed to work within real-world development processes, helping developers in practical programming environments.
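
Multi-turn understanding depends on the caller resending the whole conversation with each request. Chat models on Hugging Face typically take a list of `{"role": ..., "content": ...}` messages that the tokenizer's chat template renders into a prompt. The `Conversation` class below is a hypothetical helper, not part of any KAT-Dev or Hugging Face API; it only shows how earlier turns are kept so that a follow-up such as "now add a docstring" can refer back to them.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Conversation:
    """Hypothetical helper (not a KAT-Dev or Hugging Face API) that stores
    chat turns in the role/content message format used by chat templates."""

    system_prompt: str = "You are a helpful coding assistant."
    turns: list[dict[str, str]] = field(default_factory=list)

    def add_user(self, content: str) -> None:
        self.turns.append({"role": "user", "content": content})

    def add_assistant(self, content: str) -> None:
        self.turns.append({"role": "assistant", "content": content})

    def messages(self, max_turns: Optional[int] = None) -> list[dict[str, str]]:
        """Return the system prompt followed by the stored turns.

        If max_turns is given, only the most recent max_turns turns are kept,
        a crude way to stay within a context budget.
        """
        if max_turns is None:
            history = self.turns
        elif max_turns <= 0:
            history = []
        else:
            history = self.turns[-max_turns:]
        return [{"role": "system", "content": self.system_prompt}, *history]


if __name__ == "__main__":
    conv = Conversation()
    conv.add_user("Write a Python function that reverses a string.")
    conv.add_assistant("def reverse(s):\n    return s[::-1]")
    # This follow-up only makes sense because the earlier turns are resent.
    conv.add_user("Now add a docstring and type hints.")
    for msg in conv.messages():
        print(f'{msg["role"]}: {msg["content"].splitlines()[0]}')
```

Truncating by turn count is the simplest policy; a real client would more likely budget by token count.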

Technical Principles of KAT-Dev-32B

- Transformer Architecture: Built on the Transformer architecture, which handles long sequences and captures long-range dependencies in code, giving a strong foundation for code generation and understanding.
- Pretraining + Fine-tuning: Pretrained on large-scale code datasets to learn general programming patterns and language features, then fine-tuned on specific tasks such as code generation and comprehension.
- Reinforcement Learning Optimization: Further optimized with reinforcement learning so that generated code better follows programming conventions and logic, improving its quality and usability.
- Multi-Task Learning: Trained on a range of programming tasks such as code generation, completion, and debugging, so that skills learned on one task support the others.
- Context Awareness: Understands code context, including variable definitions and function calls, to produce snippets that are consistent with the surrounding code.
- Domain Knowledge Fusion: Incorporates domain-specific knowledge during training so that generated code follows the conventions and standards of specific fields.
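
The long-range-dependency point comes from self-attention: in a decoder-only Transformer, each position computes weights over every earlier position, however far back it is. The pure-Python sketch below implements single-head scaled dot-product attention with a causal mask, softmax(QKᵀ/√d)V, on toy vectors. It is the generic textbook mechanism, not KAT-Dev-32B's actual implementation, and it omits learned projections, multiple heads, and positional encodings.

```python
import math

Matrix = list[list[float]]


def softmax(xs: list[float]) -> list[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v hold one vector per token position. Position i may attend
    only to positions 0..i. Returns (outputs, attention weights per row).
    """
    d = len(q[0])
    outputs, all_weights = [], []
    for i, qi in enumerate(q):
        # Scores against every earlier position and itself, scaled by sqrt(d).
        scores = [
            sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors this position is allowed to see.
        out = [
            sum(w * v[j][c] for j, w in enumerate(weights))
            for c in range(len(v[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    # The last query matches the first key, so the last token attends
    # mostly to position 0 even though it is the farthest one back.
    q = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [4.0, 0.0]]
    k = [[4.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
    _, weights = causal_attention(q, k, k)
    print([round(w, 3) for w in weights[-1]])
```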

Project Repository

- Hugging Face Model Hub: https://huggingface.co/Kwaipilot/KAT-Dev
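
A minimal loading sketch, assuming the model ID `Kwaipilot/KAT-Dev` from the link above, a recent Hugging Face `transformers` release with PyTorch and `accelerate` installed (needed for `device_map="auto"`), and enough GPU memory for a 32B model (roughly 64 GB for the weights alone in bf16). It follows the generic `AutoModelForCausalLM` plus chat-template pattern; the `build_messages` and `generate` helpers and the default generation length are illustrative choices, so check the model card for the officially recommended settings.

```python
from typing import Optional

MODEL_ID = "Kwaipilot/KAT-Dev"  # taken from the Hugging Face link above


def build_messages(prompt: str, system: Optional[str] = None) -> list[dict[str, str]]:
    """Assemble chat messages in the role/content format chat templates expect."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def generate(prompt: str, max_new_tokens: int = 512) -> str:
    # Imported lazily so build_messages works without the heavy dependencies;
    # calling this function downloads and loads the full model weights.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    # Render the messages with the model's own chat template.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Usage: `print(generate("Write a Python function that checks whether a string is a palindrome."))`. Loading the model inside every call keeps the sketch short but is wasteful; a real application would load the tokenizer and model once and reuse them.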

Application Scenarios of KAT-Dev-32B

- Code Understanding: Helps developers quickly grasp complex code logic and structure, making maintenance and refactoring easier.
- Bug Fixing: Detects code errors automatically and proposes fixes, reducing debugging time.
- Performance Optimization: Analyzes code and suggests improvements to runtime efficiency.
- Test Case Generation: Generates test cases automatically to improve coverage and help ensure quality.
- Multi-Language Support: Works across multiple mainstream programming languages, covering diverse development needs.
- Development Assistance: Provides real-time code suggestions and auto-completion during development, improving the developer experience.
- Education & Learning: Offers example code and explanations for learners, supporting programming education.
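
The bug-fixing and test-generation scenarios both produce code that is worth verifying by execution before it is accepted. The sketch below runs a candidate implementation against named assertion snippets and reports which pass. `check_candidate`, the `mean` functions, and the rule that `mean([])` returns `0.0` are all hypothetical examples, and `exec` on untrusted model output should only ever run inside a sandbox.

```python
def check_candidate(source: str, tests: dict[str, str]) -> dict[str, bool]:
    """Execute candidate source, then run each named test snippet against it.

    Returns a mapping from test name to pass/fail.
    WARNING: exec() runs arbitrary code; sandbox real model output.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
    except Exception:
        # Code that does not even load (e.g. a SyntaxError) fails every test.
        return {name: False for name in tests}
    results = {}
    for name, snippet in tests.items():
        try:
            exec(snippet, dict(namespace))  # shallow copy so tests don't share globals
            results[name] = True
        except Exception:  # includes AssertionError and runtime errors
            results[name] = False
    return results


# Hypothetical buggy code, a candidate fix, and the tests that judge them.
BUGGY = "def mean(xs):\n    return sum(xs) / len(xs)\n"
FIXED = "def mean(xs):\n    return sum(xs) / len(xs) if xs else 0.0\n"
TESTS = {
    "basic": "assert mean([1, 2, 3]) == 2",
    "empty": "assert mean([]) == 0.0",
}

if __name__ == "__main__":
    print("buggy:", check_candidate(BUGGY, TESTS))  # empty list raises ZeroDivisionError
    print("fixed:", check_candidate(FIXED, TESTS))
```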
