Lynx – High-Fidelity Personalized Video Generation Model Released by ByteDance

What is Lynx?

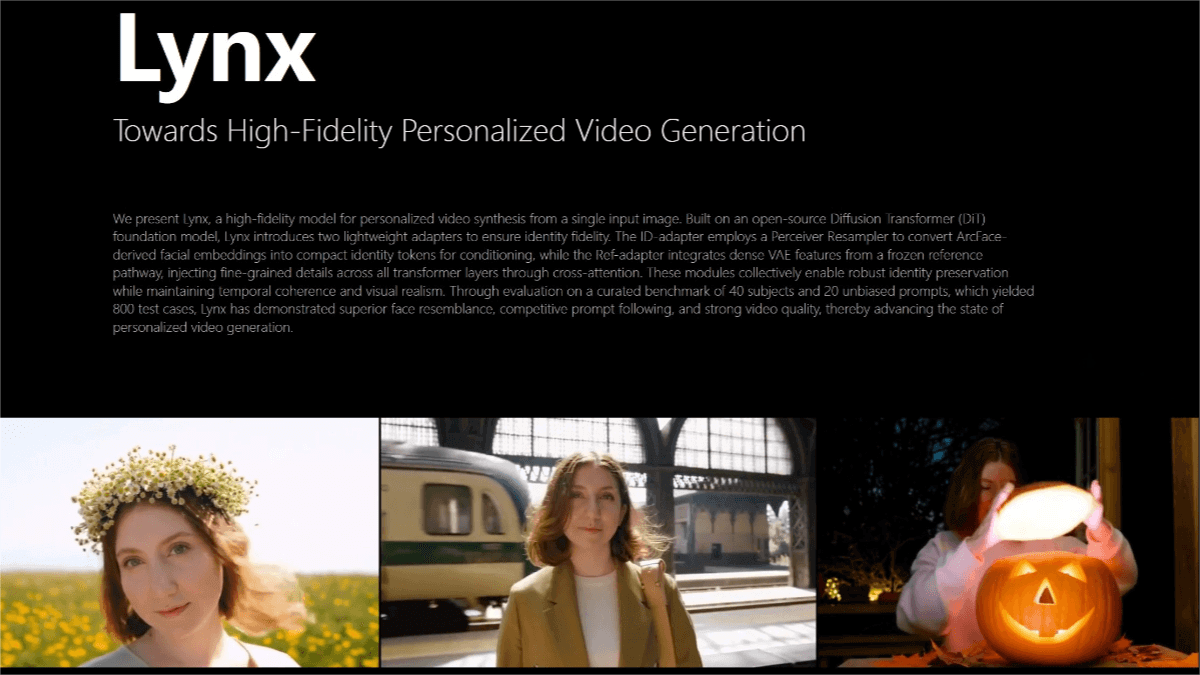

Lynx is a high-fidelity personalized video generation model released by ByteDance. From a single portrait photo, it generates videos that keep the subject's identity consistent. Lynx is built on a Diffusion Transformer (DiT) base model and adds two lightweight adapter modules: an ID-adapter that controls personal identity and a Ref-adapter that preserves facial details. A face encoder captures facial features, X-Nemo is used to enhance expressions, and the LBM algorithm simulates lighting effects; together these keep the identity consistent across different scenes. A cross-attention adapter combines text prompts with facial features so that the generated video matches the described scene, and a “time perceiver” that models motion physics maintains temporal coherence. In the reported evaluations, Lynx outperforms comparable methods in facial similarity, scene matching, and video quality. The model is licensed under Apache 2.0 and may be used commercially, provided the portrait rights for source images are secured.
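To make the cross-attention adapter idea concrete, here is a minimal sketch of decoupled cross-attention, the pattern popularized by IP-Adapter: video tokens attend to the text tokens and to the identity tokens in two separate attention calls, and the two results are summed. Whether Lynx combines text and face features in exactly this way is an assumption. The function names, the `id_scale` weight, and the omission of learned query/key/value projections are illustrative simplifications, not Lynx's code.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def decoupled_cross_attention(video_tokens, text_tokens, id_tokens, id_scale=1.0):
    """Hypothetical adapter layer: text and identity branches attend separately.

    Learned W_q/W_k/W_v projections are omitted, so keys and values are the
    conditioning tokens themselves. id_scale weights the identity branch.
    """
    text_out = attention(video_tokens, text_tokens, text_tokens)
    id_out = attention(video_tokens, id_tokens, id_tokens)
    return [[t + id_scale * i for t, i in zip(t_row, i_row)]
            for t_row, i_row in zip(text_out, id_out)]
```

Setting `id_scale=0.0` reduces the layer to ordinary text-conditioned cross-attention, so the scale acts as a knob for how strongly the identity signal is injected.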

Key Features of Lynx

- Personalized Video Generation: Generates identity-consistent videos from a single portrait photo.
- Identity Preservation: Ensures consistency of personal features across different scenes using face encoders and adapter modules.
- Scene Matching: Cross-attention adapters combine text prompts and facial features to generate videos that fit the specified scene.
- Temporal Coherence: Equipped with a “time perceiver” to understand motion physics and maintain consistency across the video timeline.
- High Performance: Excels in facial similarity, scene matching, and video quality, outperforming comparable technologies.
- Commercial Licensing: Apache 2.0 licensed for commercial use, provided portrait rights for source images are obtained.
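Facial similarity is commonly scored as the cosine similarity between face-recognition embeddings (for example, ArcFace vectors) of the reference photo and of the faces in the generated frames. The sketch below assumes the embeddings have already been extracted; `identity_score` and the choice to average over frames are hypothetical and are not taken from Lynx's evaluation code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def identity_score(reference_embedding, frame_embeddings):
    """Hypothetical metric: mean similarity of each frame's face to the reference face."""
    if not frame_embeddings:
        raise ValueError("need at least one frame embedding")
    scores = [cosine_similarity(reference_embedding, f) for f in frame_embeddings]
    return sum(scores) / len(scores)
```

A score near 1.0 means the generated faces point in nearly the same embedding direction as the reference; averaging over frames rewards identity that holds across the whole clip rather than in a single frame.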

Technical Principles of Lynx

- Diffusion Transformer Architecture: Built on an open-source DiT base model that progressively denoises random noise into the target video content.
- Identity Feature Extraction & Retention: Uses ArcFace to extract facial features and a Perceiver Resampler to convert the resulting feature vectors into a compact set of tokens for the adapter, keeping the subject's identity consistent in generated videos.
- Detail Enhancement & Adaptation: The lightweight ID-adapter controls identity, while the Ref-adapter preserves facial details for realistic video output.
- Cross-Attention Mechanism: Injects fine-grained details across all Transformer layers and integrates text prompts with facial features to generate scene-appropriate videos.
- 3D Video Generation: Uses a 3D VAE architecture together with a “time perceiver” that models motion physics to maintain temporal coherence.
- Adversarial Training Strategy: Uses a three-part adversarial setup (a generator, a discriminator, and an identity discriminator) to optimize performance and improve video realism.
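The Perceiver Resampler step can be sketched as a small set of latent vectors that repeatedly cross-attend to the input face features, producing a fixed number of output tokens no matter how many input tokens there are. This simplified, single-head version follows the Flamingo-style design (latents attend to the inputs concatenated with themselves, followed by a residual update) but omits learned projections and feed-forward layers. In a trained model the latents are learned parameters; here they are random. The function names, `num_layers`, and the assumption that face features are already projected to the latent width are hypothetical.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, context):
    """Single-head scaled dot-product attention with keys = values = context."""
    d = len(queries[0])
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, c)) / math.sqrt(d) for c in context])
        out.append([sum(w * c[j] for w, c in zip(weights, context)) for j in range(d)])
    return out


def perceiver_resample(input_tokens, latents, num_layers=2):
    """Compress any number of input tokens into len(latents) output tokens.

    Latents attend to inputs + latents and are updated residually; learned
    projections and the per-layer feed-forward block are omitted.
    """
    for _ in range(num_layers):
        attended = cross_attend(latents, input_tokens + latents)
        latents = [[l + a for l, a in zip(lat, att)] for lat, att in zip(latents, attended)]
    return latents


if __name__ == "__main__":
    rng = random.Random(0)
    # 4 latent queries of width 8 (learned in practice, random here).
    latents = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(4)]
    # 16 hypothetical face-feature tokens, already projected to width 8.
    face_tokens = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(16)]
    tokens = perceiver_resample(face_tokens, latents)
    print(len(tokens), len(tokens[0]))  # 4 8
```

Because the output length equals the number of latents, the downstream adapter always receives the same token count, which makes a resampler a convenient interface between a face encoder and cross-attention layers.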

Project Links

- Official Website: https://byteaigc.github.io/Lynx/
- GitHub Repository: https://github.com/bytedance/lynx
- HuggingFace Model Hub: https://huggingface.co/ByteDance/lynx

Application Scenarios of Lynx

- Digital Human Creation: Generates realistic dynamic videos for virtual anchors, customer service avatars, and other digital humans to enhance interaction.
- Film & VFX Production: Quickly creates video clips of specific characters in different scenes, assisting visual effects production while saving time and cost.
- Short Video Creation: Enables creators to produce diverse videos from a single photo, enriching content and boosting efficiency.
- Advertising & Marketing: Generates personalized video ads tailored to products or brands, enhancing appeal and reach.
- Game Development: Produces personalized character actions and expressions, improving immersion and realism in games.
- Education & Training: Generates educational or training videos, such as virtual instructors explaining lessons or demonstrating procedures.