RoboBrain-X0 – an open-source cross-ontology generalizable embodied model developed by BAAI (Beijing Academy of Artificial Intelligence)

What is RoboBrain-X0?

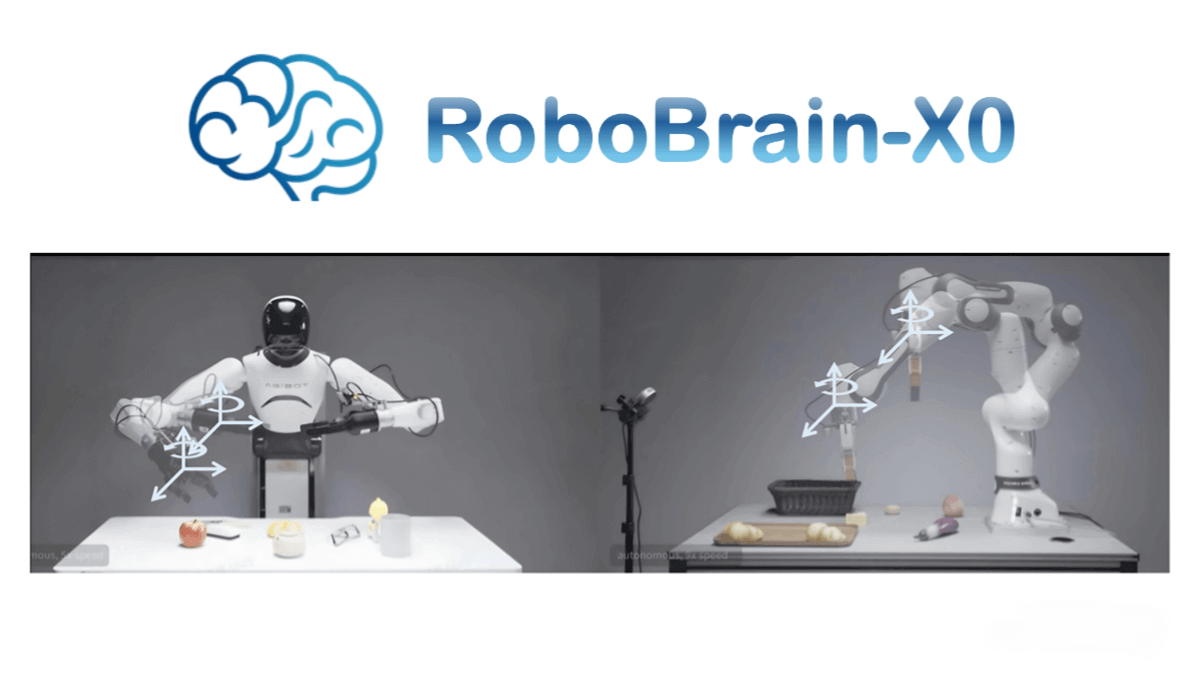

RoboBrain-X0, released by the Beijing Academy of Artificial Intelligence (BAAI), is billed as the world’s first open-source embodied model to support zero-shot cross-ontology generalization, that is, generalization across robot embodiments. Without fine-tuning, it can drive real-world robots of different morphologies through basic manipulation tasks; with a small amount of fine-tuning, it adapts across embodiments to more complex tasks. The model jointly models vision, language, and action: it decomposes a task into a general semantic action sequence that is decoupled from any specific robot “body,” then translates that sequence in real time into executable commands for each robot. This removes the one-model-per-robot limitation and lets heterogeneous embodiments share a single model.

Key Features of RoboBrain-X0

- Zero-shot cross-ontology generalization: Drives multiple real-world robots through basic tasks without per-robot fine-tuning, removing the traditional dependence on a specific hardware form.
- Few-shot fine-tuning potential: With only a small number of samples (e.g., 50), the model adapts to more complex tasks, further improving its generalization.
- Control consistency: For the same task, the action primitive sequences generated for different embodiments remain highly consistent, which supports reliable physical execution.
- Unified modeling of vision, language, and action: Integrates perception and execution in a single model, giving robots more comprehensive support.
- Efficient task decomposition: Decomposes complex tasks into universal semantic action sequences and translates them into executable instructions in real time, improving flexibility and adaptability.
- Open dataset support: The core training dataset, RoboBrain-X0-Dataset, is open-sourced, giving developers resources to accelerate research and applications in embodied intelligence.
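To make the decomposition and control-consistency points concrete, here is a minimal sketch of the general idea: one robot-independent primitive sequence is planned once, then translated into two different command formats. Everything in it is an assumption for illustration. The `Primitive` type, the `plan_pick` helper, and the two robots `arm_a` and `arm_b` are hypothetical and do not reflect RoboBrain-X0's actual interface or action vocabulary.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Primitive:
    """Embodiment-agnostic step (hypothetical): end-effector motion plus gripper state."""
    name: str
    delta_xyz: Tuple[float, float, float]  # displacement in metres, task frame
    gripper_closed: bool


def plan_pick(height_m: float) -> List[Primitive]:
    """Decompose a 'pick up the object' task into robot-independent primitives."""
    return [
        Primitive("approach", (0.0, 0.0, -height_m), False),
        Primitive("grasp", (0.0, 0.0, 0.0), True),
        Primitive("lift", (0.0, 0.0, height_m), True),
    ]


def to_arm_a(p: Primitive) -> dict:
    """Hypothetical arm A: expects millimetres and a gripper opening in percent."""
    return {
        "move_mm": tuple(round(v * 1000.0, 3) for v in p.delta_xyz),
        "gripper_open_pct": 0 if p.gripper_closed else 100,
    }


def to_arm_b(p: Primitive) -> dict:
    """Hypothetical arm B: expects metres and a named gripper command."""
    return {
        "delta_m": p.delta_xyz,
        "gripper_cmd": "close" if p.gripper_closed else "open",
    }


# One adapter per embodiment; the plan itself never changes.
ADAPTERS: Dict[str, Callable[[Primitive], dict]] = {
    "arm_a": to_arm_a,
    "arm_b": to_arm_b,
}


def translate(plan: List[Primitive], robot: str) -> List[dict]:
    """Map the shared plan onto one robot's command format."""
    return [ADAPTERS[robot](p) for p in plan]


plan = plan_pick(0.1)
for robot in ADAPTERS:
    print(robot, translate(plan, robot))
```

Both robots receive the same three steps in the same order; only the units and field names differ. In the real model the shared sequence is produced by the network rather than a hand-written planner, so this only illustrates the separation between the plan and the per-robot translation.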

Technical Principles of RoboBrain-X0

- Ontology mapping mechanism: Decomposes tasks into general semantic action sequences that are independent of robot morphology, then maps them to executable actions on each robot, which is what enables cross-ontology generalization.
- Unified action space: Represents actions as end-effector poses in SE(3) task space and uses a Unified Action Vocabulary (UAV) with an action tokenizer to align the actions of different robots in a shared discrete action primitive space, ensuring semantic consistency and transferability.
- Grouped Residual Vector Quantizer (GRVQ): Maps continuous control sequences from robots with different degrees of freedom and mechanical structures into a shared discrete action primitive space, enabling semantic alignment and transferability.
- Multimodal input and output: Accepts single-image, multi-image, and text inputs to cover diverse task scenarios, and outputs multi-dimensional action sequences that drive the robot.
- Data-driven training: Trained on large-scale real-world robot data and embodied reasoning data, together with the RoboBrain 2.0 dataset, to improve generalization and task execution.
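The quantization step can be illustrated with a toy version of grouped residual vector quantization. This is a sketch of the generic technique, not RoboBrain-X0's tokenizer: the codebooks below are hand-written rather than learned, and the choice of groups (a 3-D end-effector displacement and a 1-D gripper value) and two stages per group is an assumption made for the example. Each group is quantized independently, and within a group each stage quantizes the residual left by the previous stage.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]
Codebook = Sequence[Vector]
Group = Tuple[slice, Sequence[Codebook]]  # (dimensions covered, stage codebooks)


def nearest(codebook: Codebook, v: Sequence[float]) -> int:
    """Index of the codeword closest to v in squared Euclidean distance."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(codebook[i], v)),
    )


def rvq_encode(stages: Sequence[Codebook], v: Sequence[float]) -> List[int]:
    """Residual VQ: each stage quantizes what earlier stages left over."""
    residual = list(v)
    tokens = []
    for codebook in stages:
        idx = nearest(codebook, residual)
        tokens.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tokens


def rvq_decode(stages: Sequence[Codebook], tokens: Sequence[int]) -> List[float]:
    """Reconstruct a vector by summing the chosen codeword from every stage."""
    out = [0.0] * len(stages[0][0])
    for codebook, idx in zip(stages, tokens):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


def grvq_encode(groups: Sequence[Group], action: Sequence[float]) -> List[List[int]]:
    """Quantize each group of dimensions with its own residual stack."""
    return [rvq_encode(stages, action[dims]) for dims, stages in groups]


def grvq_decode(groups: Sequence[Group], tokens: Sequence[Sequence[int]]) -> List[float]:
    """Decode every group and concatenate the results in group order."""
    out: List[float] = []
    for (_, stages), group_tokens in zip(groups, tokens):
        out.extend(rvq_decode(stages, group_tokens))
    return out


# Toy, hand-written codebooks (assumed values; real codebooks are learned).
POS_COARSE = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.1)]
POS_FINE = [(0.0, 0.0, 0.0), (0.02, 0.0, 0.0), (0.0, 0.02, 0.0), (0.0, 0.0, 0.02)]
GRIP_COARSE = [(0.0,), (1.0,)]
GRIP_FINE = [(0.0,), (0.25,), (-0.25,)]

GROUPS: List[Group] = [
    (slice(0, 3), [POS_COARSE, POS_FINE]),   # end-effector displacement
    (slice(3, 4), [GRIP_COARSE, GRIP_FINE]), # gripper value
]

action = [0.125, 0.0, 0.0, 0.8]
tokens = grvq_encode(GROUPS, action)
print(tokens)
print(grvq_decode(GROUPS, tokens))
```

The integer tokens are what a language-model-style decoder could emit; decoding them gives an approximation of the original continuous action, with the error bounded by how fine the last stage's codebook is. Robots with different degrees of freedom would need their own mapping into these groups, which this sketch leaves out.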

Project Links

- Official Website: https://superrobobrain.github.io/
- GitHub Repository: https://github.com/FlagOpen/RoboBrain-X0
- HuggingFace Model Hub: https://huggingface.co/BAAI/RoboBrain-X0-Preview
- RoboBrain-X0 Dataset: https://huggingface.co/datasets/BAAI/RoboBrain-X0-Dataset

Application Scenarios of RoboBrain-X0

- Service robots: Suited to homes, hotels, hospitals, and similar settings for tasks such as delivery, cleaning, tidying, and companionship, improving service quality and efficiency.
- Smart manufacturing: Supports material handling, assembly, and quality inspection in factories, increasing the automation and flexibility of production.
- Logistics and warehousing: Supports sorting, handling, and stacking in logistics centers, streamlining warehouse operations and reducing labor costs.
- Education and research: Serves as a platform for robotics and AI research and teaching at universities and research institutions.
- Operations in special environments: Suited to hazardous settings such as nuclear facilities, deep-sea exploration, and space missions, where it can perform inspection, repair, and sampling in place of humans and keep people out of harm's way.
