Meta ARE – Meta's Research Platform for Evaluating AI Agents

What is Meta ARE?

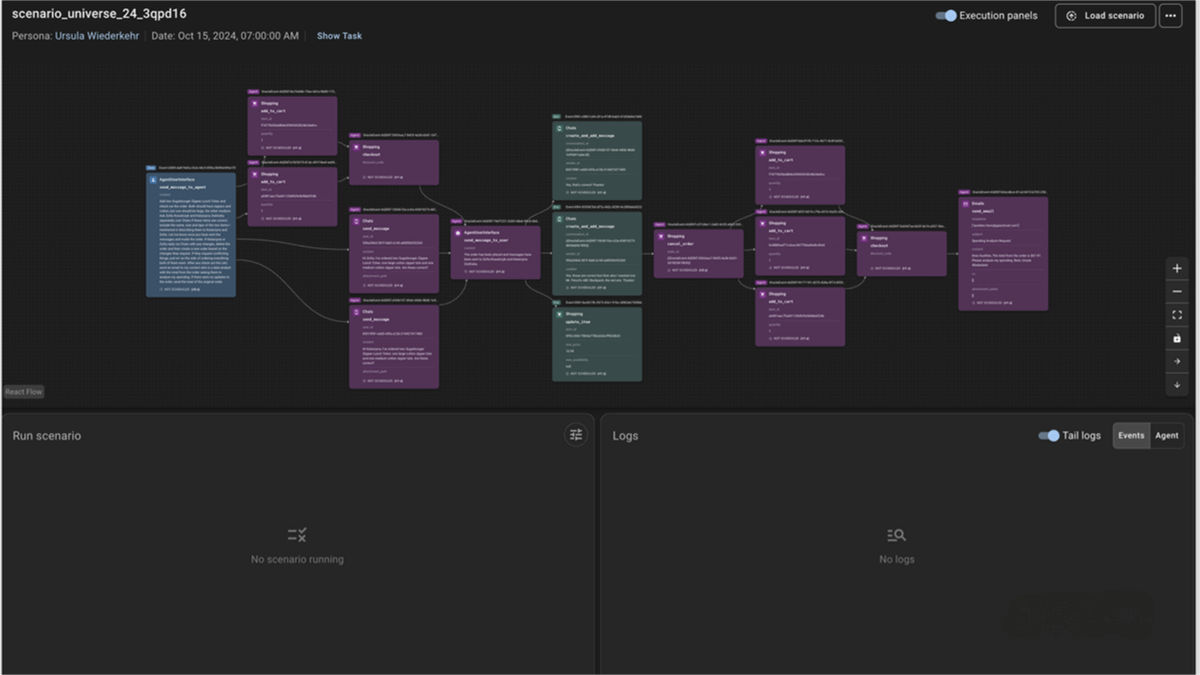

Meta ARE (Agents Research Environments) is a dynamic simulation research platform launched by Meta for training and evaluating AI agents. The platform creates time-evolving environments that simulate complex, multi-step real-world tasks, requiring agents to adapt their strategies as new information emerges and conditions change. ARE runs the Gaia2 benchmark, whose 800 scenarios across 10 domains are built around multi-step reasoning and real-world relevance. The platform provides interactive applications (such as email, calendar, and file systems) for agents to work with, and it supports multiple models and automated result collection so that the research community can run systematic evaluations.

Meta Agents Research Environments (Meta ARE) – Key Features

Dynamic Simulation:

Supports the creation of complex, time-evolving scenarios that simulate real-world multi-step tasks, requiring agents to perform continuous reasoning and adaptation.

Agent Evaluation:

Provides comprehensive benchmarking tools such as the Gaia2 benchmark, which includes 800 scenarios across 10 domains for evaluating a wide range of agent capabilities.

Interactive Applications:

Agents can interact with realistic applications such as email, calendar, file systems, and messaging tools, each with domain-specific data and behavior.

Research and Benchmarking:

Supports parallel execution, multiple model types, and automated result collection, providing systematic evaluation tools for the research community.

Ease of Use:

With quick-start guides and command-line tools, users can easily begin agent evaluation and scenario development using ARE.

Technical Principles of Meta ARE

Dynamic Environments:

Utilizes an event-driven system to introduce dynamic changes, simulating the gradual revelation of information and evolving conditions in the real world. Events are triggered by time or by agent actions, enabling environments to evolve over time.
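The idea of time-triggered and action-triggered events can be illustrated with a small sketch. This is not ARE's actual API: `ToyEnvironment`, `schedule`, `on_action`, `advance`, and `act` are hypothetical names chosen for illustration, and the environment state is just a dictionary.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class TimedEvent:
    """A hypothetical event that fires once simulated time reaches fire_at."""
    fire_at: float
    name: str = field(compare=False)
    effect: Callable[[dict], None] = field(compare=False)


class ToyEnvironment:
    """Illustrative only; not the ARE implementation."""

    def __init__(self) -> None:
        self.now = 0.0
        self.state = {"inbox": []}
        self._timed = []      # min-heap of TimedEvent, ordered by fire_at
        self._on_action = {}  # action name -> list of (event name, effect)
        self.log = []         # (time, event name) for every event that fired

    def schedule(self, fire_at: float, name: str, effect) -> None:
        """Register an event triggered by the passage of simulated time."""
        heapq.heappush(self._timed, TimedEvent(fire_at, name, effect))

    def on_action(self, action: str, name: str, effect) -> None:
        """Register an event triggered by a specific agent action."""
        self._on_action.setdefault(action, []).append((name, effect))

    def advance(self, dt: float) -> None:
        """Move the clock forward and fire every timed event that is now due."""
        self.now += dt
        while self._timed and self._timed[0].fire_at <= self.now:
            event = heapq.heappop(self._timed)
            event.effect(self.state)
            self.log.append((event.fire_at, event.name))

    def act(self, action: str) -> None:
        """Apply an agent action and fire any events registered for it."""
        for name, effect in self._on_action.get(action, []):
            effect(self.state)
            self.log.append((self.now, name))


if __name__ == "__main__":
    env = ToyEnvironment()
    env.schedule(5.0, "new_email", lambda s: s["inbox"].append("Meeting moved to 3pm"))
    env.on_action("send_reply", "auto_ack", lambda s: s["inbox"].append("Thanks, received"))
    env.advance(3.0)       # nothing is due yet
    env.advance(3.0)       # the timed email arrives
    env.act("send_reply")  # the agent's action triggers a follow-up event
    print(env.state["inbox"], env.log)
```

Keeping timed events in a heap means the environment only has to inspect the earliest pending event each time the clock moves, which is one common way to build this kind of simulation.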

Agent-Environment Interaction:

Agents interact with environments using the ReAct (Reasoning + Acting) framework, perceiving the environment state, reasoning, and taking actions to accomplish tasks. Agent actions can alter the environment state, which in turn triggers new events.
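A ReAct-style loop alternates between a reasoning step and an action whose result becomes the next observation. The sketch below is a minimal illustration under stated assumptions: `react_loop`, `scripted_policy`, and the `read_calendar` tool are hypothetical names, and a hand-written policy stands in for the language model that would normally produce the thoughts and actions.

```python
def react_loop(policy, tools, task, max_steps=5):
    """Run a think -> act -> observe loop until the policy chooses 'finish'.

    policy(observation, trace) returns (thought, action_name, argument).
    tools maps an action name to a callable that returns an observation.
    """
    trace = []
    observation = task
    for _ in range(max_steps):
        thought, action, arg = policy(observation, trace)
        if action == "finish":
            trace.append((thought, action, arg, None))
            return arg, trace
        observation = tools[action](arg)  # acting yields a new observation
        trace.append((thought, action, arg, observation))
    return None, trace  # step budget exhausted without an answer


def scripted_policy(observation, trace):
    """Stand-in for a model: look up the calendar once, then answer."""
    if not trace:
        return ("I need the meeting time from the calendar.", "read_calendar", "standup")
    last_observation = trace[-1][3]
    return ("I have the time, so I can answer.", "finish", last_observation)


# Hypothetical tool backed by a fixed dictionary.
TOOLS = {"read_calendar": lambda title: {"standup": "09:30"}.get(title, "unknown")}

if __name__ == "__main__":
    answer, trace = react_loop(scripted_policy, TOOLS, "When is standup?")
    print(answer)
    for step in trace:
        print(step)
```

The `max_steps` cap is a design choice in this sketch: it keeps a policy that never finishes from looping forever, and the returned trace makes each reasoning and action step inspectable afterwards.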

Multi-Step Tasks:

Tasks are designed to require multi-step reasoning and decision-making, often involving ten or more steps, to mimic complex real-world workflows. Agents must maintain consistent reasoning and adaptability over extended time horizons.

Application Programming Interfaces (APIs):

Provides APIs for a range of applications (e.g., email, calendar) that allow agents to interact with them. Each application has specific data structures and behavior models.
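As a rough picture of what "an application with its own data structures and behavior" might look like, here is a toy calendar. The class and method names (`ToyCalendarApp`, `add_event`, `list_events`, `tools`) are assumptions made for this example and are not taken from ARE.

```python
from dataclasses import dataclass, field


@dataclass
class CalendarEvent:
    """App-specific data structure: a titled block of whole hours."""
    title: str
    start_hour: int
    end_hour: int


@dataclass
class ToyCalendarApp:
    """Hypothetical application whose methods an agent can call as tools."""
    events: list = field(default_factory=list)

    def add_event(self, title: str, start_hour: int, end_hour: int) -> str:
        if not 0 <= start_hour < end_hour <= 24:
            raise ValueError("hours must satisfy 0 <= start < end <= 24")
        # App-specific behavior: overlapping events are rejected.
        for existing in self.events:
            if start_hour < existing.end_hour and existing.start_hour < end_hour:
                return f"conflict with '{existing.title}'"
        self.events.append(CalendarEvent(title, start_hour, end_hour))
        return "ok"

    def list_events(self) -> list:
        """Return event titles ordered by start time."""
        return [e.title for e in sorted(self.events, key=lambda e: e.start_hour)]

    def tools(self) -> dict:
        """Expose the app's API as a name -> callable mapping for an agent."""
        return {
            "calendar.add_event": self.add_event,
            "calendar.list_events": self.list_events,
        }


if __name__ == "__main__":
    calendar = ToyCalendarApp()
    api = calendar.tools()
    print(api["calendar.add_event"]("standup", 9, 10))
    print(api["calendar.add_event"]("review", 9, 11))
    print(api["calendar.list_events"]())
```

Returning a conflict message instead of raising lets an agent read the result as an observation and decide how to recover, for example by proposing a different time slot.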

Scenarios and Validation:

Scenarios combine applications, events, and validation logic into complete tasks. Validation logic is used to evaluate whether agent behaviors align with expected goals.
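The way a scenario bundles application state, events, and validation can be sketched as follows. All names here (`ToyScenario`, `run_scenario`, and the two example agents) are hypothetical, and time is simplified to integer steps.

```python
import copy
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyScenario:
    """Illustrative bundle of task, starting state, events, and a validator."""
    task: str
    initial_state: dict
    events: list                     # (step, effect) pairs applied at that step
    validate: Callable[[dict], bool]


def run_scenario(scenario, agent_step, steps=3):
    """Apply scheduled events, let the agent act each step, then validate."""
    state = copy.deepcopy(scenario.initial_state)  # keep the scenario reusable
    for step in range(steps):
        for at, effect in scenario.events:
            if at == step:
                effect(state)
        agent_step(step, state)
    return scenario.validate(state)


def adaptive_agent(step, state):
    """Re-reads the inbox every step and replies to anyone still waiting."""
    for sender in state["inbox"]:
        if sender not in state["replied"]:
            state["replied"].append(sender)


def one_shot_agent(step, state):
    """Acts only once, so it misses mail that arrives later."""
    if step == 0:
        state["replied"].extend(state["inbox"])


SCENARIO = ToyScenario(
    task="Reply to every sender in the inbox",
    initial_state={"inbox": ["alice"], "replied": []},
    events=[(1, lambda s: s["inbox"].append("bob"))],  # new mail at step 1
    validate=lambda s: set(s["inbox"]) == set(s["replied"]),
)

if __name__ == "__main__":
    print(run_scenario(SCENARIO, adaptive_agent))
    print(run_scenario(SCENARIO, one_shot_agent))
```

In this sketch the validator inspects only the final state. A validator could also check the sequence of actions, but that is beyond what this example attempts.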

Benchmarking and Evaluation:

Through benchmarks like Gaia2, the system systematically evaluates agent performance across multiple scenarios. Benchmarking supports model comparison, detailed evaluation reports, and leaderboards.
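Running several models over the same scenarios in parallel and ranking them by pass rate might look like the sketch below. It assumes each model is a plain callable that returns whether it solved a scenario. The `evaluate` function and the toy models are hypothetical and do not reflect ARE's command-line tools or report format.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def evaluate(models, scenarios, max_workers=4):
    """Run every (model, scenario) pair in parallel and rank by pass rate.

    models maps a model name to a callable(scenario) -> bool.
    Returns a list of (model name, pass rate) pairs, best first.
    """
    jobs = [(name, scenario) for name in models for scenario in scenarios]

    def run(job):
        name, scenario = job
        return name, bool(models[name](scenario))

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(run, jobs))

    passed = defaultdict(int)
    for name, ok in results:
        passed[name] += ok  # True counts as 1, False as 0

    rates = {name: passed[name] / len(scenarios) for name in models}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Toy setup: a scenario is just a difficulty level, and a model "solves"
# anything at or below its skill threshold.
SCENARIOS = [1, 2, 3, 4]
MODELS = {
    "hasty": lambda difficulty: difficulty <= 1,
    "careful": lambda difficulty: difficulty <= 3,
}

if __name__ == "__main__":
    for name, rate in evaluate(MODELS, SCENARIOS):
        print(f"{name}: {rate:.0%}")
```

Threads suit this sketch because real model calls are usually network-bound. A CPU-heavy simulation might use processes instead.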

Project Resources

Official Website: https://facebookresearch.github.io/meta-agents-research-environments/

GitHub Repository: https://github.com/facebookresearch/meta-agents-research-environments

Application Scenarios of Meta ARE

AI Agent Capability Evaluation:

Using the 800-scenario Gaia2 benchmark, researchers can comprehensively evaluate AI Agents’ reasoning, decision-making, and adaptability across multiple complex domains.

Multi-Step Task Simulation:

Simulates real-world multi-step workflows such as project management and event response to test agents’ long-term reasoning and task completion capabilities.

Human-AI Interaction Research:

Explores how agents interact with realistic applications such as email and calendars to develop more natural and efficient human-AI collaboration patterns.

Dynamic Environment Adaptation Testing:

Evaluates how well agents adapt to new information and changing conditions in time-evolving environments, helping researchers measure and improve robustness under uncertainty.

Research and Development Support:

Provides researchers with systematic evaluation tools, supporting parallel execution and multi-model comparison to accelerate AI Agent research and development.
