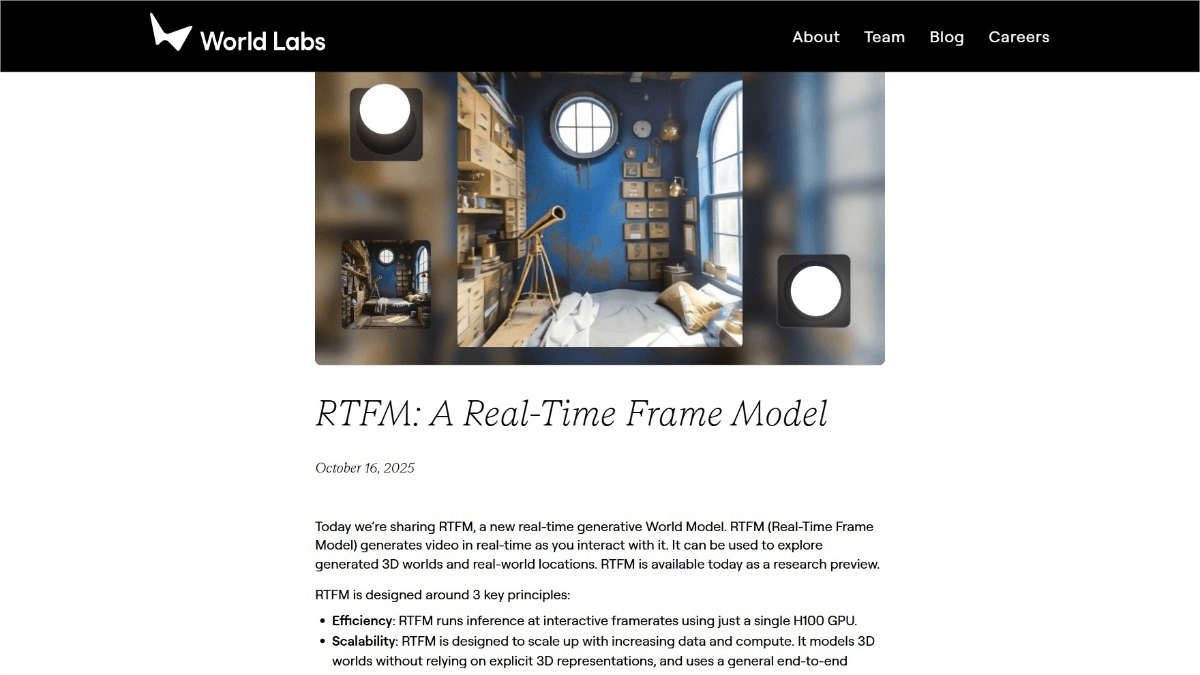

RTFM – a real-time generative world model developed by Fei-Fei Li’s team

What is RTFM?

RTFM (Real-Time Frame Model) is a real-time generative world model developed by Fei-Fei Li’s team at World Labs. It runs on a single H100 GPU and renders 3D scenes in real time, and the worlds it generates stay persistent as users interact with them. Trained on large-scale video data, RTFM learns how lighting, materials, and spatial relationships appear in images, turning the complex physics of rendering into a data-driven perception problem. Each generated frame is tagged with spatial coordinates, and through a technique called “context shifting” the model conditions only on nearby frames when generating a new one, which keeps generation efficient while the world remains persistent. RTFM points toward a new technical path for world models: virtual worlds that are real-time, persistent, and interactive.

Key Features of RTFM

- Real-Time 3D Scene Rendering: Renders new views of a 3D scene in real time from a single image or a few input views, reproducing visual effects such as reflections, shadows, and glossy surfaces.

- Persistent Interaction: Users can explore the generated world indefinitely; it remains stable and does not disappear or reset when parts of it move out of view.

- High Efficiency: Runs at interactive frame rates on a single H100 GPU, making it practical on today's hardware.

- Versatile Scene Generation: Handles a wide range of environments, from natural landscapes to complex indoor scenes.

Technical Principles of RTFM

- End-to-End Learning: RTFM is an autoregressive diffusion transformer trained end-to-end on large-scale video data. It generates frames for new viewpoints directly from input frames, without building an explicit 3D representation.

- Spatial Memory and Context Shifting: Each frame is assigned a pose (position and orientation) in 3D space, and the collection of posed frames forms a spatial memory. When generating a new frame, RTFM retrieves only nearby frames as context, which improves efficiency while keeping the world persistent.

- Data-Driven Rendering: By learning from extensive video data, RTFM captures how light, materials, and space interact, turning traditional physics-based rendering into a data-driven perception problem.

- Scalability: RTFM’s architecture is designed to improve as data and compute grow, laying the foundation for larger and more capable future models.
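The spatial-memory and nearby-frame retrieval idea can be illustrated with a minimal Python sketch. It is not World Labs' implementation: the `Pose` type (position plus a single yaw angle), the `pose_distance` metric, the context size `k`, and the `generate_frame` stand-in for the diffusion transformer are all hypothetical choices made for illustration only.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Pose:
    """Hypothetical camera pose: 3D position plus a single yaw angle in radians."""
    x: float
    y: float
    z: float
    yaw: float = 0.0


def pose_distance(a: Pose, b: Pose, angle_weight: float = 1.0) -> float:
    """Assumed metric: Euclidean position distance plus weighted yaw difference."""
    d_pos = math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
    # Wrap the yaw difference into [0, pi] so that 359 and 1 degrees count as close.
    d_yaw = abs((a.yaw - b.yaw + math.pi) % (2 * math.pi) - math.pi)
    return d_pos + angle_weight * d_yaw


@dataclass
class SpatialMemory:
    """Every generated frame, stored together with the pose it was generated at."""
    entries: list = field(default_factory=list)  # list of (Pose, frame) pairs

    def add(self, pose: Pose, frame: str) -> None:
        self.entries.append((pose, frame))

    def nearest(self, query: Pose, k: int) -> list:
        """Return up to k stored (pose, frame) pairs closest to the query pose."""
        return sorted(self.entries, key=lambda e: pose_distance(e[0], query))[:k]


def generate_frame(context: list, target: Pose) -> str:
    """Hypothetical stand-in for the learned model. RTFM would run its
    autoregressive diffusion transformer on the context frames here; this stub
    only returns a label naming the target position."""
    return f"view@{target.x:g},{target.y:g},{target.z:g}"


def render_trajectory(memory: SpatialMemory, trajectory: list, k: int = 2) -> list:
    """Generate one frame per pose, conditioning only on the k nearest stored
    frames, and write each new frame back so later poses can reuse it."""
    outputs = []
    for pose in trajectory:
        context = memory.nearest(pose, k)
        frame = generate_frame(context, pose)
        memory.add(pose, frame)
        outputs.append((frame, [f for _, f in context]))
    return outputs


if __name__ == "__main__":
    memory = SpatialMemory()
    memory.add(Pose(0.0, 0.0, 0.0), "input")  # the single input image
    path = [Pose(1.0, 0.0, 0.0), Pose(2.0, 0.0, 0.0), Pose(0.0, 0.0, 0.0)]
    for frame, used in render_trajectory(memory, path, k=1):
        print(frame, "<-", used)
```

In this toy run, the third frame, generated back at the starting pose, is conditioned on `input` rather than on the most recently generated frame: context is chosen by location, not recency, which is what lets a revisited area look the same as before. A real system would likely replace the full sort with a spatial index.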

Project Links

- Official Website: https://www.worldlabs.ai/blog/rtfm

- Online Demo: https://rtfm.worldlabs.ai/

Application Scenarios

- Game Development: Lets developers quickly create rich, varied game worlds for immersive player experiences.

- Virtual Reality (VR) and Augmented Reality (AR): Generates virtual environments or AR objects in real time, making interaction with virtual content more natural and fluid.

- Film Production: Rapidly generates high-quality virtual scenes and visual effects to assist with set design and compositing, saving time and cost.

- Architectural Design and Visualization: Lets designers instantly generate 3D views of architectural models to better present and communicate design concepts.

- Education: Creates virtual labs or historical scenes, giving students immersive, interactive learning experiences.
