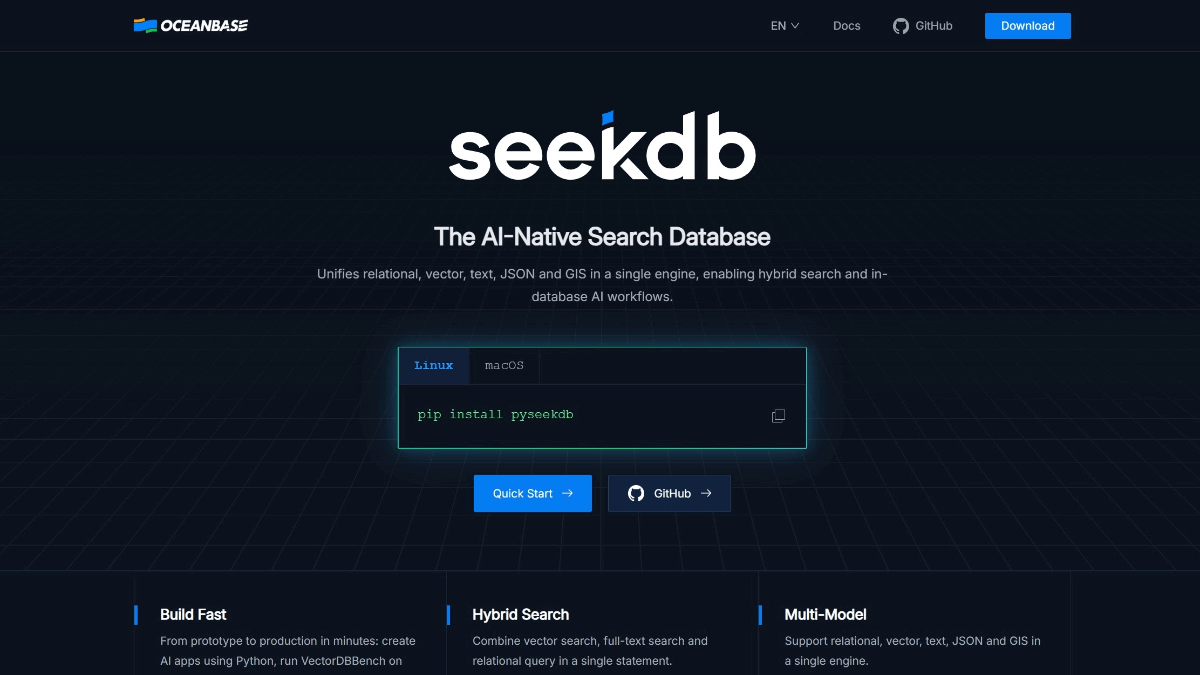

Seekdb – OceanBase’s First Open-Source AI-Native Hybrid Search Database

What is Seekdb?

Seekdb is OceanBase’s open-source, AI-native database, designed to address the challenges of integrating multimodal data and processing it in real time during AI application development. It supports unified queries that combine vector search, full-text search, and structured data filtering. A single SQL statement can express a cross-modal query such as “transactions over 50,000 in the past 7 days, from an abnormal location, with behavior similar to historical fraud cases,” with no calls across separate systems.

Seekdb can run on as little as 1 CPU core and 2 GB of memory, installs in one step with pip, and can either be embedded in intelligent agents and local applications or run in service mode. Developers can build AI applications with as few as three lines of code. Because Seekdb is built on OceanBase’s transaction engine, indexes are updated in real time as data is written, which keeps data consistent and fresh in sensitive scenarios such as finance and government.

Seekdb is open-sourced under the Apache 2.0 license, is compatible with more than 30 mainstream AI frameworks, including LangChain and Hugging Face, and provides SQL and Python SDKs to lower the barrier to entry.
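The article does not show Seekdb's own SQL syntax for vector operations, so the sketch below uses Python's built-in `sqlite3` module as a stand-in to illustrate the idea behind the fraud example: one statement that mixes a scalar filter, a time window, a location flag, and vector similarity. The table layout, the `cosine_sim` function, the 0.9 similarity threshold, and the sample rows are all hypothetical. A real Seekdb deployment would rely on its own vector index rather than a Python function evaluated row by row.

```python
import json
import math
import sqlite3
from datetime import datetime, timedelta


def cosine_similarity(a_json: str, b_json: str) -> float:
    """Cosine similarity between two vectors stored as JSON arrays."""
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


conn = sqlite3.connect(":memory:")
# Register the similarity function so SQL statements can call it.
conn.create_function("cosine_sim", 2, cosine_similarity)

conn.execute(
    """CREATE TABLE transactions (
        id INTEGER PRIMARY KEY,
        amount REAL,
        ts TEXT,
        location_abnormal INTEGER,
        behavior TEXT  -- hypothetical behavior embedding stored as a JSON array
    )"""
)

now = datetime(2025, 1, 31)
rows = [
    (1, 72000, (now - timedelta(days=2)).isoformat(), 1, json.dumps([0.9, 0.1, 0.0])),
    (2, 80000, (now - timedelta(days=3)).isoformat(), 1, json.dumps([0.0, 0.2, 0.9])),
    (3, 65000, (now - timedelta(days=20)).isoformat(), 1, json.dumps([0.9, 0.1, 0.0])),
    (4, 1200, (now - timedelta(days=1)).isoformat(), 1, json.dumps([0.9, 0.1, 0.0])),
]
conn.executemany("INSERT INTO transactions VALUES (?, ?, ?, ?, ?)", rows)

fraud_pattern = json.dumps([1.0, 0.0, 0.0])  # embedding of a known fraud case
since = (now - timedelta(days=7)).isoformat()

# One statement: amount filter + 7-day window + location flag + vector similarity.
suspicious = conn.execute(
    """SELECT id, ROUND(cosine_sim(behavior, ?), 3) AS sim
       FROM transactions
       WHERE amount > 50000
         AND ts >= ?
         AND location_abnormal = 1
         AND cosine_sim(behavior, ?) > 0.9
       ORDER BY sim DESC""",
    (fraud_pattern, since, fraud_pattern),
).fetchall()
print(suspicious)
```

Only transaction 1 passes every condition: transaction 2 has dissimilar behavior, transaction 3 falls outside the 7-day window, and transaction 4 is below the amount threshold.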

Main Features of Seekdb

- Hybrid Search Capabilities: Supports unified retrieval across vector, full-text, scalar, and geospatial data for efficient multimodal queries.
- Integration of AI Inference and Data Processing: Runs embedding generation, re-ranking, and large language model (LLM) inference directly within the database to improve processing efficiency.
- Lightweight Deployment and Fast Startup: Runs on as little as 1 CPU core and 2 GB of memory, installs in one step with pip, and starts in seconds.
- Open Source and Broad Compatibility: Licensed under Apache 2.0, fully compatible with the MySQL ecosystem, and supports 30+ AI frameworks, including Hugging Face and LangChain.
- Low Latency and High Performance: Delivers millisecond-level responses for multimodal retrieval at billion scale, meeting real-time requirements.
- Multiple Running Modes: Supports both embedded and client/server deployment to suit different development needs.
- Simplified Development: Developers can build knowledge bases, intelligent agents, and other AI applications with as few as three lines of code.
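To make the hybrid search capability listed above more concrete, here is a toy, brute-force sketch in plain Python. It applies a scalar filter, a geospatial radius filter, and a keyword filter, then ranks the surviving records by vector similarity. This is not Seekdb's API or execution strategy: a real database engine would typically use indexes instead of scanning every record. The `Doc` fields, the `hybrid_search` helper, and the sample data are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    id: str
    text: str               # full-text field
    price: float            # scalar field
    lat: float              # geospatial fields
    lon: float
    embedding: list[float]  # vector field


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometers."""
    r = 6371.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def hybrid_search(docs, query_vec, keyword, max_price, center, radius_km, top_k=3):
    results = []
    for d in docs:
        if d.price > max_price:                              # scalar filter
            continue
        if haversine_km(d.lat, d.lon, *center) > radius_km:  # geospatial filter
            continue
        if keyword.lower() not in d.text.lower():            # naive full-text filter
            continue
        results.append((d.id, round(cosine(query_vec, d.embedding), 3)))  # vector score
    results.sort(key=lambda r: r[1], reverse=True)
    return results[:top_k]


docs = [
    Doc("a", "Quiet coffee shop with Wi-Fi", 30, 30.26, 120.16, [0.9, 0.1]),
    Doc("b", "Coffee roastery and bakery", 45, 30.28, 120.14, [0.6, 0.8]),
    Doc("c", "Upscale coffee bar", 120, 30.27, 120.15, [1.0, 0.0]),  # too expensive
    Doc("d", "Coffee house", 25, 31.23, 121.47, [1.0, 0.0]),         # too far away
    Doc("e", "Tea house", 20, 30.27, 120.15, [1.0, 0.0]),            # no keyword match
]
print(hybrid_search(docs, [1.0, 0.0], "coffee", 100, (30.27, 120.15), 5))
```

Filtering on cheap predicates first and computing vector scores only for the survivors mirrors the general idea of combining modalities in one query, though a production engine chooses the evaluation order with its own optimizer.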

Technical Principles of Seekdb

- AI-Native Architecture: Seekdb integrates AI inference deeply into data processing, so embedding, re-ranking, and LLM inference run directly inside the database, improving overall performance.
- Hybrid Search Engine: Combines vector, full-text, scalar, and geospatial retrieval. A multi-stage search mechanism delivers high-precision results at low latency and supports complex multimodal queries.
- Lightweight Deployment and Quick Startup: Designed to be lightweight, requiring only 1 CPU core and 2 GB of memory; installs with pip and starts in seconds.
- Open Source and Compatibility: Licensed under Apache 2.0, fully compatible with the MySQL ecosystem, and supports 30+ AI frameworks, including Hugging Face and LangChain.
- Low Latency and High Performance: Optimized indexing and retrieval algorithms enable millisecond-level responses on billion-scale multimodal datasets.
- Flexible Running Modes: Supports both embedded and client/server modes.
- Deep AI Framework Integration: Performs vector embedding and model inference directly within the database, minimizing data transfer and processing delays.
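The article does not specify how Seekdb's multi-stage search combines its retrievers. As an illustration of the general pattern only, the sketch below takes a vector ranking and a full-text ranking as candidate lists, merges them with Reciprocal Rank Fusion (RRF, a widely used fusion technique swapped in here as an assumption), and then re-ranks only the short fused list with a costlier scorer. The hit lists, the `k=60` constant, and the re-ranker scores are placeholders.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc IDs: each doc scores sum(1 / (k + rank)), ranks from 1."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


def multi_stage_search(vector_hits, fulltext_hits, rerank_score, fuse_top=4, final_top=2):
    # Stage 1 (recall) is assumed done: each retriever returned a ranked ID list.
    # Stage 2: cheaply fuse the candidate lists and keep only the best few.
    fused = reciprocal_rank_fusion([vector_hits, fulltext_hits])[:fuse_top]
    # Stage 3: run the expensive re-ranker only on the short fused list.
    return sorted(fused, key=rerank_score, reverse=True)[:final_top]


vector_hits = ["d1", "d2", "d3", "d4"]   # hypothetical vector-search ranking
fulltext_hits = ["d3", "d1", "d5"]       # hypothetical full-text ranking
# Hypothetical scores from a slower, more precise model such as a cross-encoder.
rerank_scores = {"d1": 0.42, "d2": 0.91, "d3": 0.77, "d4": 0.99, "d5": 0.10}

print(reciprocal_rank_fusion([vector_hits, fulltext_hits]))
print(multi_stage_search(vector_hits, fulltext_hits, rerank_scores.get))
```

Note that `d4` never reaches the re-ranker even though it would score highest there: it ranked last after fusion and was cut. That trade-off, limiting the expensive stage to a small candidate set, is what keeps multi-stage pipelines fast.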

Project Links

- Official website: https://www.oceanbase.ai/
- GitHub repository: https://github.com/oceanbase/seekdb

Use Cases of Seekdb

- Semantic Search: Retrieves text by meaning using hybrid search, enabling precise semantic matching and improving search efficiency and user experience.
- Knowledge Base Q&A: Powers intelligent knowledge bases that answer user queries quickly and accurately; well suited to internal knowledge management and support.
- Recommendation Systems: Uses high-performance multimodal data processing to deliver accurate, real-time personalized recommendations.
- In-Database Model Inference: Runs AI model inference directly inside the database, reducing data transfer and processing latency.
- Multimodal Data Retrieval: Handles diverse data types (text, vectors, geospatial data) for unified retrieval and analysis.
- Intelligent Customer Service and Virtual Assistants: Enables fast, accurate answers for AI-powered customer service and virtual assistants, improving service efficiency.
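Knowledge-base Q&A and customer-service assistants commonly follow a retrieval-augmented generation (RAG) pattern: embed documents, retrieve the chunks most similar to a question, and hand them to an LLM as context. The sketch below shows that flow in plain Python, with a toy bag-of-words "embedding" standing in for a real embedding model. It does not use Seekdb's SDK; the sample documents and the `embed` and `build_prompt` helpers are hypothetical, and the final LLM call is left out. Per this article, Seekdb would run the embedding and retrieval steps inside the database.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': lowercase term counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


knowledge_base = [
    "Employees can request VPN access through the IT service portal.",
    "Expense reports must be submitted within 30 days of purchase.",
    "The office Wi-Fi password is rotated every quarter by IT.",
]
# Index each chunk once, alongside its embedding.
index = [(chunk, embed(chunk)) for chunk in knowledge_base]


def build_prompt(question, top_k=1):
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    context = "\n".join(chunk for chunk, _ in ranked[:top_k])
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("How do I get VPN access?")
print(prompt)
# The prompt would then be sent to an LLM of your choice (not shown).
```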
