ChatAnyone: A Real-Time Stylized Portrait Video Generation Framework from Alibaba Tongyi Lab

What is ChatAnyone?

ChatAnyone is a real-time stylized portrait video generation framework from Alibaba's Tongyi Lab. Driven by audio input, it generates portrait videos with rich facial expressions and upper-body movements. It combines an efficient hierarchical motion diffusion model with a hybrid control fusion generation model to produce high-fidelity, natural-looking video. It supports real-time interaction and suits many scenarios, including virtual hosting, video conferencing, content creation, education, customer service, marketing, social entertainment, and healthcare. ChatAnyone also offers stylization control, so expression styles can be adjusted for personalized animation.

The main functions of ChatAnyone

- Audio-driven Portrait Video Generation: From audio input alone, it generates portrait videos with rich expressions and upper-body movements, producing high-fidelity animation that scales from a "talking head" to full upper-body interaction. It supports diverse facial expressions and style control.

- High Fidelity and Naturalness: The generated portrait video features rich expressions and natural upper-body movements.

- Real-time Performance: Supports real-time interaction, suitable for application scenarios such as video chat.

- Stylized Control: Lets users adjust the expression style as needed to produce personalized animation.

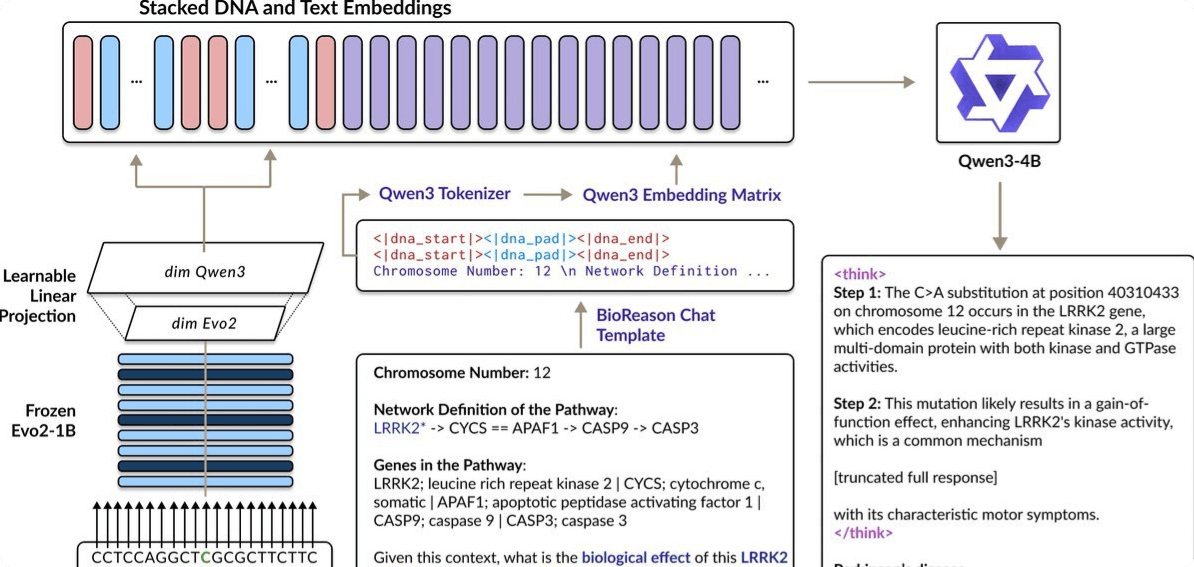

The Technical Principles of ChatAnyone

- Efficient Hierarchical Motion Diffusion Model: Takes audio signals as input and outputs control signals for facial and body movements, considering both explicit and implicit motion signals. Generates diverse facial expressions and achieves synchronization between head and body movements. Supports different intensities of expression changes and the transfer of stylized expressions from reference videos.

- Hybrid Control Fusion Generation Model: Combines explicit landmarks and implicit offsets to generate realistic facial expressions. Injects explicit hand control signals to produce more accurate and lifelike hand movements. Enhances facial realism through a facial optimization module, ensuring that the generated portrait videos are highly expressive and realistic.

- Scalable Real-Time Generation Framework: Supports generation ranging from head-only animation to upper-body animation with gestures. On an NVIDIA RTX 4090 GPU, it can generate upper-body portrait videos in real time at resolutions up to 512×768 and 30 fps.
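What "real time at 512×768 and 30 fps" implies can be made concrete with a little arithmetic. The sketch below is illustrative only and is not ChatAnyone code: it assumes the system can be viewed as two stages (a motion stage mapping audio to control signals, then a rendering stage producing frames), and the stage names and per-frame latencies are hypothetical placeholders, not measurements. It computes the per-frame time budget and pixel throughput, then compares running the stages back-to-back against overlapping them on consecutive frames, where throughput is limited by the slowest stage.

```python
from typing import Dict

TARGET_FPS = 30            # frame rate reported for an RTX 4090
WIDTH, HEIGHT = 512, 768   # maximum reported upper-body resolution


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame if output must keep up in real time."""
    return 1000.0 / fps


def pixel_throughput(width: int, height: int, fps: float) -> float:
    """Pixels the generator must emit per second at this resolution and rate."""
    return width * height * fps


def sequential_fps(stage_ms: Dict[str, float]) -> float:
    """Achievable FPS when every stage runs back-to-back for each frame."""
    return 1000.0 / sum(stage_ms.values())


def pipelined_fps(stage_ms: Dict[str, float]) -> float:
    """Achievable FPS when stages overlap on consecutive frames (slowest stage limits)."""
    return 1000.0 / max(stage_ms.values())


def is_realtime(achieved_fps: float, target_fps: float = TARGET_FPS) -> bool:
    """True if the achieved rate meets or exceeds the target rate."""
    return achieved_fps >= target_fps


if __name__ == "__main__":
    # Hypothetical per-frame latencies; these are NOT measurements of ChatAnyone.
    stages = {
        "motion_stage": 12.0,   # audio -> facial/body control signals (hypothetical)
        "render_stage": 25.0,   # control signals -> RGB frame (hypothetical)
    }
    print(f"budget per frame: {frame_budget_ms(TARGET_FPS):.2f} ms")
    print(f"pixels per second: {pixel_throughput(WIDTH, HEIGHT, TARGET_FPS):,.0f}")
    seq = sequential_fps(stages)
    pipe = pipelined_fps(stages)
    print(f"sequential: {seq:.1f} fps, real-time={is_realtime(seq)}")
    print(f"pipelined:  {pipe:.1f} fps, real-time={is_realtime(pipe)}")
```

With these made-up numbers, the 30 fps target leaves about 33.33 ms per frame (roughly 11.8 million pixels per second at 512×768). Running the stages sequentially (37 ms per frame) falls short at about 27 fps, while overlapping them reaches 40 fps. This is why pipelining stages across frames is a common design choice for streaming generation, although end-to-end latency per frame stays at the sum of the stages.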

Project Links for ChatAnyone

- Project official website: https://humanaigc.github.io/chat-anyone/

- Github repository: https://github.com/HumanAIGC/chat-anyone

- arXiv technical paper: https://arxiv.org/pdf/2503.21144

Application scenarios of ChatAnyone

- Virtual Hosts and Video Conferences: Virtual avatars for news reporting, live-stream e-commerce, and video conferences.

- Content Creation and Entertainment: Generate stylized animated characters, virtual concerts, AI podcasts, etc.

- Education and Training: Generate virtual teacher avatars and virtual characters for training simulations.

- Customer Service: Generate virtual customer service avatars to provide vivid answers and interactions.

- Marketing and Advertising: Generate virtual spokesperson avatars and highly interactive advertising content.
