MoCha – An end-to-end conversational character video generation model jointly introduced by Meta and the University of Waterloo

What is MoCha?

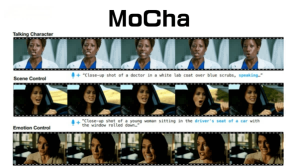

MoCha AI is an end-to-end conversational character video generation model jointly developed by Meta and the University of Waterloo. It can generate complete character animations with synchronized speech and natural movements based on text or voice input. MoCha employs a speech-video window attention mechanism, addressing issues of audio resolution mismatch and lip misalignment during video compression. It supports multi-character turn-based conversations and can generate character animations with emotional expressions and full-body movements.

The main functions of MoCha

- Speech-driven Character Animation Generation: Users input speech, and MoCha can generate character mouth shapes, facial expressions, gestures, and body movements synchronized with the speech content.

- Text-driven Character Animation Generation: Users only need to input a text script. MoCha will automatically synthesize speech and then drive the character to perform complete lip-syncing and action expressions.

- Full-body Animation Generation: Unlike traditional models that only generate facial expressions or mouth shapes, MoCha can generate natural full-body movements, including lip-syncing, gestures, and interactions among multiple characters.

- Multi-character Turn-based Dialogue Generation: MoCha provides structured prompt templates and character tags, enabling automatic identification of dialogue turns to present natural back-and-forth conversations between characters. In multi-character scenarios, users only need to define character information once and can reference these characters in different scenes through simple tags (such as “Character 1”, “Character 2”), eliminating the need for repeated descriptions.

The Technical Principle of MoCha

- Diffusion Transformer (DiT) Architecture:

MoCha is based on the Diffusion Transformer (DiT) architecture, which effectively captures semantic and temporal dynamics by integrating text and speech conditions into the model through cross-attention mechanisms. It can generate realistic and expressive full-body movements while ensuring precise synchronization between character animations and input speech. - Speech-Video Window Attention Mechanism:

To address the challenges of speech-video alignment caused by video compression and parallel generation, MoCha introduces a speech-video window attention mechanism. This mechanism restricts each video token to only attend to temporally adjacent audio tokens, improving lip-sync accuracy and speech-video alignment. By simulating the way human speech operates, the character’s lip movements are precisely matched to the dialogue content. - Joint Training Strategy:

MoCha employs a joint training strategy, training on video data annotated with both speech and text labels simultaneously. This enhances the model’s ability to generalize across diverse character movements and enables fine-grained control over character expressions, actions, interactions, and environments through natural language prompts. - Structured Prompt Templates:

To simplify the text description of multi-character dialogues, MoCha introduces structured prompt templates. By assigning a unique label to each character and using these labels in the text to describe their actions and interactions, redundancy is reduced, and the model’s performance in multi-character scenarios is improved. - Multi-Stage Training Framework:

MoCha adopts a multi-stage training framework that classifies data based on shot types (e.g., close-up, medium shot) and gradually introduces more complex tasks. This ensures the model performs well across tasks of varying difficulty while improving training efficiency.

The project address of MoCha

- Project official website: https://congwei1230.github.io/MoCha/

- arXiv technical paper: https://arxiv.org/pdf/2503.23307

Application scenarios of MoCha

- Virtual Host: MoCha can automatically generate daily vlogs, character Q&A, and other content. Through voice or text input, it generates synchronized character lip movements, facial expressions, gestures, and body movements with the voice content, making the virtual host more vivid and natural.

- Animation and Film Creation: MoCha supports AI automatic voice-over and automatic animation generation, which can reduce the production cost of animation and film creation. It can generate full-body animations, making character movements more natural and closer to the performance of movie-level digital humans.

- Educational Content Creation: MoCha can serve as an AI teacher for lecturing or interacting. Through text-driven methods, it generates character animations that match the teaching content, enhancing the fun and appeal of educational materials.

- Digital Customer Service: MoCha can be used for personified corporate customer service and consultation roles. Through voice or text input, it generates natural and smooth customer service dialogue animations, improving user experience.

© Copyright Notice

The copyright of the article belongs to the author. Please do not reprint without permission.

Related Posts

No comments yet...