Five Major Challenges in Building AI Agents and Their Solutions!

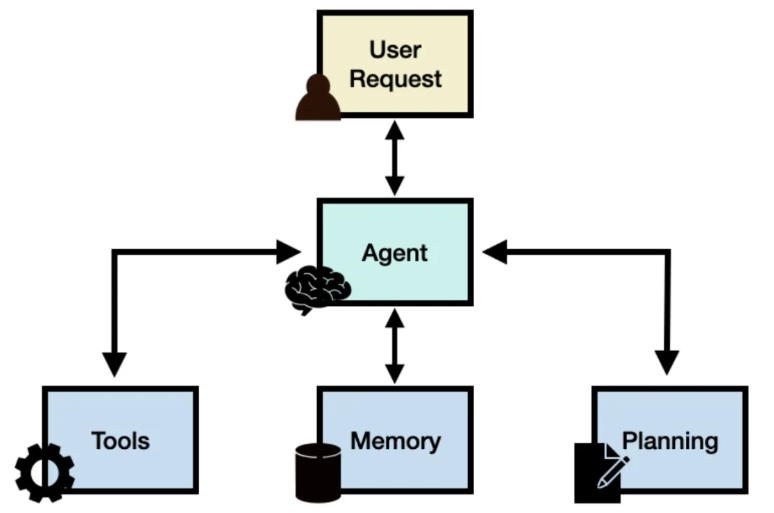

AI Agents are becoming increasingly capable. They can analyze a user's needs, make decisions, and call external tools to automate workflows. In practice, however, they still face many challenges: maintaining context, handling multi-step processes, and integrating external tools can all undermine an Agent's usability.

This article summarizes the five most common challenges encountered when creating AI Agents and provides practical solutions. Whether you’re a beginner just starting out or a seasoned expert, these best practices will help you design more reliable, scalable, and effective AI Agents.

Reasoning and Decision Management

This is one of the most fundamental issues in building an AI Agent: how to keep the Agent's decision-making consistent and reliable. Unlike traditional software that follows explicit rules, an AI Agent must interpret user intent, analyze complex problems, and choose actions, and the underlying model produces its outputs by sampling from probability distributions. Some uncertainty is therefore inevitable, and it makes the Agent's behavior hard to predict across different scenarios, especially in complex business applications.

Solutions

Use structured prompting methods, such as the ReAct (Reasoning and Acting) framework, which interleaves reasoning steps with tool actions, to support systematic reasoning. Combining clear behavioral constraints with verification checkpoints makes outputs considerably more reliable. Frameworks such as LangChain and LlamaIndex can also help define clearer action paths.
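To make the idea concrete without depending on LangChain or LlamaIndex, here is a minimal sketch of a ReAct-style loop with two verification checkpoints: the model's output must match an expected format, and it may only choose from an allowed set of actions. The `Thought:` / `Action:` / `Action Input:` format, the `ALLOWED_ACTIONS` set, and the `parse_step` and `run_react` helpers are hypothetical; `model` stands in for any callable that maps a prompt string to a completion string.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical behavioral constraint: the only actions the agent may take.
ALLOWED_ACTIONS = {"calculator", "finish"}

# Assumed output format for each reasoning step.
STEP_PATTERN = re.compile(
    r"Thought:\s*(?P<thought>.+?)\s*Action:\s*(?P<action>\w+)\s*"
    r"Action Input:\s*(?P<input>.+)",
    re.DOTALL,
)


@dataclass
class Step:
    thought: str
    action: str
    action_input: str


def parse_step(text: str) -> Step:
    """Verification checkpoint: reject malformed output or forbidden actions."""
    match = STEP_PATTERN.search(text)
    if match is None:
        raise ValueError("output does not follow the Thought/Action format")
    action = match.group("action").lower()
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"action {action!r} is not permitted")
    return Step(match.group("thought").strip(), action, match.group("input").strip())


def run_react(
    model: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = parse_step(model(transcript))
        if step.action == "finish":
            return step.action_input
        observation = tools[step.action](step.action_input)
        # Append the full step so the next model call sees the reasoning trace.
        transcript += (
            f"Thought: {step.thought}\nAction: {step.action}\n"
            f"Action Input: {step.action_input}\nObservation: {observation}\n"
        )
    raise RuntimeError("agent did not finish within the step budget")


# Usage with a scripted stand-in for a real model.
scripted = iter([
    "Thought: I need to add the numbers.\nAction: calculator\nAction Input: 2+3",
    "Thought: I have the answer.\nAction: finish\nAction Input: 5",
])
tools = {"calculator": lambda expr: str(sum(int(x) for x in expr.split("+")))}
print(run_react(lambda prompt: next(scripted), tools, "What is 2+3?"))  # prints 5
```

The step budget (`max_steps`) is another simple constraint: it keeps a confused agent from looping indefinitely.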

The temperature parameter of a large language model (LLM) also strongly affects reasoning and creativity. It controls the randomness of the generated text: a lower value (closer to 0) makes the output more deterministic and predictable, while a higher value (around 1 or above, depending on the range the provider allows) increases diversity and creativity. Adjust it to suit the task. In our experience, when the model is invoked for AI Agent tasks, a temperature between 0 and 0.3 works best; the more precise and predictable you need the output to be, the lower the value should be.
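Temperature is typically applied by dividing the model's logits by T before the softmax, so values below 1 sharpen the distribution toward the most likely token and values above 1 flatten it. The sketch below shows that effect on a made-up set of three logits; the numbers are illustrative and do not come from a real model.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after scaling them by 1/temperature."""
    if temperature <= 0:
        # Many APIs treat T = 0 as greedy decoding; the formula itself needs T > 0.
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # illustrative scores for three candidate tokens
for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At T = 0.2 nearly all probability mass lands on the first token, which is why low temperatures make agent behavior more repeatable.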

Multi-step Processes and Context Handling

Complex enterprise workflows usually require Agents to maintain context across multiple steps and interactions. As a process grows more complex, state management, error handling, and context maintenance all become harder. The Agent must not only track its progress through the process but also understand the dependencies between steps and recover gracefully when the process is interrupted or fails.

Solutions

Implement a robust state management system and place clear verification checkpoints throughout the multi-step process. Define error-handling logic for every step, and design fallback mechanisms so that exceptions are handled appropriately when they occur.

For example, a mortgage-application Agent needs a credit report from the past week. If querying each source in turn (the bank, the account-opening bank, and so on) fails, it should widen the search to reports from the past 90 days. If that also fails, the application should be routed to a loan officer for manual processing. If the Agent encounters malformed data at any step, it should immediately send it for manual review.
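The fallback chain in this example can be sketched as two nested loops with explicit exits. Everything here is hypothetical: the source names, the `fetch` callable, the `MalformedReport` exception, and the routing labels stand in for whatever systems a real deployment would use.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


class MalformedReport(Exception):
    """Raised by the (hypothetical) fetch function when report data is abnormal."""


@dataclass
class Outcome:
    status: str  # "ok", "manual_review", or "loan_officer"
    report: Optional[dict] = None
    detail: str = ""


# Hypothetical data sources, queried in this order.
SOURCES = ("bank", "account_opening_bank")
# Search windows in days: the past week first, then the past 90 days.
WINDOWS = (7, 90)

# fetch(source, applicant_id, window_days) returns a report dict or None.
Fetcher = Callable[[str, str, int], Optional[dict]]


def get_credit_report(
    applicant_id: str,
    fetch: Fetcher,
    sources: Sequence[str] = SOURCES,
    windows: Sequence[int] = WINDOWS,
) -> Outcome:
    for window in windows:
        for source in sources:
            try:
                report = fetch(source, applicant_id, window)
            except MalformedReport as exc:
                # Abnormal data at any step goes straight to manual review.
                return Outcome("manual_review", detail=f"{source}: {exc}")
            if report is not None:
                return Outcome("ok", report=report, detail=f"{source}, last {window} days")
    # Every source failed for every window: route to a loan officer.
    return Outcome("loan_officer", detail="no report found in any window")
```

Returning a small `Outcome` record instead of raising keeps the routing decision explicit, so the calling workflow can log it and decide what happens next.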

In addition, record the path each process takes and implement logging to track the progress of multi-step tasks. A structured process design helps Agents maintain context and resume after interruptions.
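One simple way to record the process path and resume after an interruption is to log each step and checkpoint completed steps to disk. The sketch below assumes a linear list of named steps and a JSON checkpoint file; `run_workflow`, the step names, and the file layout are hypothetical choices for illustration.

```python
import json
import logging
from pathlib import Path
from typing import Callable, Dict, List, Tuple

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger("workflow")

# Each step is (name, function); the function receives the shared context
# and returns a dict of new context values.
Step = Tuple[str, Callable[[Dict], Dict]]


def run_workflow(steps: List[Step], checkpoint: Path) -> Dict:
    # Load prior progress so an interrupted run resumes where it stopped.
    if checkpoint.exists():
        state = json.loads(checkpoint.read_text())
    else:
        state = {"completed": [], "context": {}}
    for name, fn in steps:
        if name in state["completed"]:
            log.info("skipping %s (already completed)", name)
            continue
        log.info("starting %s", name)
        try:
            state["context"].update(fn(state["context"]))
        except Exception:
            # The checkpoint already holds every step finished before this one.
            log.exception("step %s failed; progress saved at %s", name, checkpoint)
            raise
        state["completed"].append(name)
        checkpoint.write_text(json.dumps(state))  # persist after every step
        log.info("finished %s", name)
    return state
```

Because the checkpoint is written after each successful step, re-running the same workflow after a failure skips the finished steps and retries only from the point of interruption.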

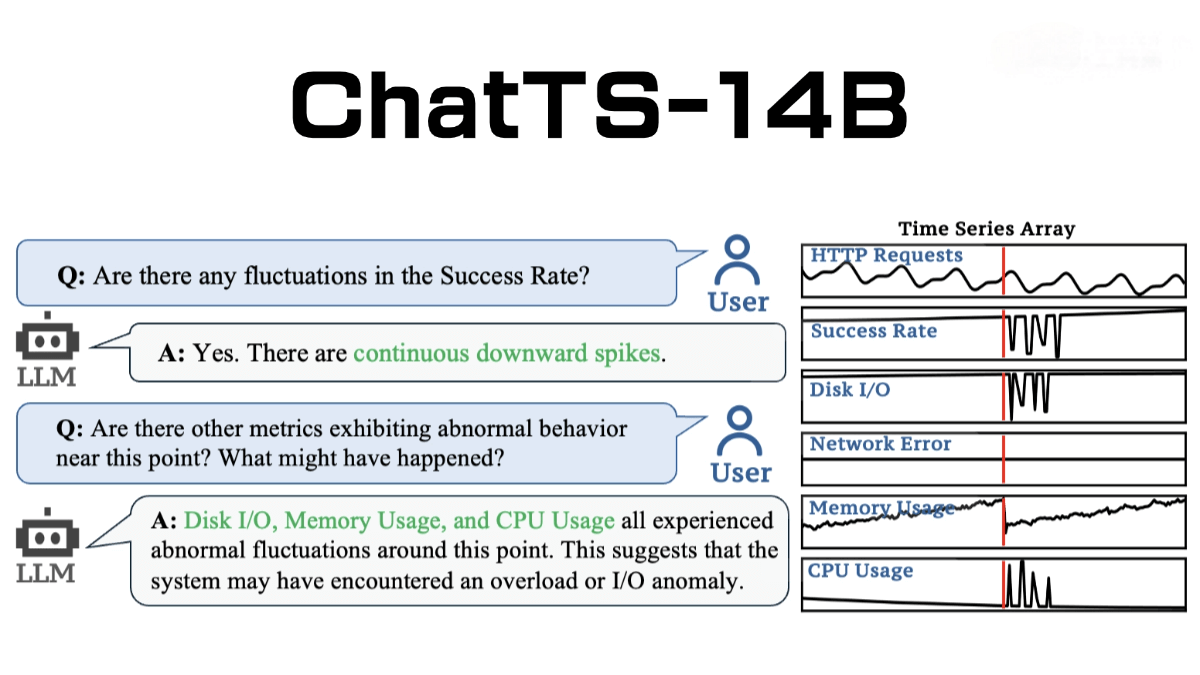

Integrated Tool Management

As AI Agents become more capable, managing the tools they use, and how they use them, becomes increasingly complex. Every new tool introduces additional potential failure points, security risks, and performance overhead. Ensuring that an Agent uses tools appropriately and handles tool failures gracefully is key to system reliability.

Solutions

To address this, create a precise definition for each tool in the Agent's toolkit: specify when the tool should be used, the valid range of each parameter, and clear examples of the expected output. Build validation logic that enforces these specifications, and start with a small set of well-defined tools rather than many loosely defined ones. Monitor regularly to see which tools are most effective and which definitions need improvement.
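One way to make tool definitions both precise and enforceable is to keep the usage guidance, parameter ranges, and an example output in a single specification object, then validate every call against it before execution. The `ToolSpec` and `ParamSpec` structures and the `get_exchange_rate` tool below are hypothetical illustrations, not part of any particular framework, and the example output values are placeholders.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple


@dataclass(frozen=True)
class ParamSpec:
    kind: type
    min_value: Optional[float] = None
    max_value: Optional[float] = None
    choices: Optional[Tuple[Any, ...]] = None


@dataclass(frozen=True)
class ToolSpec:
    name: str
    when_to_use: str
    params: Dict[str, ParamSpec]
    example_output: Dict[str, Any] = field(default_factory=dict)

    def validate(self, args: Dict[str, Any]) -> None:
        """Raise ValueError if args fall outside the declared specification."""
        unknown = set(args) - set(self.params)
        if unknown:
            raise ValueError(f"{self.name}: unknown parameters {sorted(unknown)}")
        for pname, spec in self.params.items():
            if pname not in args:
                raise ValueError(f"{self.name}: missing parameter {pname!r}")
            value = args[pname]
            if not isinstance(value, spec.kind):
                raise ValueError(f"{self.name}: {pname!r} must be {spec.kind.__name__}")
            if spec.min_value is not None and value < spec.min_value:
                raise ValueError(f"{self.name}: {pname!r} below {spec.min_value}")
            if spec.max_value is not None and value > spec.max_value:
                raise ValueError(f"{self.name}: {pname!r} above {spec.max_value}")
            if spec.choices is not None and value not in spec.choices:
                raise ValueError(f"{self.name}: {pname!r} not in {spec.choices}")


# Hypothetical tool definition; example_output values are placeholders.
EXCHANGE_RATE = ToolSpec(
    name="get_exchange_rate",
    when_to_use="Use only when the user asks to convert between supported currencies.",
    params={
        "base": ParamSpec(str, choices=("USD", "EUR", "JPY")),
        "quote": ParamSpec(str, choices=("USD", "EUR", "JPY")),
        "amount": ParamSpec(float, min_value=0.01, max_value=1_000_000),
    },
    example_output={"base": "USD", "quote": "EUR", "rate": 0.9, "converted": 90.0},
)
```

The same object can feed both the prompt (the `when_to_use` text and example output) and the runtime check, so the description the model sees and the rules the system enforces cannot drift apart.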

Hallucination Control and Ensuring Accuracy

When faced with complex problems or incomplete data, AI Agents sometimes generate information that sounds plausible but is entirely wrong, a behavior known as hallucination. This is particularly dangerous in financial institutions and the public sector, where accuracy requirements are extremely high, and the risk becomes especially severe when the Agent's decisions affect business operations, customer interactions, or public services.

Solutions

The remedy is a rigorous verification system that grounds responses in facts and supports them with citations. Use structured formats such as JSON to constrain the shape of the output. Add a manual review step for key decisions and build a comprehensive test suite to catch potential hallucinations. Regularly monitoring and logging the Agent's outputs reveals recurring patterns of inaccuracy so the system can be improved. You can also assign each response a confidence score and automatically trigger human intervention when the score falls below a predefined threshold.
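These checks can be combined into a single gate that parses the Agent's JSON output, confirms that every citation refers to a document that was actually retrieved, and routes low-confidence answers to a human. The field names (`answer`, `citations`, `confidence`), the 0.7 threshold, and the `review_output` function are assumptions for illustration; how the confidence score is computed is left to the surrounding system.

```python
import json
from typing import Any, Dict, Optional, Set

CONFIDENCE_THRESHOLD = 0.7  # hypothetical threshold; tune per use case
# Assumed output schema: field name -> accepted Python type(s) after json.loads.
REQUIRED_FIELDS = {"answer": str, "citations": list, "confidence": (int, float)}


def _route(route: str, reason: str, data: Optional[Dict[str, Any]]) -> Dict[str, Any]:
    return {"route": route, "reason": reason, "data": data}


def review_output(raw: str, retrieved_ids: Set[str]) -> Dict[str, Any]:
    """Decide whether an agent answer can be used automatically or needs a human."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return _route("human", f"invalid JSON: {exc.msg}", None)
    if not isinstance(data, dict):
        return _route("human", "output is not a JSON object", None)
    # Format constraint: every required field present with the expected type.
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected):
            return _route("human", f"missing or invalid field {name!r}", data)
    # Grounding check: each citation must name a document that was retrieved.
    citations = data["citations"]
    if not citations:
        return _route("human", "no citations provided", data)
    ungrounded = [c for c in citations if not isinstance(c, str) or c not in retrieved_ids]
    if ungrounded:
        return _route("human", f"ungrounded citations: {ungrounded}", data)
    # Confidence gate: low scores trigger human intervention.
    if data["confidence"] < CONFIDENCE_THRESHOLD:
        return _route("human", "confidence below threshold", data)
    return _route("auto", "passed all checks", data)
```

Logging the `reason` field of every routed answer also produces the monitoring data needed to spot recurring inaccuracy patterns.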

Large-scale Performance Management

Running complex AI Agents in high-traffic production environments introduces engineering and operational challenges that may not be apparent during development or initial deployment. As request volumes grow, tool timeouts and failures, error responses, and resource bottlenecks in model serving and inference can quickly cascade into wider failures and significantly degrade system performance.

Solutions

To address these issues, the following strategies can be implemented:

◦ Implement robust error-handling mechanisms at every tool integration point, including the use of circuit breakers to prevent cascading failures.

◦ Establish retry mechanisms for tool call failures and reduce repeated model calls by maintaining response caches.

◦ Deploy a queue management system to control the rate of model calls and tool usage, ensuring the system can handle concurrent requests effectively.

◦ For agent workflows that include human review, have the model include citations in its output so reviewers can verify the source of each response.

◦ Set up LLMOps monitoring and tooling focused on common failure patterns, such as tool timeout rates, model response accuracy at scale, and system latency under high load. These metrics help you spot performance bottlenecks before they affect users and adjust rate limits and scaling strategies accordingly.
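The first two strategies in this list can be sketched together: a simple circuit breaker that stops calling a failing tool, a retry wrapper with exponential backoff, and an in-memory response cache. The thresholds, the `CircuitBreaker`, `with_retries`, and `call_tool` names, and the cache design are assumptions for illustration; a production system would more likely use a shared cache and an established resilience library than this hand-rolled version.

```python
import time
from typing import Callable, Dict, Hashable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised while the breaker is open and calls are being short-circuited."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; retries after `reset_after` s."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            self.opened_at = None  # half-open: let a trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5) -> T:
    """Retry fn with exponential backoff, but never retry into an open circuit."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise
        except Exception:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


_cache: Dict[Hashable, object] = {}


def call_tool(key: Hashable, fn: Callable[[], T], breaker: CircuitBreaker,
              attempts: int = 3, base_delay: float = 0.5) -> T:
    """Serve repeated requests from cache; otherwise call through breaker and retries."""
    if key in _cache:
        return _cache[key]  # type: ignore[return-value]
    result = with_retries(lambda: breaker.call(fn), attempts, base_delay)
    _cache[key] = result
    return result
```

Each retry passes through the breaker, so a tool that keeps failing trips the circuit, and later requests fail fast with `CircuitOpenError` instead of piling more load onto a struggling dependency.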

Summary

Building AI Agents that are reliable, scalable, and efficient in real-world applications takes far more than deploying a large language model. Structured reasoning, multi-step process handling, tool integration management, and hallucination control each present distinct challenges that must be addressed systematically. By implementing robust verification mechanisms, using structured prompting techniques, and building in fault tolerance, organizations can significantly improve the performance and stability of their AI Agents.
