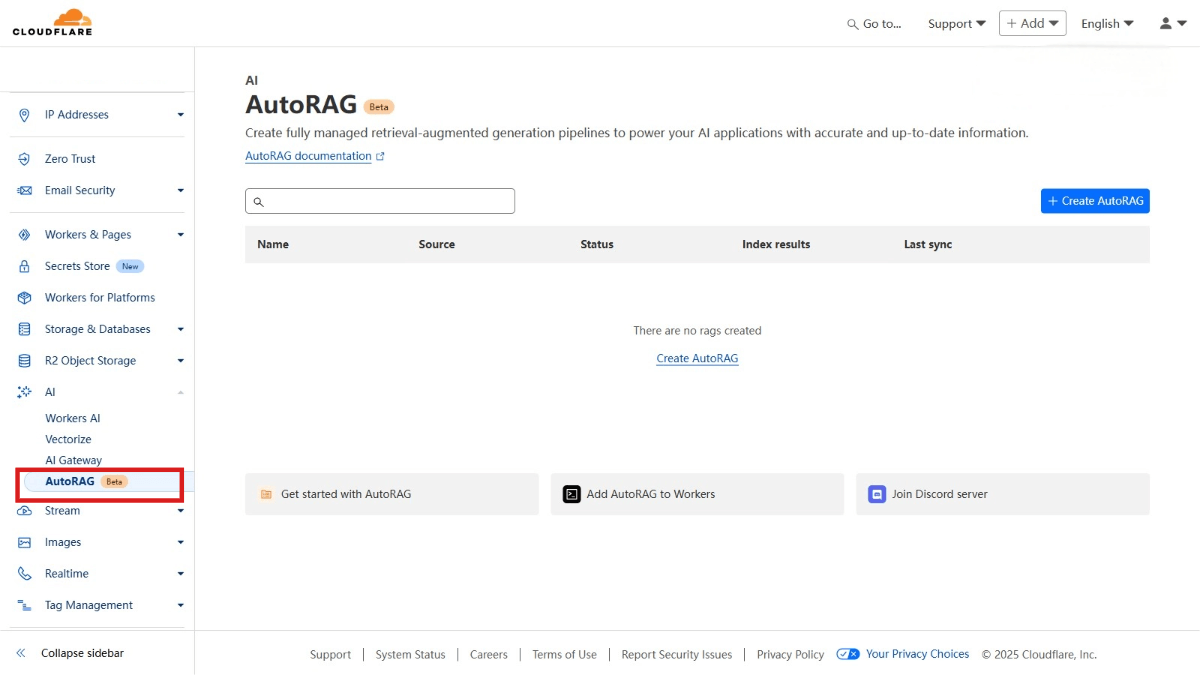

AutoRAG – A fully managed retrieval-augmented generation service launched by Cloudflare

What is AutoRAG?

AutoRAG is a fully managed Retrieval-Augmented Generation (RAG) pipeline launched by Cloudflare, designed to help developers easily integrate context-aware AI into their applications without the need to manage infrastructure. Leveraging automatic data source indexing and continuous content updates, Cloudflare AutoRAG combines technologies such as Cloudflare Workers AI and Vectorize to achieve efficient data retrieval and high-quality AI responses. AutoRAG can be used to build applications such as chatbots, internal knowledge tools, and enterprise knowledge search systems, streamlining the development process while enhancing application performance and user experience.

The main functions of AutoRAG

- Automated Indexing: Automatically ingests data from data sources (such as Cloudflare R2 buckets). Continuously monitors data sources and automatically re-indexes new or updated files to ensure the content is always up-to-date.

- Context-Aware Response: Retrieves relevant information from data sources during queries, combines it with user input, and generates accurate responses based data.

- High-Performance Semantic Retrieval: Performs efficient semantic search using a vector database (Cloudflare Vectorize) to ensure fast retrieval of relevant content.

- Integration and Scalability: Seamlessly integrates with other Cloudflare services (such as Workers AI and AI Gateway). Provides Workers Binding, enabling developers to directly call AutoRAG from Cloudflare Workers.

- Resource Management and Optimization: Offers similarity caching to reduce computational overhead for repeated queries and optimize performance. Supports various data sources, including directly parsing content from website URLs.

The Technical Principles of AutoRAG

- Indexing process:

◦ Extract files from the data source: Read files from the specified data source (such as an R2 bucket).

◦ Markdown conversion: Convert all files into structured Markdown format to ensure consistency.

◦ Chunk processing: Split the text content into smaller fragments to improve the granularity of retrieval.

◦ Embedding vectorization: The embedding model converts text fragments into vectors.

◦ Vector storage: Store the vectors and their metadata in Cloudflare’s Vectorize database. - Query Process:

◦ Query Reception: The user sends a query request based on the AutoRAG API.

◦ Query Rewriting (Optional): Rewrite the query using LLM to improve retrieval quality.

◦ Vector Conversion: Convert the query into a vector for comparison with vectors in the database.

◦ Vector Search: Search for the most relevant vector to the query vector in the Vectorize database.

◦ Content Retrieval: Retrieve relevant content and metadata from storage.

◦ Response Generation: LLM combines the retrieved content with the original query to generate the final response.

The official website address of AutoRAG.

- Website address: cloudflare.AutoRAG

Application scenarios of AutoRAG

- Support for chatbots: Provide intelligent Q&A services for customers based on the enterprise knowledge base, enhancing customer experience.

- Internal knowledge assistant: Assist employees in quickly finding internal documents and knowledge, improving work efficiency.

- Enterprise knowledge search: Provide semantic search functionality, enabling users to find the most relevant content within a large number of documents.

- Intelligent Q&A system: Generate intelligent Q&A pairs for use on FAQ pages or online help centers, providing personalized responses.

- Document semantic search: Conduct semantic searches within the enterprise document library to help users quickly locate the required files.

© Copyright Notice

The copyright of the article belongs to the author. Please do not reprint without permission.

Related Posts

No comments yet...