GLM-Z1-32B – Zhipu AI’s open-sourced new-generation reasoning model

What is GLM-Z1-32B?

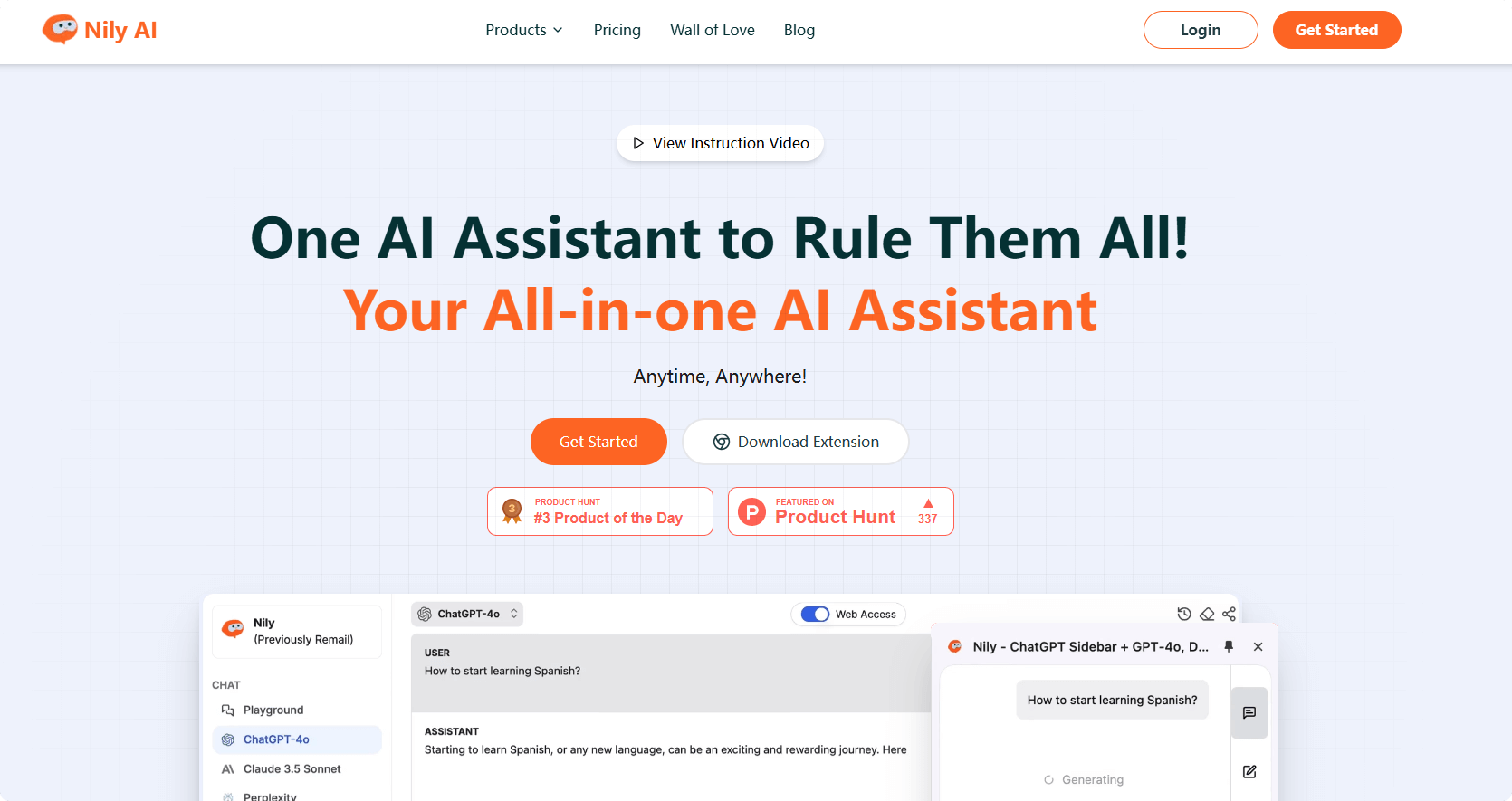

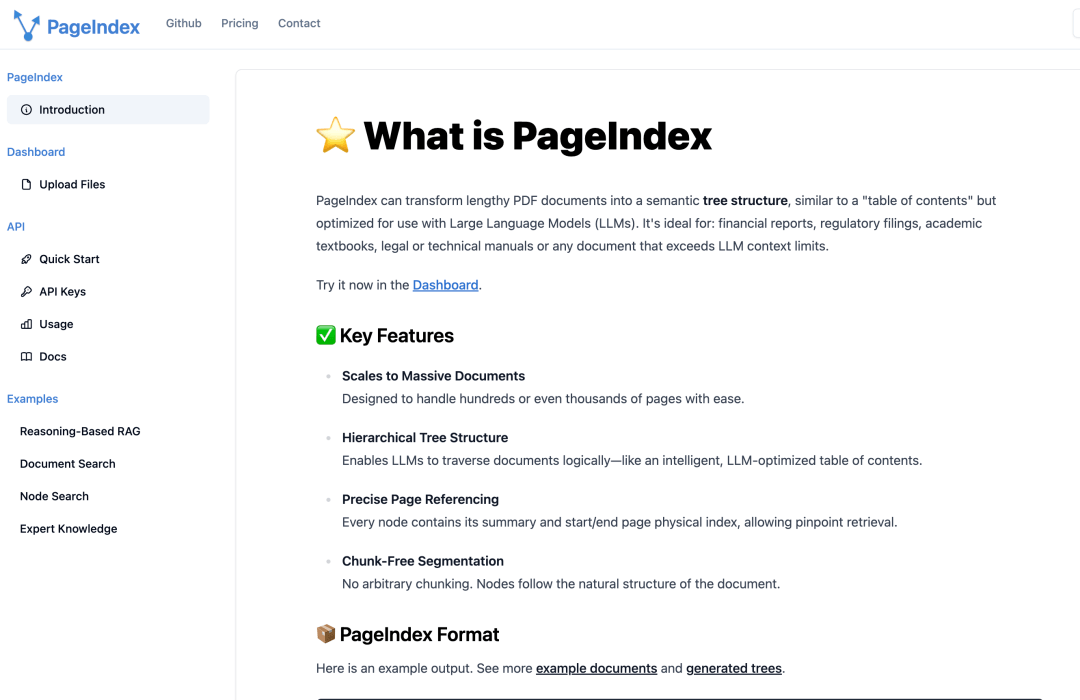

GLM-Z1-32B is a new-generation reasoning model open-sourced by Zhipu AI; the released checkpoint is GLM-Z1-32B-0414. It is built on the GLM-4-32B-0414 base model and further optimized through in-depth training. It performs strongly on mathematics, coding, and logic tasks, with some capabilities comparable to those of DeepSeek-R1, which has 671 billion parameters. Training uses cold-start and extended reinforcement learning strategies. The model reaches inference speeds of up to 200 tokens/s and supports lightweight deployment, which makes it suitable for complex reasoning tasks. It is released under the MIT license, so it is fully open source and unrestricted for commercial use. It can be used for free on the Z.ai platform, where the Artifacts feature can generate visual pages that are browsed by scrolling.
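
To make the "lightweight deployment" and "200 tokens/s" figures concrete, the following back-of-the-envelope sketch compares the memory needed for model weights alone and the time needed to emit a long reasoning trace. The precision options (bf16, int8, int4) and the 4,000-token trace length are illustrative assumptions, not figures from Zhipu AI; real deployments also need memory for the KV cache and activations.

```python
# Rough sizing sketch: covers weights only (no KV cache, activations, or overhead).
# The precisions below are illustrative assumptions, not official deployment settings.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def generation_seconds(num_tokens: int, tokens_per_second: float = 200.0) -> float:
    """Time to emit num_tokens at a steady decoding rate."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Parameter counts as quoted in the article (32B vs. 671B total parameters).
    for name, params in [("GLM-Z1-32B", 32e9), ("DeepSeek-R1", 671e9)]:
        sizes = {dtype: weight_memory_gb(params, dtype) for dtype in BYTES_PER_PARAM}
        print(name, sizes)

    # A hypothetical 4,000-token reasoning trace at the quoted peak speed.
    print(generation_seconds(4000), "seconds")
```

Under these assumptions the 32B weights need about 64 GB in bf16 and about 16 GB in 4-bit form, roughly one twenty-first of what the 671B-parameter model requires at the same precision.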

Main Functions of GLM-Z1-32B

- Math Problem Solving: Capable of handling complex mathematical problems, including reasoning and computation in fields such as algebra, geometry, and calculus.

- Logical Reasoning: Handles complex logical problems such as logic puzzles and logical proofs.

- Code Generation and Understanding: Supports code generation and code comprehension tasks, generating high-quality code snippets based on requirements or analyzing and optimizing existing code.

Technical Principles of GLM-Z1-32B

- Cold-Start Strategy: Early in training, a cold-start phase helps the model adapt quickly to the target tasks. Cold start here means fine-tuning a pre-trained model or running an initial training pass on task-specific data.

- Extended Reinforcement Learning Strategy: During training, the model's performance is optimized continuously with extended reinforcement learning, in which a reward mechanism guides the model toward the best behavior policy.

- Pairwise (Battle) Ranking Feedback: Training also includes general reinforcement learning based on pairwise ranking feedback. The model's outputs are compared head-to-head with those of other models or other versions of itself, and the resulting rankings teach it to make better decisions on complex tasks.

- Task-Specific Optimization: The model receives in-depth optimization training on mathematics, coding, and logic tasks. Extensive training on task-specific data helps it understand and solve problems in those domains.
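
The ranking-feedback idea in the list above can be illustrated with a small sketch that turns head-to-head comparisons into one scalar reward per response. The article does not say how Zhipu AI converts rankings into rewards, so the win-rate scheme, the `pairwise_rewards` function, and the `prefer` judge below are all hypothetical and serve only as an illustration.

```python
from itertools import combinations
from typing import Callable, List, Sequence


def pairwise_rewards(
    responses: Sequence[str],
    prefer: Callable[[str, str], bool],
) -> List[float]:
    """Turn pairwise comparisons into one scalar reward per response.

    prefer(a, b) is a hypothetical judge that returns True when response a
    is considered better than response b. Each response's reward is its win
    rate over all pairings, shifted so the rewards average to zero.
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses to compare")

    wins = [0] * len(responses)
    for i, j in combinations(range(len(responses)), 2):
        if prefer(responses[i], responses[j]):
            wins[i] += 1
        else:
            wins[j] += 1

    opponents = len(responses) - 1
    win_rates = [w / opponents for w in wins]
    mean_rate = sum(win_rates) / len(win_rates)
    return [rate - mean_rate for rate in win_rates]


if __name__ == "__main__":
    # Toy judge (assumption for the demo only): the longer answer wins.
    candidates = ["a", "bbb", "cc"]
    print(pairwise_rewards(candidates, lambda a, b: len(a) > len(b)))
```

Centering the rewards means responses that win more often than average receive a positive signal and the rest a negative one, which is a common way to feed a relative ranking into a policy-gradient update.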

Project Links for GLM-Z1-32B

- HuggingFace Model Hub: https://huggingface.co/THUDM/GLM-Z1-32B
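
For readers who want to try the open weights locally, the sketch below shows one plausible way to load the model with the Hugging Face `transformers` library. The repository id `THUDM/GLM-Z1-32B-0414` is an assumption derived from the version name mentioned earlier, so check the model hub for the exact id. The `build_messages` and `ask` helpers are hypothetical names introduced here, and `ask` requires `torch`, `transformers`, `accelerate`, and enough GPU memory for a 32B model.

```python
from typing import Dict, List

# Assumption: repo id inferred from the version name in this article; verify on the hub.
MODEL_ID = "THUDM/GLM-Z1-32B-0414"


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a user question in the role/content format used by chat templates."""
    return [{"role": "user", "content": question}]


def ask(question: str, max_new_tokens: int = 1024) -> str:
    """Load the model and generate one answer (heavy: downloads the full weights)."""
    # Imported lazily so the helpers above work without the ML stack installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,  # assumed precision; adjust to your hardware
        device_map="auto",           # requires the accelerate package
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, dropping the prompt.
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `ask("Prove that the sum of two even integers is even.")` would return the model's reasoning and answer as text. Reasoning models tend to produce long outputs, so a generous `max_new_tokens` is usually needed.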

Application Scenarios of GLM-Z1-32B

- Mathematics and Logical Reasoning: Solve math problems and logical puzzles, and assist in education and scientific research.

- Code Generation and Optimization: Quickly generate code snippets, optimize existing code, and improve development efficiency.

- Natural Language Processing: Handle tasks such as question answering, text generation, and sentiment analysis, with applications in intelligent customer service and content creation.

- Educational Resource Assistance: Provide intelligent tutoring, generate exercises and tests, and facilitate teaching.
