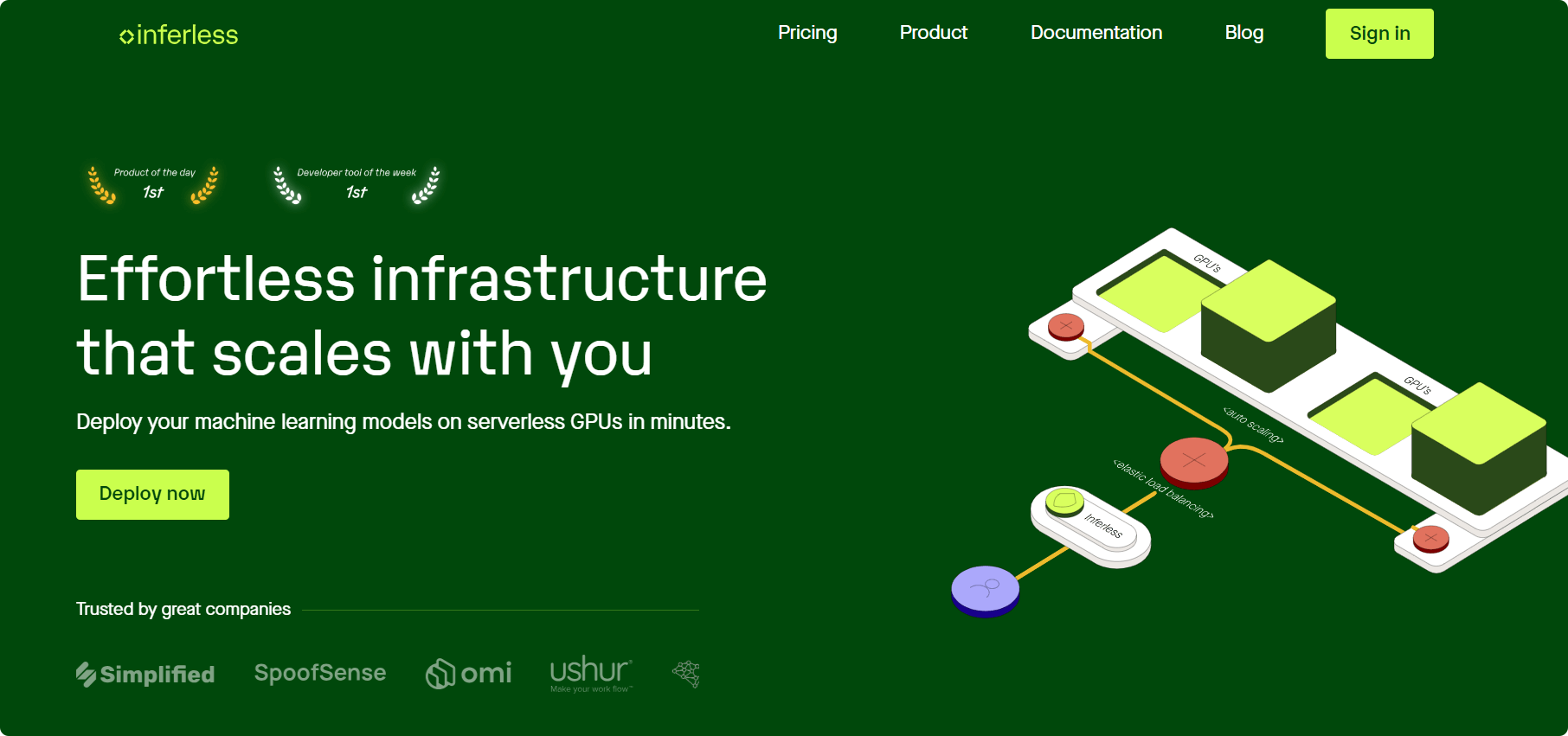

Inferless: Empowering AI Deployment with Serverless GPU Inference

What is Inferless?

Inferless is a serverless GPU platform designed to simplify and accelerate the deployment of machine learning models. By removing the need to manage the underlying infrastructure, it lets developers deploy custom models quickly. It supports models from a range of sources and frameworks, including Hugging Face, SageMaker, PyTorch, and TensorFlow.

Key Features

1. Rapid Deployment

Deploy a machine learning model with just a few lines of code. Models can be imported from Hugging Face, GitHub, or Docker, or pushed with the CLI, and can go live within minutes.
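
As a rough illustration of what "a few lines of code" can look like, the sketch below follows the handler shape described in the Inferless documentation: a class with `initialize`, `infer`, and `finalize` methods. The input and output keys and the stand-in model are assumptions made for this example, so check the official docs for the exact contract before relying on it.

```python
# Sketch of a model handler. The method names follow the pattern described
# in the Inferless docs; treat the exact contract as an assumption.

class InferlessPythonModel:
    def initialize(self):
        # Runs once per replica: load weights here so requests stay fast.
        # A trivial stand-in replaces a real model to keep this self-contained.
        self.model = lambda text: text.upper()

    def infer(self, inputs):
        # Runs per request. `inputs` is assumed to be a dict of named fields.
        prompt = inputs["prompt"]  # hypothetical input key
        return {"generated_text": self.model(prompt)}  # hypothetical output key

    def finalize(self):
        # Runs on shutdown: drop the model so memory can be reclaimed.
        self.model = None


if __name__ == "__main__":
    handler = InferlessPythonModel()
    handler.initialize()
    print(handler.infer({"prompt": "hello"}))
    handler.finalize()
```

Keeping the expensive load step in `initialize` rather than `infer` means it is paid once per replica instead of once per request.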

2. Elastic Scalability

Inferless automatically scales GPU resources based on workload demands, handling anything from a single request to millions without manual intervention.
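
The scaling policy Inferless uses internally is not described here, but the general idea of demand-based scaling can be sketched in a few lines. The function below is a generic illustration, not Inferless's algorithm: `desired_replicas` and all of its parameters are hypothetical names, and it assumes each replica can serve a fixed number of concurrent requests.

```python
import math


def desired_replicas(in_flight, per_replica_concurrency,
                     min_replicas=0, max_replicas=10):
    """Return how many replicas are needed for the current load.

    Generic sketch: divide in-flight requests by the per-replica
    concurrency, round up, then clamp to the configured bounds.
    """
    if per_replica_concurrency <= 0:
        raise ValueError("per_replica_concurrency must be positive")
    needed = math.ceil(in_flight / per_replica_concurrency)
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for load in (0, 1, 9, 1000):
        print(load, "->", desired_replicas(load, per_replica_concurrency=4))
```

With `min_replicas=0` the service scales to zero when idle, which is what makes cold start time matter for a serverless platform.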

3. Custom Runtimes

Users can create custom container environments tailored to their specific model requirements, ensuring compatibility and optimal performance.

4. Persistent Storage

Provides NFS-like writable volumes that support simultaneous connections from multiple replicas, facilitating efficient data sharing and persistence.

5. Automated CI/CD

Integrates with continuous integration and deployment pipelines, allowing for automatic rebuilding and deployment of models, thus streamlining the development process.

6. Advanced Monitoring

Offers detailed logs for both inference and build processes, aiding in effective monitoring and debugging of deployed models.

7. Dynamic Batching

Improves throughput by combining concurrent requests on the server side into a single batch, making better use of the GPU and reducing queueing delays under load.
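
To make the idea concrete, here is a minimal sketch of server-side batching in general, assuming requests arrive on an in-process queue. It is not Inferless's implementation: `collect_batch`, `run_model`, `max_batch_size`, and `max_wait_s` are hypothetical names chosen for this example.

```python
import queue
import time


def collect_batch(pending, max_batch_size=8, max_wait_s=0.01):
    """Wait for one request, then gather more until the batch is full
    or the wait budget is spent, whichever comes first."""
    batch = [pending.get()]  # block until at least one request exists
    deadline = time.monotonic() + max_wait_s
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(pending.get(timeout=remaining))
        except queue.Empty:
            break
    return batch


def run_model(batch):
    # Stand-in for a single batched forward pass on the GPU.
    return [x * 2 for x in batch]


if __name__ == "__main__":
    q = queue.Queue()
    for i in range(5):
        q.put(i)
    print(run_model(collect_batch(q, max_batch_size=4, max_wait_s=1.0)))
```

The wait budget is the trade-off to tune: a longer `max_wait_s` yields fuller batches and higher throughput, while a lone request may wait up to that long before it is served.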

8. Private Endpoints

Allows customization of endpoints with settings for scaling, timeouts, concurrency, testing, and webhooks, providing greater control over deployments.

Technical Overview

Inferless leverages a serverless architecture to provide on-demand GPU resources without the overhead of managing servers. It utilizes GPU virtualization to deploy multiple models on a single GPU instance, catering to diverse customer needs. By placing high IOPS storage close to the GPU, Inferless significantly reduces model loading times, cutting cold start durations from several minutes to approximately 10 seconds.
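
A back-of-envelope calculation shows why storage placement dominates cold start time: loading weights takes roughly model size divided by read throughput. The sketch below uses hypothetical numbers (a 14 GB model, 0.1 GB/s for remote storage, 2 GB/s for fast local storage) purely for illustration; they are not measurements from Inferless.

```python
def load_time_seconds(model_size_gb, read_throughput_gb_per_s):
    """Estimate the time to read model weights from storage.

    Ignores container start-up and GPU transfer, so it is a lower bound.
    """
    if read_throughput_gb_per_s <= 0:
        raise ValueError("read_throughput_gb_per_s must be positive")
    return model_size_gb / read_throughput_gb_per_s


if __name__ == "__main__":
    model_size_gb = 14.0  # hypothetical model size
    for label, throughput in (("remote storage", 0.1), ("local fast storage", 2.0)):
        seconds = load_time_seconds(model_size_gb, throughput)
        print(f"{label}: about {seconds:.0f} s")
```

Under these assumed figures the load step drops from minutes to seconds, which is the same order of improvement the paragraph above describes.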

Project Links

- Official Website: https://www.inferless.com
- Console Access: https://console.inferless.com
- Documentation: https://docs.inferless.com
- GitHub Repository: https://github.com/inferless

Use Cases

Inferless is versatile and supports a wide range of AI inference scenarios, including:

- Large Language Model (LLM) Chatbots: Deploy models like GPT-J or GPT-Neo for real-time conversational agents.
- Computer Vision Applications: Implement image-to-image and image-to-text transformations, as well as video editing tasks.
- Speech Recognition and Generation: Develop audio-to-text transcription services suitable for customer support or meeting documentation.
- Recommendation Systems: Create personalized recommendations for e-commerce platforms based on user behavior.
- Batch Processing Tasks: Handle large-scale data analysis and predictive model inference in batch operations.

Inferless’s serverless GPU platform offers a robust, flexible, and cost-effective solution for deploying AI models, empowering businesses to scale and innovate without the complexities of infrastructure management.
