Gemma 3 QAT – Google’s Latest Open-Source Model, Quantized Version of Gemma 3

What is Gemma 3 QAT?

Gemma 3 QAT (Quantization-Aware Training) is Google’s latest open-source model and a quantization-optimized version of Gemma 3. By using QAT techniques, Gemma 3 QAT dramatically reduces memory requirements while preserving high-quality performance. For instance, the memory footprint of Gemma 3 27B has been reduced from 54GB to just 14.1GB, enabling local deployment on consumer GPUs like the NVIDIA RTX 3090. Similarly, Gemma 3 12B’s memory requirement drops from 24GB to 6.6GB, allowing efficient usage on laptops with GPUs such as the NVIDIA RTX 4060. This enables more users to experience powerful AI models on standard hardware.

Key Features of Gemma 3 QAT

• Significant Memory Reduction:

Thanks to quantization-aware training, Gemma 3 QAT drastically reduces memory usage:

- Gemma 3 27B: From 54GB (BF16) to 14.1GB (int4), which fits on a consumer GPU such as the RTX 3090.

- Gemma 3 12B: From 24GB (BF16) to 6.6GB (int4), which runs efficiently on laptop GPUs such as the RTX 4060.

- Smaller variants (4B, 1B): Can even run on mobile and edge devices.
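As a rough sanity check on the figures above, weight storage can be estimated as parameter count times bits per weight. The sketch below is only back-of-the-envelope arithmetic: the helper name `weight_memory_gb` is made up for illustration, and it assumes decimal gigabytes and counts weights only.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts; the real checkpoints are not exactly 27e9 / 12e9.
for name, params in [("27B", 27e9), ("12B", 12e9)]:
    bf16 = weight_memory_gb(params, 16)  # BF16 stores 16 bits per weight
    int4 = weight_memory_gb(params, 4)   # int4 stores 4 bits per weight
    print(f"Gemma 3 {name}: BF16 ~{bf16:.1f} GB, int4 ~{int4:.1f} GB")
```

The BF16 estimates match the quoted 54GB and 24GB. The raw 4-bit estimates (13.5 GB and 6 GB) come out slightly below the quoted 14.1GB and 6.6GB; a plausible reason is that quantized checkpoints also store scale factors and keep some tensors at higher precision, though the article does not break this down.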

• High Performance Preservation:

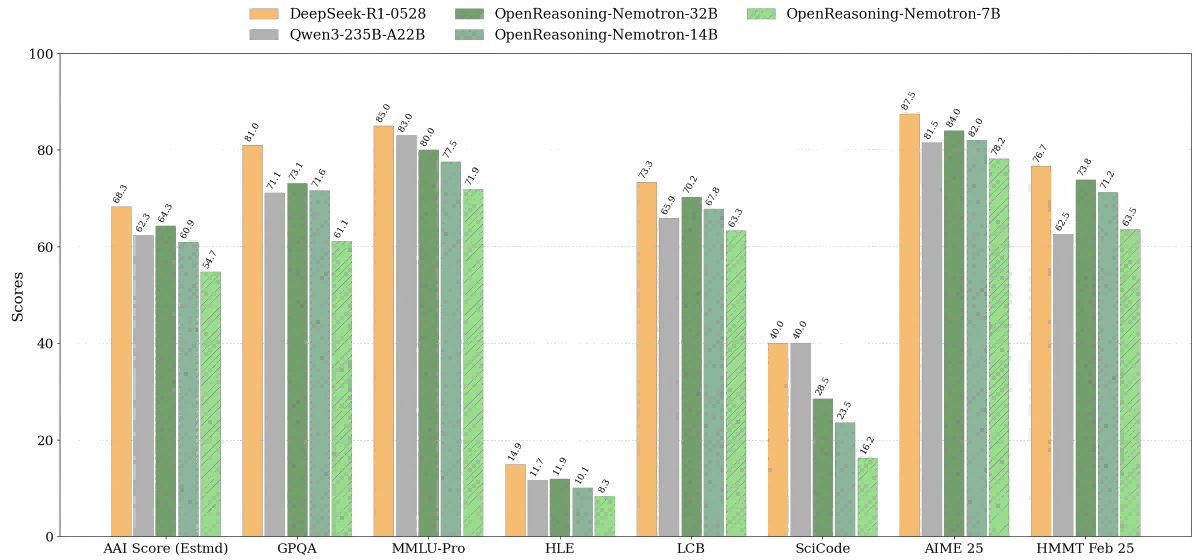

Despite the quantization, performance remains close to the original BF16 model. On the Chatbot Arena Elo leaderboard, the QAT versions remain competitive. Google reports that applying QAT for about 5,000 training steps reduced the perplexity drop caused by quantization by 54%.

• Multimodal Capabilities:

Gemma 3 QAT accepts both image and text input and generates text, making it well suited to tasks like Visual Question Answering (VQA) and document analysis.

• Long Context Window:

Supports up to 128,000 tokens using hybrid attention mechanisms (sliding window + global attention), significantly reducing KV cache memory usage.
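To see why mixing sliding-window and global attention layers shrinks the KV cache, the sketch below compares an all-global layout with a hybrid one. Every number in it (layer count, KV heads, head dimension, window size, local-to-global ratio) is an illustrative assumption and is not taken from this article or from a specific Gemma 3 checkpoint; `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(context_len, num_layers, num_kv_heads, head_dim,
                   bytes_per_value=2, local_per_global=0, window=None):
    """Estimate KV cache size in bytes for a single sequence.

    local_per_global: sliding-window layers per global layer (0 = all global).
    window: number of tokens a sliding-window layer keeps in its cache.
    """
    # Each cached token stores one key and one value vector per KV head.
    per_token_per_layer = 2 * num_kv_heads * head_dim * bytes_per_value
    if local_per_global == 0 or window is None:
        return num_layers * context_len * per_token_per_layer
    num_global = num_layers // (local_per_global + 1)
    num_local = num_layers - num_global
    local_tokens = min(window, context_len)  # local layers never exceed the window
    cached_tokens = num_global * context_len + num_local * local_tokens
    return cached_tokens * per_token_per_layer


# Hypothetical configuration, chosen only to make the arithmetic concrete.
cfg = dict(context_len=128_000, num_layers=48, num_kv_heads=8, head_dim=128)
full = kv_cache_bytes(**cfg)
hybrid = kv_cache_bytes(**cfg, local_per_global=5, window=1024)
print(f"all global: {full / 1e9:.2f} GB, hybrid: {hybrid / 1e9:.2f} GB")
```

Under these assumed values the global layers dominate the hybrid cache, because each sliding-window layer only holds its last `window` tokens no matter how long the context grows.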

• Hardware Efficiency:

Designed for various consumer-grade hardware including desktop GPUs, laptop GPUs, and edge devices.

• Framework Compatibility:

Supported by major inference frameworks such as Ollama, LM Studio, llama.cpp, and MLX, making deployment easy across platforms.

Technical Highlights

• Pseudo-Quantization:

During forward passes, the model simulates low-precision arithmetic using pseudo-quantization layers that round weights and activations.

• High-Precision Backpropagation:

Gradients are computed in high precision (floating point), ensuring accurate weight updates during training.

• Training and Quantization Combined:

The model learns to adapt to low-precision environments during training, leading to minimal accuracy loss after quantization.
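The three ideas above (rounding in the forward pass, full-precision gradients, and training under quantization) can be sketched on a toy linear model. This is a minimal illustration rather than Google's training recipe: it assumes per-tensor symmetric quantization and a straight-through estimator, and the names `fake_quantize` and `qat_step` are hypothetical.

```python
def fake_quantize(weights, num_bits=4):
    """Snap float weights to a symmetric signed integer grid, then map back to float."""
    qmax = 2 ** (num_bits - 1) - 1                  # 7 for int4
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)                        # nothing to scale
    scale = max_abs / qmax                          # one scale for the whole tensor
    return [max(-qmax - 1, min(qmax, round(w / scale))) * scale for w in weights]


def qat_step(weights, batch, lr=0.05, num_bits=4):
    """One SGD step on mean squared error for a linear model y ~ w . x."""
    w_q = fake_quantize(weights, num_bits)          # forward pass sees rounded weights
    grads = [0.0] * len(weights)
    loss = 0.0
    for x, y in batch:
        err = sum(wq_i * x_i for wq_i, x_i in zip(w_q, x)) - y
        loss += err * err / len(batch)
        for i, x_i in enumerate(x):
            grads[i] += 2.0 * err * x_i / len(batch)
    # Straight-through estimator: treat rounding as identity in the backward
    # pass, so the gradient updates the full-precision weights directly.
    new_weights = [w - lr * g for w, g in zip(weights, grads)]
    return new_weights, loss


# Toy data: three (input, target) pairs for a two-weight model.
batch = [([1.0, 0.0], 0.5), ([0.0, 1.0], -0.25), ([1.0, 1.0], 0.25)]
weights = [0.0, 0.0]
for _ in range(200):
    weights, loss = qat_step(weights, batch)
print("full-precision weights:", weights)
print("deployed int4 weights: ", fake_quantize(weights))
```

The full-precision weights are kept throughout training and only rounded when used, so the optimizer can accumulate small updates that would otherwise be lost to rounding.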

• KV Cache Optimization:

Utilizes sparse caching and dynamic compression to lower memory usage in long-context tasks.

• Hardware Acceleration:

Inference is accelerated by SIMD instruction sets such as AVX-512 and NEON, boosting speed by up to 3×.

Project Page

• Official project website: Gemma 3 QAT

• HuggingFace Model Hub: https://huggingface.co/collections/google/gemma-3-qat

Use Cases for Gemma 3 QAT

• Visual Question Answering (VQA):

The quantized model performs well on multimodal benchmarks such as DocVQA, achieving results close to those of the FP16 versions.

• Document Analysis:

With 128K token context support, Gemma 3 QAT is ideal for handling long documents and complex data structures.

• Long-Form Generation:

Optimized KV caching and grouped-query attention (GQA) reduce memory use by 40% and speed up inference by 1.8× in long-context scenarios.

• Long-Sequence Reasoning:

Effective in tasks requiring reasoning over lengthy inputs like legal documents or research papers.

• Edge Deployment:

The 1B version (just 529MB) can run offline on Android or web environments with latencies as low as 10ms, suitable for privacy-sensitive areas like healthcare and finance.
