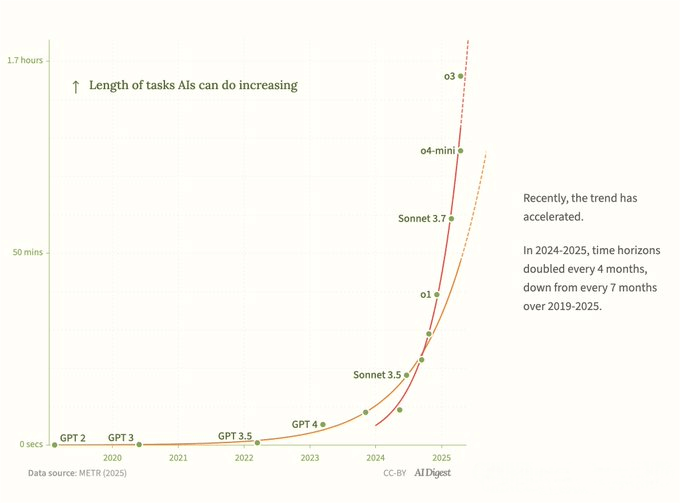

o3 sends agent capabilities soaring, but the founder of LangChain says OpenAI may be on a rather narrow path.

Last week, OpenAI’s o3 model went viral. Early users are raving about its capabilities, reporting noticeable leaps in agent performance. Shortly after, OpenAI released a practical guide to building AI agents. But does this mean OpenAI’s LLM-centric approach is the ultimate solution?

Harrison Chase, the founder of LangChain, recently went public with some strong criticism, arguing that things are not that simple.

o3: Not just smarter, but more like an agent

The most striking aspect of o3 is undoubtedly how complete its agentic capabilities are. It no longer merely answers questions; it acts like an assistant, proactively using tools to accomplish more complex tasks.

First, o3 has made remarkable progress in tool use and autonomous planning. It can run multiple iterative searches to find useful information, much like Deep Research. It can also call a Python environment to execute code, analyze data, and even generate charts. I tested it on some data analysis tasks, and o3 provided not only in-depth analyses but also quite professional visualizations and strategic suggestions. Because tool use is built into the Chain of Thought (CoT) process, the experience is extremely smooth, and much faster than many agent products built on external frameworks.

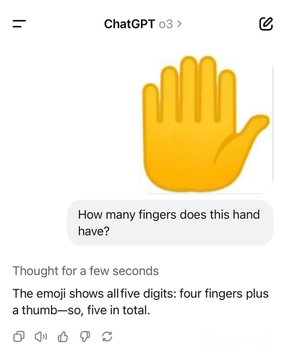

Second, o3 achieves multimodal Chain-of-Thought (CoT) reasoning for the first time, which is a major breakthrough. The model can not only “understand” images but also directly reference and manipulate them within its chain of thought. For example, if you show it a blurry screenshot and ask where it was taken, it may crop the image and zoom in on the key area while thinking to help it decide. This ability to integrate visual and text-based reasoning lets it handle real-world information more skillfully, like a “private detective”.

What’s more, the reliability of o3 has also been enhanced as it has learned to “say no”. OpenAI claims that o3 has a 20% lower error rate than o1 on difficult tasks. One manifestation is that when information is insufficient or a task cannot be completed, it will choose to refuse to answer instead of forcibly “hallucinating” an answer. This is crucial for the reliability of agents in practical applications.

Although o3 still has room for improvement in visual reasoning (such as counting fingers and reading a clock) and certain coding tasks, its remarkable agentic capabilities undoubtedly represent the significant progress OpenAI has made in this direction.

The Route Debate between the Workflow Faction and the LLM Faction

The success of o3 seems to confirm OpenAI’s approach: empowering increasingly powerful large language models (LLMs) as the core “brains” and driving agents to complete tasks through prompts and tool calling.

But Harrison Chase disagrees that this is the only, or even the best, approach. He believes there are deeper divergences over direction in the current field of agent construction.

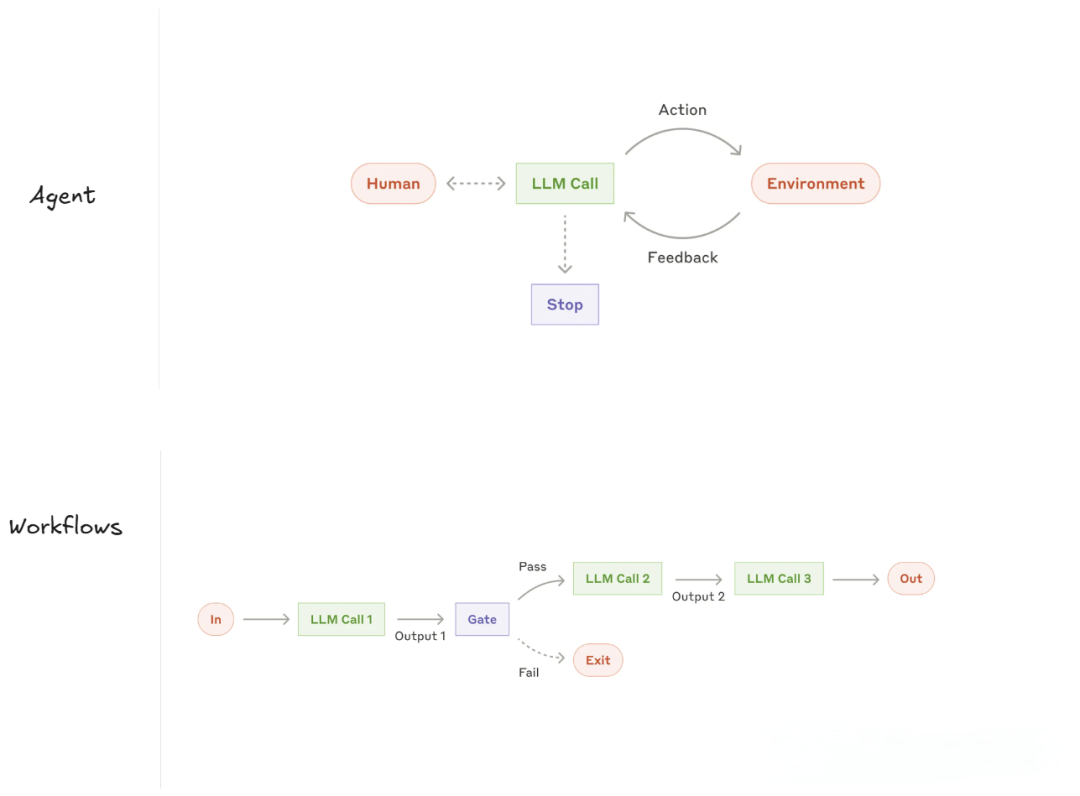

We first need to understand the concept of “agentic systems”. The article “Building Effective Agents”, published by Anthropic last year, proposes that agentic systems take two main forms: Workflows and Agents. Workflows coordinate LLMs and tools through predefined code paths, so the process is relatively fixed. Agents, in contrast, let the LLM dynamically decide the process and which tools to use, which makes them more autonomous.
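The distinction can be made concrete with a small sketch. Everything here is hypothetical: `fake_llm` is a canned stand-in for a real model call, and `search` is a toy tool. The only point is who decides the next step, the code or the model.

```python
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; returns canned text so the sketch runs offline."""
    if prompt.startswith("DECIDE"):
        # Pretend the model searches first, then finishes once it sees results.
        return "finish" if "results for" in prompt else "search"
    return f"summary of [{prompt}]"


def search(query: str) -> str:
    """Toy tool (assumption): a real system would call a search API here."""
    return f"results for {query}"


TOOLS: Dict[str, Callable[[str], str]] = {"search": search}


def run_workflow(question: str) -> str:
    """Workflow: the code fixes the path (search, then summarize)."""
    evidence = search(question)
    return fake_llm(evidence)


def run_agent(question: str, max_steps: int = 5) -> List[str]:
    """Agent: the model picks the next action on every turn."""
    trace: List[str] = []
    context = question
    for _ in range(max_steps):
        action = fake_llm(f"DECIDE next action given: {context}")
        trace.append(action)
        if action == "finish":
            break
        context = TOOLS[action](context)
    return trace
```

In the workflow, the sequence of calls is visible in the source code; in the agent loop, it only becomes visible in the trace after the run.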

The key point is that both Anthropic and LangChain have observed that, in the real world, most production-grade agentic systems are a combination of workflows and agents.

So, what are the core challenges in building an Agent? Harrison Chase points out that the real challenge lies in ensuring that the LLM can obtain the most appropriate contextual information at each step. LLMs may make mistakes either because the model’s capabilities are insufficient or because the contextual information provided to them is incorrect or incomplete.

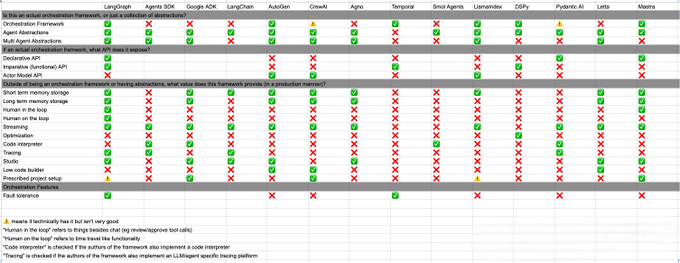

This is the core reason why LangChain questions OpenAI’s approach. In its guidelines, OpenAI implies that “code-first” solutions like their Agents SDK are more flexible than “declarative” frameworks. However, Chase believes this confuses concepts. He points out that many so-called Agent frameworks, including the Agents SDK and earlier LangChain components, are essentially just Agent abstractions.

Although this kind of encapsulation lowers the barrier to entry, it also brings a low ceiling. These abstractions behave like black boxes: they hide too many underlying details, making it extremely difficult for developers to control precisely what context is passed to the LLM at each step. Yet that control is exactly what building a reliable Agent requires, and effectiveness is the most immediate challenge Agents face once deployed.

By contrast, a Workflow’s structured nature gives it better predictability and precise control over data flow, making it easier to ensure that the LLM receives the correct context. In many scenarios, a Workflow is simpler, more reliable, and cheaper.
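As a minimal sketch of what “controlling the context” means in practice, the function below assembles the prompt for one step from explicit pieces of state. The `State` fields, the limits, and the prompt layout are all assumptions made for illustration, not any framework’s API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class State:
    """Hypothetical state carried between workflow steps."""
    question: str
    retrieved_docs: List[str] = field(default_factory=list)
    chat_history: List[str] = field(default_factory=list)


def build_prompt(state: State, max_docs: int = 2, max_turns: int = 1) -> str:
    """Assemble exactly the context this step should see, and nothing more."""
    docs = state.retrieved_docs[:max_docs]
    # Guard against max_turns == 0, because a [-0:] slice would return the whole list.
    history = state.chat_history[-max_turns:] if max_turns > 0 else []
    parts = ["Question: " + state.question]
    parts += [f"Doc {i + 1}: {d}" for i, d in enumerate(docs)]
    parts += [f"Previous turn: {t}" for t in history]
    return "\n".join(parts)
```

Because the prompt is built by ordinary code, it can be logged, tested, and changed per step, which is much harder when an abstraction assembles it out of sight.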

Based on these considerations, LangChain launched LangGraph. It is a low-level orchestration framework rather than a simple Agent wrapper. It lets developers define agentic systems using nodes and edges: the graph structure is declarative, while the logic inside it is ordinary imperative code. The goal is to offer both a “low barrier to entry” (through higher-level abstractions) and a “high ceiling” (through low-level flexibility), so that developers can flexibly combine and switch between Workflows and Agents and control the context precisely.
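To illustrate the nodes-and-edges idea without depending on any library, here is a toy graph runner in plain Python. It is not LangGraph’s API; `TinyGraph`, `END`, and the `draft`/`review` nodes are hypothetical names invented for this sketch. A string edge is a fixed, workflow-style transition, while a function edge decides the next node at run time.

```python
from typing import Any, Callable, Dict, Union

State = Dict[str, Any]
Node = Callable[[State], State]
# An edge is either a fixed next-node name or a router that picks one from the state.
Edge = Union[str, Callable[[State], str]]

END = "__end__"  # sentinel node name (assumption for this sketch)


class TinyGraph:
    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, Edge] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Edge) -> None:
        self.edges[src] = dst

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current, steps = start, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached")
            state = self.nodes[current](state)  # imperative code inside the node
            edge = self.edges[current]
            current = edge(state) if callable(edge) else edge
            steps += 1
        return state


def draft(state: State) -> State:
    attempts = state.get("attempts", 0) + 1
    return {**state, "attempts": attempts, "draft": f"draft v{attempts}"}


def review(state: State) -> State:
    # Toy rule standing in for an LLM or human judgment: approve the second draft.
    return {**state, "approved": state["attempts"] >= 2}


graph = TinyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")  # fixed edge
graph.add_edge("review", lambda s: END if s["approved"] else "draft")  # dynamic edge
result = graph.run("draft", {})
```

Mixing the two edge types in one graph is one way to picture the hybrid of Workflows and Agents that the article describes.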

Is o3 the optimal solution? A collision of two approaches

Looking back at o3, it is undoubtedly strong evidence for the LLM-dominant approach. Its advantages are a high degree of end-to-end integration, a seamless user experience, and the potential for a very high capability ceiling as the model itself continues to evolve.

However, its “black-box” nature also poses challenges. Developers find it hard to intervene precisely in each step of the model’s thinking or to inspect the information it obtains. This may lead to reliability issues in certain tasks, such as specific visual recognition and complex coding. It is also hard to debug, which makes it difficult to pinpoint the root cause when errors occur.

In comparison, although the Workflow or Hybrid mode may require more design efforts, it offers greater controllability, predictability, and debuggability. This advantage is likely to be even more crucial in complex enterprise scenarios where high reliability is required.

Even in a multi-agent system, communication among agents remains a core issue. A structured Workflow is often the best way to achieve efficient and reliable collaboration, rather than letting agents converse completely freely.

Where are Agents headed?

The capabilities of large language models (LLMs) will continue to grow stronger. Does this mean that workflows will eventually become obsolete? Chase doesn’t think so.

He predicts that the hybrid model will become the norm. Most production-grade applications will continue to be a combination of Workflow and Agent to balance flexibility and reliability. For many well-defined tasks, Workflow remains the better choice.

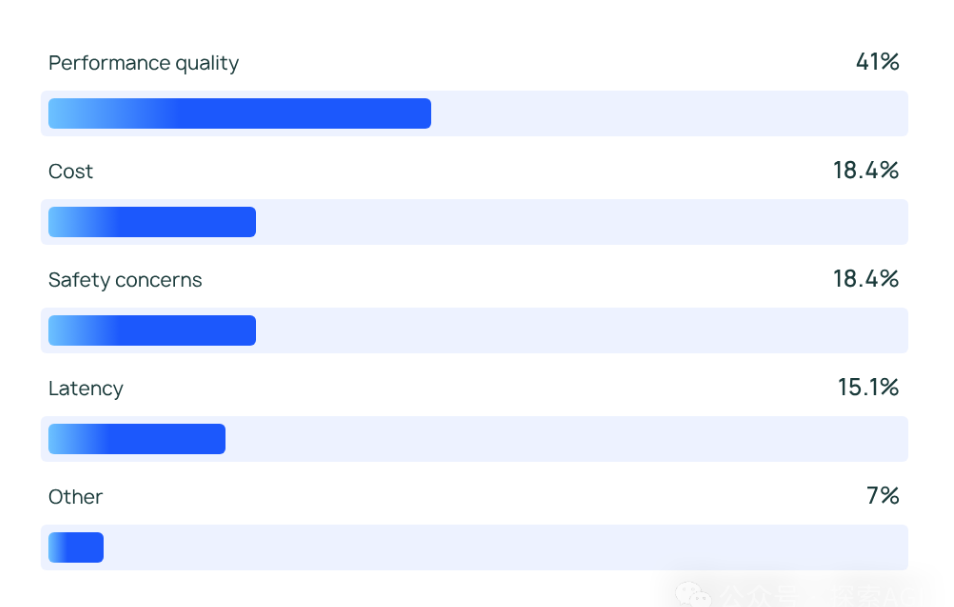

At the same time, a good Agentic framework offers far more than just Agent encapsulation. It should provide a reliable orchestration layer and address key issues in production environments. This includes managing conversation history (short-term memory), enabling Agents to learn from cross-session experiences (long-term memory), seamlessly integrating human approval or feedback (human-machine collaboration/supervision), providing smooth real-time updates (streaming), implementing effective debugging and observability for LLMs, and ensuring system fault tolerance. These are all crucial for building reliable and maintainable systems.
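Two of these concerns, short-term memory and human approval, can be sketched in a few lines. This is an illustrative toy under stated assumptions: `Session`, `run_with_approval`, and the `approve` callback are hypothetical names, and a real orchestration layer would persist state and pause execution rather than call a function inline.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Session:
    """Hypothetical short-term memory: keeps only the most recent entries."""
    history: List[str] = field(default_factory=list)
    max_turns: int = 4  # must be positive in this sketch

    def remember(self, message: str) -> None:
        self.history.append(message)
        self.history = self.history[-self.max_turns:]


def run_with_approval(action: str, session: Session,
                      approve: Callable[[str], bool]) -> str:
    """Gate an action behind a human decision and record the outcome."""
    session.remember(f"proposed: {action}")
    if not approve(action):
        session.remember(f"rejected: {action}")
        return "rejected"
    session.remember(f"executed: {action}")
    return "executed"
```

Here `approve` would normally wrap a UI prompt or review queue; passing it in as a callback keeps the gate testable without a human present.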

Going forward, gains in Agent intelligence will rely more on RL scaling. As o3 demonstrates, large-scale reinforcement learning improves the model’s “thinking” and tool use, and performance keeps improving clearly as more compute is invested.

Conclusion

The release of o3 is undoubtedly a significant milestone in the development of Agents, demonstrating the tremendous potential of the LLM-led approach. However, there is not just one way to build Agents. Ensuring that LLMs obtain the correct context is the core challenge, and the combination of Workflow and Agent, along with a flexible and controllable orchestration framework, may be the key to more reliable and powerful Agentic systems.

In the future, by combining RL scaling and experience-driven learning, we may witness the birth of truly intelligent agents.
