Prospect of a DeepSeek R2 release sparks speculation

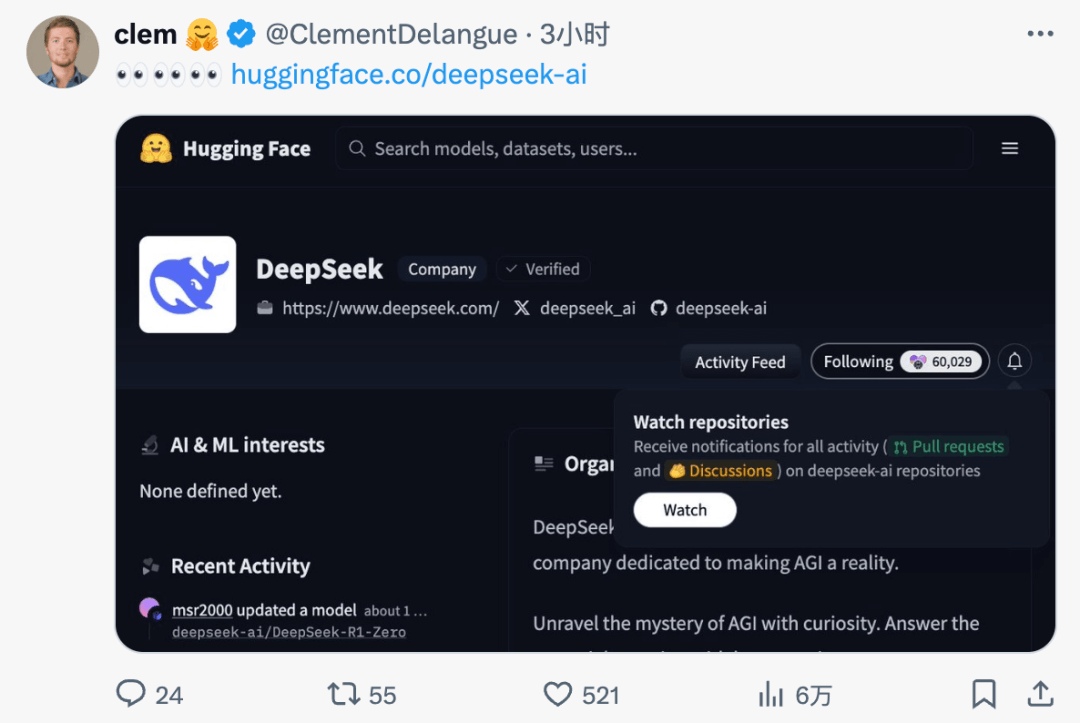

A tweet from Hugging Face CEO Clément Delangue has sparked widespread speculation that DeepSeek R2 may be close to release. The link attached to the tweet points to DeepSeek’s Hugging Face repository. Meanwhile, purported leaked details about DeepSeek R2 are spreading rapidly on social media. According to the leak, the model has 1.2 trillion total parameters, of which 78 billion are active (about 6.5% of the total), and uses a hybrid MoE (Mixture of Experts) architecture. Its cost is reported to be 97.3% lower than that of GPT-4o, with 5.2 PB of training data and a test score of 89.7%. None of this information has been confirmed. As of now, neither DeepSeek nor Qwen officials have commented.
