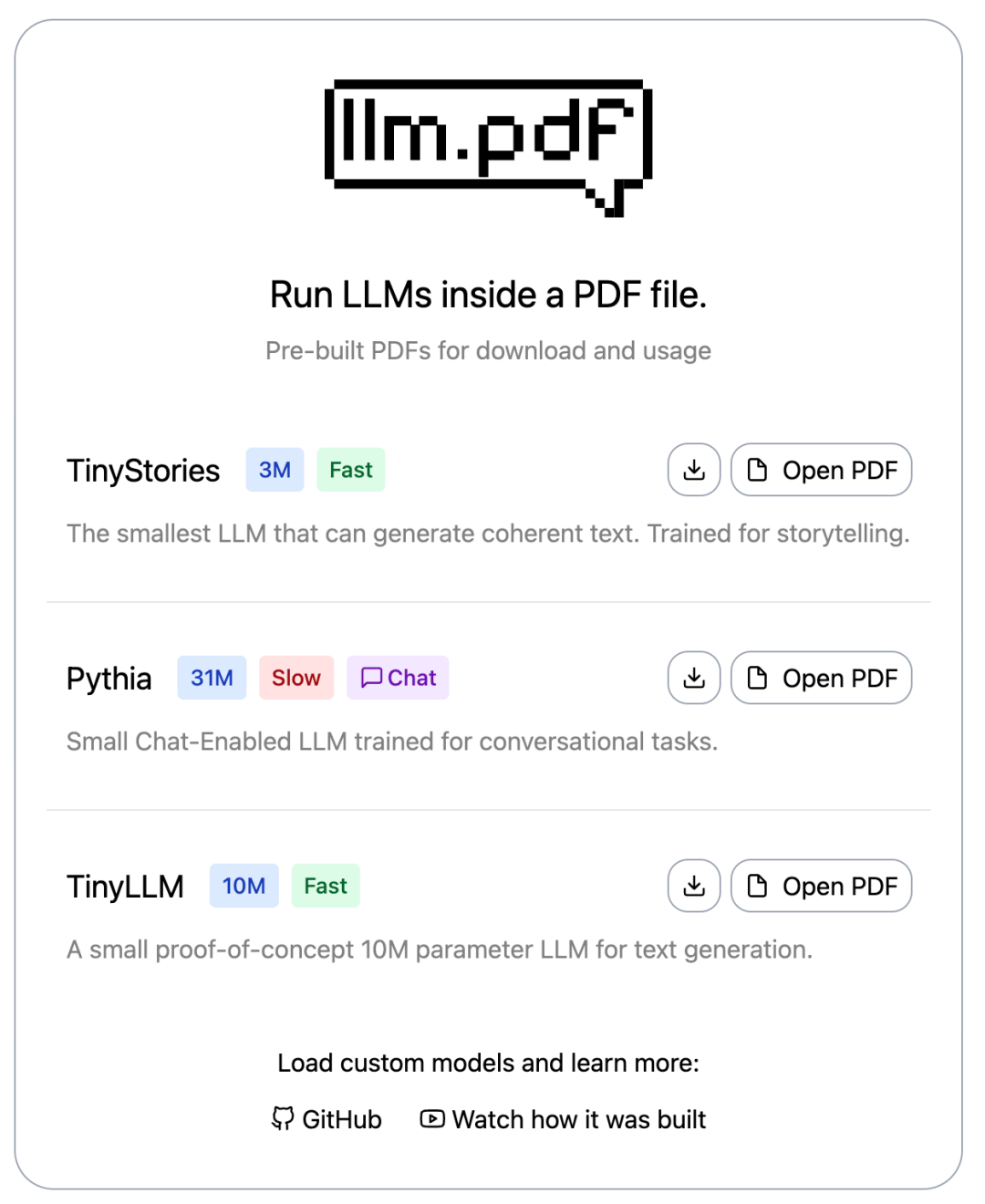

llm.pdf: Embedding Large Language Models Directly into PDF Files

What is llm.pdf?

llm.pdf is a proof-of-concept project showing that an entire Large Language Model (LLM) can be embedded in, and run from, a single PDF file. It uses Emscripten to compile llama.cpp to asm.js, and the resulting JavaScript runs inside the PDF through an older PDF JavaScript injection technique. The model file itself is embedded in the PDF as base64, so inference runs entirely offline, with no external servers or cloud services.

Key Features

- Local LLM Execution: Run LLM inference directly within a PDF file, eliminating the need for server-side processing.
- Custom Model Integration: Use the `scripts/generatePDF.py` script to embed any compatible LLM into a PDF.
- Support for GGUF Quantized Models: Currently supports quantized models in GGUF format, with Q8 quantized models recommended for optimal performance.
- Offline Functionality: Operate entirely offline, which protects privacy and reduces dependency on external resources.
- Open Source: The project is open source, encouraging community contributions and experimentation.
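Since only GGUF models are supported, it can help to confirm that a file really is GGUF before trying to embed it. The following is a minimal sketch, assuming the common GGUF header layout (the 4-byte magic `GGUF` followed by a little-endian unsigned 32-bit version). The helper name `read_gguf_version` is hypothetical and is not part of `scripts/generatePDF.py`.

```python
import struct

# Magic bytes at the start of a GGUF file.
GGUF_MAGIC = b"GGUF"


def read_gguf_version(header: bytes) -> int:
    """Return the GGUF version stored in the first 8 bytes of a file.

    Raises ValueError if the bytes do not start with the GGUF magic.
    Assumes a little-endian file, which is the common case.
    """
    if len(header) < 8:
        raise ValueError("need at least 8 bytes to read a GGUF header")
    if header[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (bad magic bytes)")
    # The version is an unsigned 32-bit integer right after the magic.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

To check a file on disk, read its first eight bytes in binary mode (`open(path, "rb").read(8)`) and pass them to the helper. A `ValueError` means the file should not be handed to the embedding script.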

Technical Overview

llm.pdf compiles llama.cpp to asm.js with Emscripten and injects the compiled code into the PDF using an older PDF JavaScript injection method. The model is embedded in the PDF as a base64-encoded string, which lets the PDF load and run the model locally, so inference executes inside the PDF itself rather than on a server.
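The base64 embedding step can be illustrated with a short sketch. This is not the project's actual `scripts/generatePDF.py`. It only shows, under stated assumptions, how a model's bytes could be turned into a JavaScript string literal and how much larger the result is. The function names and the `MODEL_B64` variable name are hypothetical.

```python
import base64
import math


def base64_length(num_bytes: int) -> int:
    """Number of characters in padded base64 for num_bytes of input."""
    return 4 * math.ceil(num_bytes / 3)


def build_model_script(model_bytes: bytes, var_name: str = "MODEL_B64") -> str:
    """Return a JavaScript snippet holding the model as a base64 string.

    var_name is a hypothetical identifier, not one taken from the project.
    """
    encoded = base64.b64encode(model_bytes).decode("ascii")
    # The base64 alphabet contains no quotes or backslashes, so the
    # encoded text can sit inside a double-quoted literal unescaped.
    return f'var {var_name} = "{encoded}";'


def decode_model_script(script: str) -> bytes:
    """Recover the raw bytes from a snippet built by build_model_script."""
    encoded = script.split('"')[1]
    return base64.b64decode(encoded)
```

Base64 emits four characters for every three input bytes, so the embedded model takes roughly a third more space than the original file. This is one reason smaller quantized models are the practical choice for this approach.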

Project Repository

- GitHub Repository: https://github.com/EvanZhouDev/llm.pdf

Use Cases

- Offline AI Assistants: Build AI-powered assistants that work entirely offline, keeping user data on the local machine.
- Educational Materials: Create interactive educational PDFs that include AI-driven content generation and assistance.
- Document Automation: Develop automated systems for generating and processing documents using embedded LLMs.
- Research and Development: Explore the feasibility and potential applications of embedding AI models within documents.
