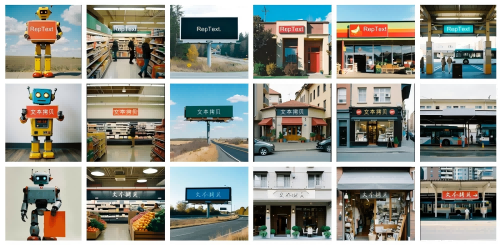

RepText – A multilingual visual text rendering framework jointly launched by Liblib AI and Shakker Labs

What is RepText?

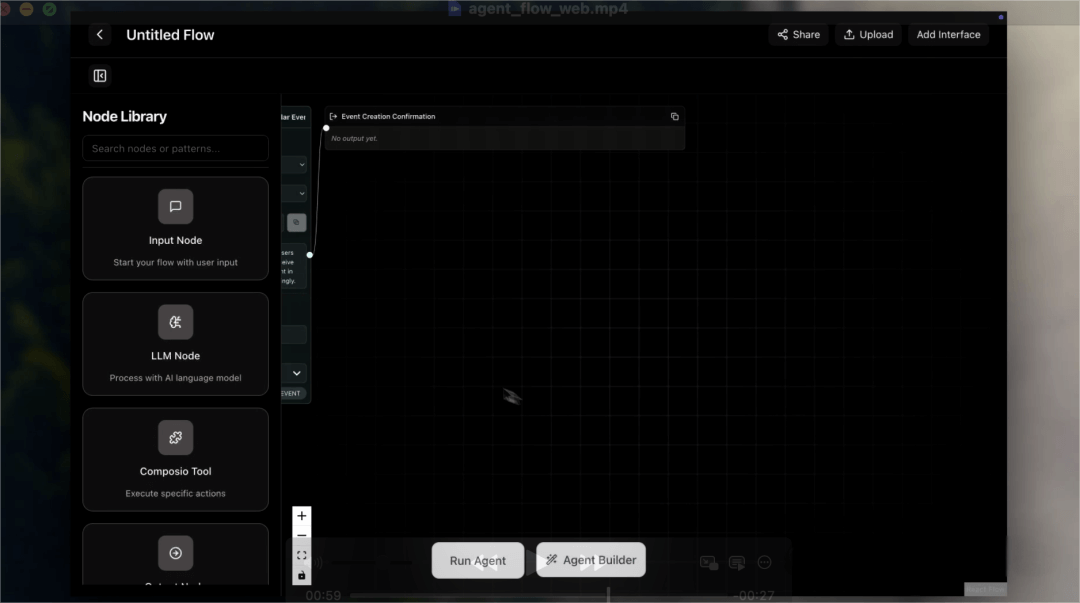

RepText is a multilingual visual text rendering framework developed by Shakker Labs and Liblib AI. Unlike approaches that rely on understanding the semantics of text, RepText achieves high-quality text rendering by replicating glyphs. It builds on a pretrained monolingual text-to-image generation model and combines a ControlNet architecture, Canny edge detection, positional information, and glyph latent replication to accurately render multilingual text in user-specified fonts and positions.
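As a rough illustration of the conditioning inputs mentioned above, the sketch below builds a position map and an edge map from a rendered glyph image and stacks them into one array. Everything in it is an assumption made for illustration: the function names, the `(x0, y0, x1, y1)` box format, the channel layout, and the simple gradient-threshold detector that stands in for Canny edge detection. It is not RepText's actual preprocessing code.

```python
import numpy as np

def position_map(height, width, box):
    """Return a binary map that is 1 inside the text box (x0, y0, x1, y1), 0 elsewhere."""
    x0, y0, x1, y1 = box
    pos = np.zeros((height, width), dtype=np.float32)
    pos[y0:y1, x0:x1] = 1.0
    return pos

def edge_map(glyph, threshold=0.5):
    """Crude edge detector: threshold the gradient magnitude of a grayscale image.

    This is a stand-in for Canny edge detection, not an implementation of it.
    """
    gy, gx = np.gradient(glyph.astype(np.float32))
    magnitude = np.hypot(gx, gy)
    return (magnitude >= threshold).astype(np.float32)

# Hypothetical glyph image: a filled rectangle takes the place of rendered text.
glyph = np.zeros((32, 64), dtype=np.float32)
glyph[8:24, 16:48] = 1.0

pos = position_map(32, 64, box=(16, 8, 48, 24))
edges = edge_map(glyph)

# Stack the two maps as channels of a single conditioning array.
condition = np.stack([edges, pos], axis=0)
```

In a real pipeline the glyph image would come from rasterizing the requested text in the chosen font, and the edge map would typically come from a proper Canny implementation.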

RepText is well-suited for a variety of scenarios, including graphic design and natural scene rendering.

Key Features of RepText

- Multilingual Text Rendering: Supports rendering text in multiple languages (including non-Latin scripts), with user-defined content, font, color, and position.
- Precise Control: Enables users to precisely control the placement and style of text in images, offering a high degree of customization.
- High-Quality Generation: Uses innovative techniques to ensure that rendered text blends naturally with backgrounds, maintaining high clarity and visual accuracy.
- Model Compatibility: Seamlessly integrates with existing text-to-image generation models (e.g., DiT-based models) without requiring retraining of the base model.

Technical Principles of RepText

- Imitation Over Understanding: The core idea of RepText is to mimic glyphs rather than understand text semantics. Text is generated by replicating glyph shapes, akin to how humans learn to write.
- ControlNet Framework: Built on the ControlNet architecture, it uses Canny edges and positional data of the rendered glyphs as conditioning inputs to guide text generation. This avoids relying on a text encoder to understand multilingual text.
- Glyph Latent Replication: During inference, RepText initializes from a noisy glyph latent instead of purely random noise, which guides the model toward more accurate rendering and better color control.
- Region Masking: To prevent interference with non-text areas during generation, RepText employs region masks that restrict the control signal to the text regions, so the background is not distorted.
- Text-Aware Loss: During training, a text-aware loss (based on feature maps from OCR models) is added alongside the diffusion loss to improve the legibility and accuracy of the generated text.
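The last three principles can be sketched with plain arrays. This is a minimal sketch under stated assumptions, not the project's implementation: the function names, the linear mixing rule with its hypothetical `strength` parameter, the tensor shapes, and the use of mean squared error for the text-aware loss are all illustrative choices.

```python
import numpy as np

def glyph_latent_init(glyph_latent, noise, strength):
    """Mix a clean glyph latent with Gaussian noise to get the starting latent.

    strength=1.0 gives pure noise (ordinary random initialization);
    smaller values keep more of the glyph structure. The linear mix is
    an illustrative assumption.
    """
    return (1.0 - strength) * glyph_latent + strength * noise

def masked_control(hidden, control_residual, text_mask):
    """Add the control branch's residual only where text_mask is 1."""
    return hidden + text_mask * control_residual

def text_aware_loss(ocr_feat_pred, ocr_feat_target):
    """Mean squared error between OCR feature maps (assumed loss form)."""
    return float(np.mean((ocr_feat_pred - ocr_feat_target) ** 2))

rng = np.random.default_rng(0)

# Hypothetical encoded glyph: nonzero only inside the text region.
glyph_latent = np.zeros((4, 8, 8), dtype=np.float32)
glyph_latent[:, 2:6, 2:6] = 1.0
noise = rng.standard_normal(glyph_latent.shape).astype(np.float32)
latent = glyph_latent_init(glyph_latent, noise, strength=0.9)

# The region mask broadcasts over channels; the background stays untouched.
text_mask = np.zeros((1, 8, 8), dtype=np.float32)
text_mask[:, 2:6, 2:6] = 1.0
hidden = np.zeros((4, 8, 8), dtype=np.float32)
residual = np.ones((4, 8, 8), dtype=np.float32)
out = masked_control(hidden, residual, text_mask)
```

The point of the mask is visible in `out`: positions outside the text box keep the base model's features unchanged, while positions inside it receive the control signal.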

Project Links

- Official Website: https://reptext.github.io/
- GitHub Repository: https://github.com/Shakker-Labs/RepText
- arXiv Paper: https://arxiv.org/pdf/2504.19724

Application Scenarios

- Graphic Design: Ideal for designing greeting cards, posters, brochures, etc., with fine control over font, color, and placement.
- Natural Scene Rendering: Generates text in natural environments, such as store signs, billboards, and road signs, supporting multiple languages and font styles.
- Artistic Creation: Enables the generation of artistic fonts and complex layouts, including calligraphy-style text and decorative typography, serving as a source of inspiration and material for artists.
- Digital Content Creation: Accelerates content generation in areas like video games, animation, and web design by producing context-appropriate text quickly.
- Multilingual Content Localization: Supports localized visual text rendering for globalized digital content, making it easy to produce versions in different languages.
