Transformer Lab: The All-in-One Platform for Local Large Language Model Development

What is Transformer Lab?

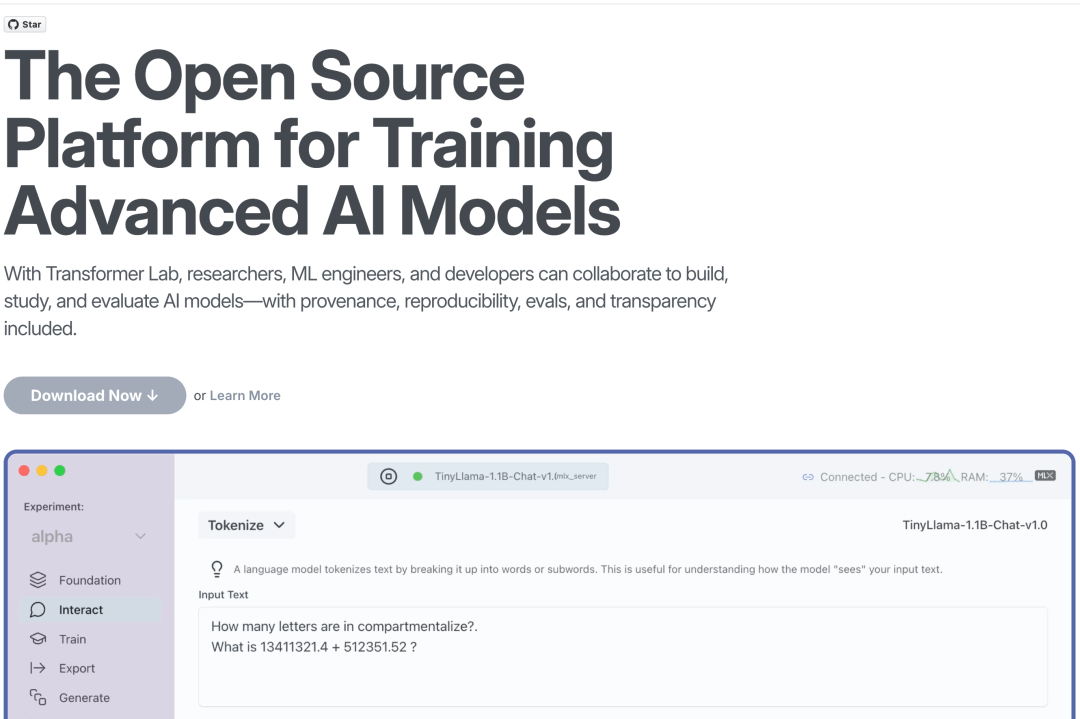

Transformer Lab is an open-source platform supported by Mozilla Builders, designed to provide researchers, machine learning engineers, and developers with an integrated environment for building, training, fine-tuning, and evaluating large language models (LLMs). The platform emphasizes model traceability, reproducibility, evaluability, and transparency, enabling users to efficiently develop and test AI models locally on their machines.

Key Features

- One-Click Download of Popular Models: Download hundreds of popular models, including Llama 3, Phi-3, Mistral, Mixtral, Gemma, and Command-R. Models can also be downloaded from Hugging Face and converted between formats such as MLX and GGUF.

- Model Interaction and Inference: Provides chat, completion, preset prompts, chat history, generation parameter tuning, batch inference, embedding computation, LogProbs visualization, tokenizer visualization, and inference logs.

- Training and Fine-Tuning Support: Supports pretraining from scratch, fine-tuning, DPO, ORPO, SimPO, reward modeling, and GRPO.

- Comprehensive Evaluation Tools: Integrates the EleutherAI LM Evaluation Harness, LLM-as-judge evaluation, objective metrics, and red-teaming evaluations, along with visualizations and charts of the results.

- Plugin Support: Offers an extensive plugin library, and users can write their own plugins to extend the platform.

- Retrieval-Augmented Generation (RAG): Provides a drag-and-drop interface for adding files, and works with inference engines such as Apple MLX and HF Transformers.

- Cross-Platform and Multi-GPU Support: Runs on Windows, macOS, and Linux. Supports training and inference on Apple Silicon via MLX and on CUDA GPUs, as well as multi-GPU training.
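To make the LogProbs feature in the list above a little more concrete, here is a minimal sketch of what a token log-probability is: the log-softmax of the model's raw scores (logits) over the vocabulary. The function name, the three-word vocabulary, and the logit values are all invented for illustration; this is not how Transformer Lab computes or displays these values.

```python
import math

def log_softmax(logits):
    """Convert raw scores into log-probabilities (numerically stable)."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]

# Hypothetical toy vocabulary and logits for one generation step.
vocab = ["cat", "dog", "fish"]
logits = [2.0, 1.0, 0.1]

logprobs = log_softmax(logits)
for token, lp in zip(vocab, logprobs):
    # Values closer to 0 mean the model considers the token more likely.
    print(f"{token:>5}  logprob={lp:.3f}  prob={math.exp(lp):.3f}")
```

A LogProbs view typically shows these per-token values next to the generated text, so low-confidence tokens stand out.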

Technical Principles

- Modular Architecture: Transformer Lab consists of two main components: the Transformer Lab App (a graphical client application) and the Transformer Lab API (a server that runs the machine learning workloads).

- Plugin System: Platform features, including training, model serving, and format conversion, are built on a plugin system, so users can add or customize plugins to suit their needs.

- Automatic Server Configuration: The server is configured automatically, so users can focus on model development and testing.
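The plugin idea described above can be sketched in a few lines. This is a generic registry pattern under stated assumptions, not Transformer Lab's actual plugin API: `PluginRegistry`, the `"trainer"` and `"exporter"` kinds, and both toy plugins are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical types and names throughout; not Transformer Lab's real API.
PluginFn = Callable[[dict], dict]

@dataclass
class PluginRegistry:
    """Maps a (kind, name) pair to the callable that does the work."""
    _plugins: Dict[Tuple[str, str], PluginFn] = field(default_factory=dict)

    def register(self, kind: str, name: str) -> Callable[[PluginFn], PluginFn]:
        """Decorator that stores a function under (kind, name)."""
        def decorator(fn: PluginFn) -> PluginFn:
            self._plugins[(kind, name)] = fn
            return fn
        return decorator

    def run(self, kind: str, name: str, params: dict) -> dict:
        """Look up a plugin and call it with the given parameters."""
        try:
            plugin = self._plugins[(kind, name)]
        except KeyError:
            raise KeyError(f"no {kind} plugin named {name!r}") from None
        return plugin(params)

    def names(self, kind: str) -> List[str]:
        """List the registered plugin names of one kind, sorted."""
        return sorted(n for k, n in self._plugins if k == kind)

registry = PluginRegistry()

@registry.register("trainer", "toy_finetune")
def toy_finetune(params: dict) -> dict:
    # Stand-in for a real training job; it only echoes its configuration.
    return {"status": "done", "epochs": params.get("epochs", 1)}

@registry.register("exporter", "toy_gguf")
def toy_export(params: dict) -> dict:
    # Stand-in for a format converter; it only builds an output file name.
    return {"status": "done", "output": params["model"] + ".gguf"}

result = registry.run("trainer", "toy_finetune", {"epochs": 3})
print(result)
print(registry.names("exporter"))
```

The point of the pattern is that the core application only needs to know the registry interface; adding a new training method or export format means registering one more function rather than changing the core.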

Project Links

- Official Website: https://transformerlab.ai/

- GitHub Repository: https://github.com/transformerlab/transformerlab-app

- Discord Community: https://discord.gg/transformerlab

Application Scenarios

- Research and Education: Gives academic researchers and educators a local platform for running experiments and teaching about large language models.

- Enterprise In-House Development: Enterprises can develop and test proprietary language models locally, which keeps sensitive data on their own machines.

- Personalized Assistant Development: Developers can build custom AI assistants tailored to specific business needs or personal preferences.

- Model Evaluation and Comparison: The built-in evaluation tools make it possible to compare different models and tune their performance.
