FlexiAct – A motion transfer model jointly launched by Tsinghua University and Tencent

What is FlexiAct?

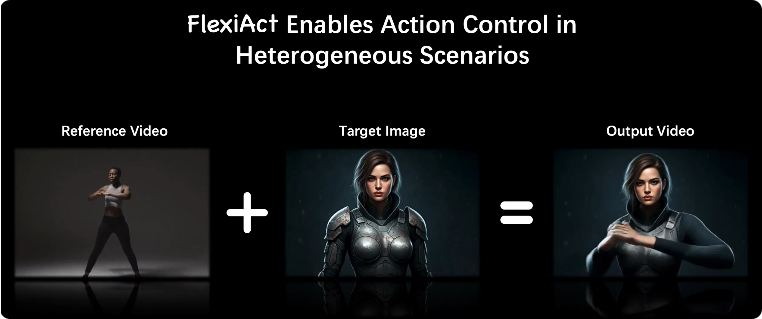

FlexiAct is a novel motion transfer model jointly developed by Tsinghua University and Tencent ARC Lab. Given a target image, FlexiAct can transfer motions from a reference video to the target subject, achieving accurate motion adaptation and appearance consistency even in heterogeneous scenarios with significant spatial structure differences or across domains. The model introduces a lightweight RefAdapter module and a Frequency-Aware Motion Extraction (FAE) module to overcome the limitations of existing methods related to layout, viewpoint, and skeletal structure discrepancies, while preserving identity consistency. FlexiAct demonstrates outstanding performance in motion transfer for both humans and animals and shows promising potential for widespread applications.

Core Features of FlexiAct

-

Cross-subject Motion Transfer: Supports transferring motions from one person to another, or from a person to an animal.

-

Appearance Consistency: Maintains the target subject’s original appearance (e.g., clothing, hairstyle) while transferring motions.

-

Flexible Spatial Structure Adaptation: Achieves natural motion transfer even when the reference video and target image differ significantly in layout, viewpoint, and skeletal structure.

Technical Principles of FlexiAct

-

RefAdapter (Spatial Structure Adapter):

RefAdapter is a lightweight image-conditioned adapter designed to address spatial structure differences between the reference video and the target image. During training, random video frames are selected as conditional images to maximize spatial structure diversity. This allows the model to adapt to various poses, layouts, and viewpoints while maintaining appearance consistency. A small number of trainable parameters (such as LoRA modules) are injected into the MMDiT layers of CogVideoX-I2V to achieve flexible spatial adaptation, avoiding the rigid constraints of traditional methods. -

Frequency-Aware Motion Extraction (FAE):

FAE is an innovative motion extraction module that completes motion extraction directly during the denoising process, without relying on separate spatiotemporal architectures. FAE observes that during different timesteps in the denoising process, the model focuses differently on motion (low frequency) and appearance details (high frequency). At earlier timesteps, the model pays more attention to motion information; at later timesteps, it focuses on appearance details. FAE dynamically adjusts attention weights to prioritize motion information early and focus on appearance details later, enabling precise motion extraction and control.

Project Links

-

Project Website: https://shiyi-zh0408.github.io/projectpages/FlexiAct/

-

GitHub Repository: https://github.com/shiyi-zh0408/FlexiAct

-

HuggingFace Model Hub: https://huggingface.co/shiyi0408/FlexiAct

-

arXiv Paper: https://arxiv.org/pdf/2505.03730

Application Scenarios of FlexiAct

-

Film Production: Rapidly generate realistic character motions, reducing shooting costs.

-

Game Development: Create diverse character animations to enhance gaming experiences.

-

Advertising & Marketing: Generate virtual spokesperson movements to boost ad appeal.

-

Education & Training: Produce instructional or rehabilitation motion content to aid learning and recovery.

-

Entertainment & Interaction: Enable users to create engaging videos, enhancing entertainment value.

Related Posts