HealthBench: Revolutionizing AI Evaluation in Healthcare with Realistic Benchmarks

What is HealthBench?

HealthBench is a comprehensive benchmark introduced by OpenAI to evaluate the performance of AI systems in healthcare settings. Developed in collaboration with 262 physicians from 60 countries, it encompasses 5,000 realistic health-related conversations. Each conversation is accompanied by a custom rubric created by medical professionals to assess the quality of AI-generated responses. The primary goal of HealthBench is to ensure that AI models are not only accurate but also safe and effective when applied to real-world healthcare scenarios.

Key Features of HealthBench

- Realistic Health Conversations: The dataset includes 5,000 multi-turn, multilingual dialogues that simulate how people, both laypersons and healthcare professionals, bring health questions to an AI model. These conversations cover a wide range of medical specialties and are designed to reflect the complexity of real-life clinical scenarios.

- Physician-Created Evaluation Rubrics: Each conversation is evaluated using a rubric developed by practicing physicians, ensuring that the assessments align with clinical standards and priorities.

- Focus on Real-World Impact: HealthBench emphasizes meaningful evaluations that go beyond theoretical knowledge, capturing the nuances of health conversations and clinical decision-making.

- Encouraging Continuous Improvement: By highlighting areas where current AI models can improve, HealthBench serves as a tool for developers to enhance the capabilities and safety of their systems in healthcare applications.

Technical Principles Behind HealthBench

-

Dataset Composition:

The conversations in HealthBench are generated through a combination of synthetic methods and human adversarial testing. This approach ensures a diverse and challenging set of scenarios that test the limits of AI models. -

Evaluation Methodology:

AI responses are assessed based on rubrics that consider factors such as accuracy, relevance, and safety. This structured evaluation framework allows for consistent and objective comparisons across different models. -

Model Performance Insights:

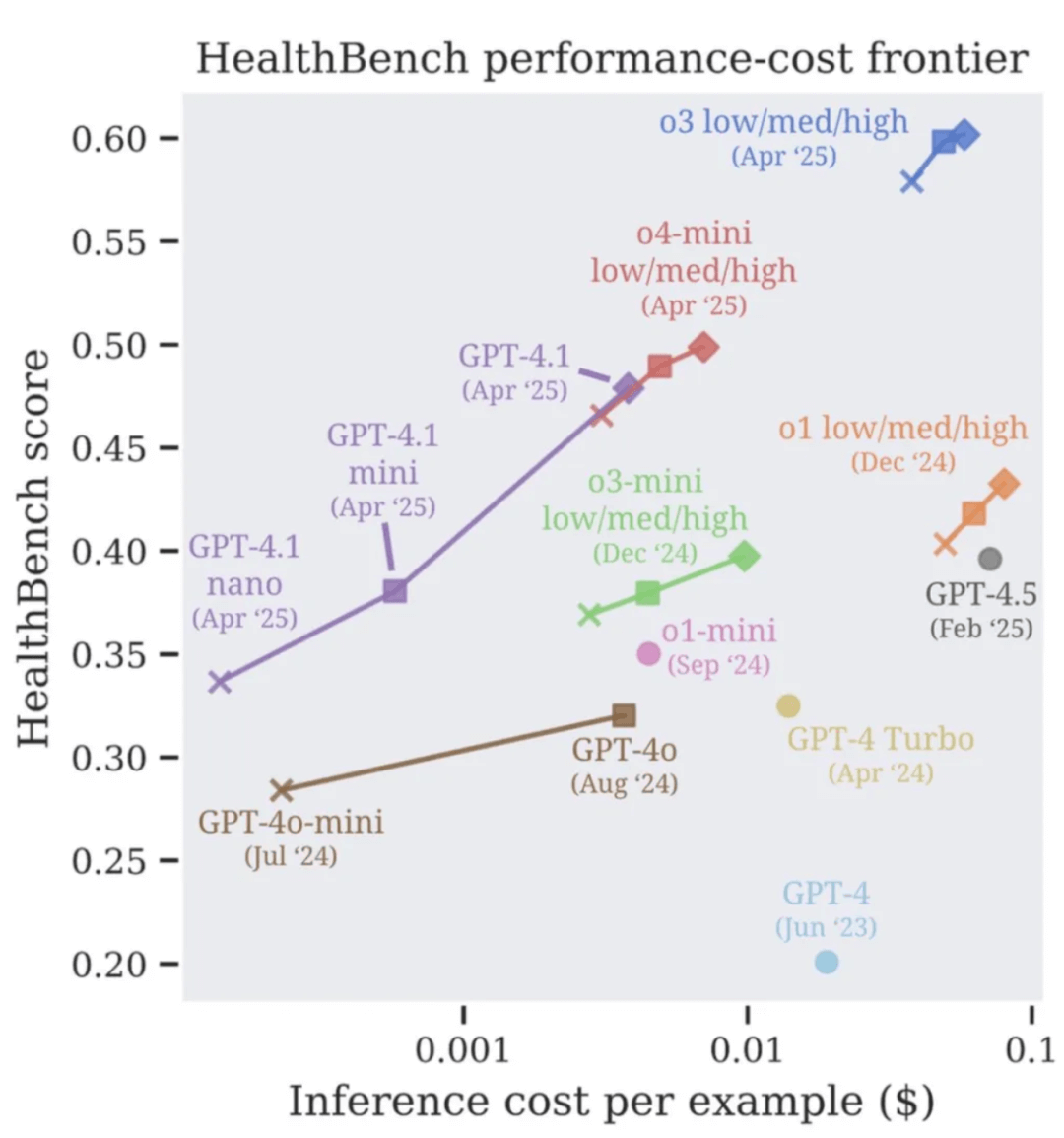

HealthBench provides detailed analyses of how various AI models perform across different medical contexts, offering insights into their strengths and areas needing improvement.
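To make the rubric-based evaluation more concrete, here is a minimal sketch in Python. It assumes each rubric criterion carries a point value (negative for harmful behavior) and that a physician or grader model has already judged which criteria a response meets. The `Criterion` class, the `rubric_score` function, the example rubric, and the normalization (earned points divided by the maximum achievable positive points, clipped to the range 0 to 1) are illustrative assumptions, not an official HealthBench implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One rubric item: a description and the points it is worth."""
    description: str
    points: int  # positive for desirable behavior, negative for harmful behavior


def rubric_score(criteria: list[Criterion], met: list[bool]) -> float:
    """Return the share of achievable points earned, clipped to [0, 1]."""
    if len(criteria) != len(met):
        raise ValueError("need one met/not-met judgment per criterion")
    # Only positive criteria count toward the maximum achievable total.
    max_points = sum(c.points for c in criteria if c.points > 0)
    if max_points == 0:
        raise ValueError("rubric needs at least one positive criterion")
    # Met negative criteria subtract from the earned total.
    earned = sum(c.points for c, is_met in zip(criteria, met) if is_met)
    return min(1.0, max(0.0, earned / max_points))


# Hypothetical rubric for a chest-pain conversation (illustrative only).
rubric = [
    Criterion("Advises seeking emergency care for chest pain", 10),
    Criterion("Asks about symptom duration", 5),
    Criterion("Recommends a specific prescription dose without context", -8),
]
judgments = [True, False, True]  # would come from a physician or grader model
print(rubric_score(rubric, judgments))
```

In this example the response earns 10 points, loses 8 for the harmful recommendation, and is scored against 15 achievable points. Clipping keeps a response that triggers mostly negative criteria from dropping below zero.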

Project Access

- Official Website: https://openai.com/index/healthbench/

Application Scenarios

- AI Model Development: Developers can use HealthBench to benchmark and refine AI models intended for healthcare applications, checking them against physician-defined standards.

- Medical Education: Educational institutions can incorporate HealthBench into curricula to teach students about the role of AI in healthcare and how it is evaluated.

- Healthcare Policy and Regulation: Regulatory bodies can use insights from HealthBench to inform guidelines and standards for AI deployment in medical settings.

- Clinical Decision Support: Healthcare providers can assess the reliability of AI tools intended to assist in clinical decision-making.
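For the model development scenario, one way to compare systems is to average per-conversation scores by model and by category. The sketch below assumes each result is a dictionary with `model`, `theme`, and `score` keys. These field names, the `mean_score_by_theme` function, and the sample data are hypothetical and are not taken from HealthBench itself.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_theme(results: list[dict]) -> dict[str, dict[str, float]]:
    """Group per-conversation scores by model and theme, then average them."""
    grouped: dict[tuple[str, str], list[float]] = defaultdict(list)
    for row in results:
        grouped[(row["model"], row["theme"])].append(row["score"])
    summary: dict[str, dict[str, float]] = defaultdict(dict)
    for (model, theme), scores in grouped.items():
        summary[model][theme] = mean(scores)
    # Convert to plain dicts so missing keys raise instead of being created.
    return {model: dict(themes) for model, themes in summary.items()}


# Hypothetical results; model names, themes, and scores are made up.
results = [
    {"model": "model-a", "theme": "emergency", "score": 0.50},
    {"model": "model-a", "theme": "emergency", "score": 0.75},
    {"model": "model-a", "theme": "data-tasks", "score": 0.25},
    {"model": "model-b", "theme": "emergency", "score": 0.50},
]
summary = mean_score_by_theme(results)
for model, themes in summary.items():
    print(model, themes)
```

Breaking scores out by category, rather than reporting a single overall average, makes it easier to see where a model is weak, for example strong on emergency referrals but poor on data tasks.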
