LightLab – An image light source control model from Google and other institutions

What is LightLab?

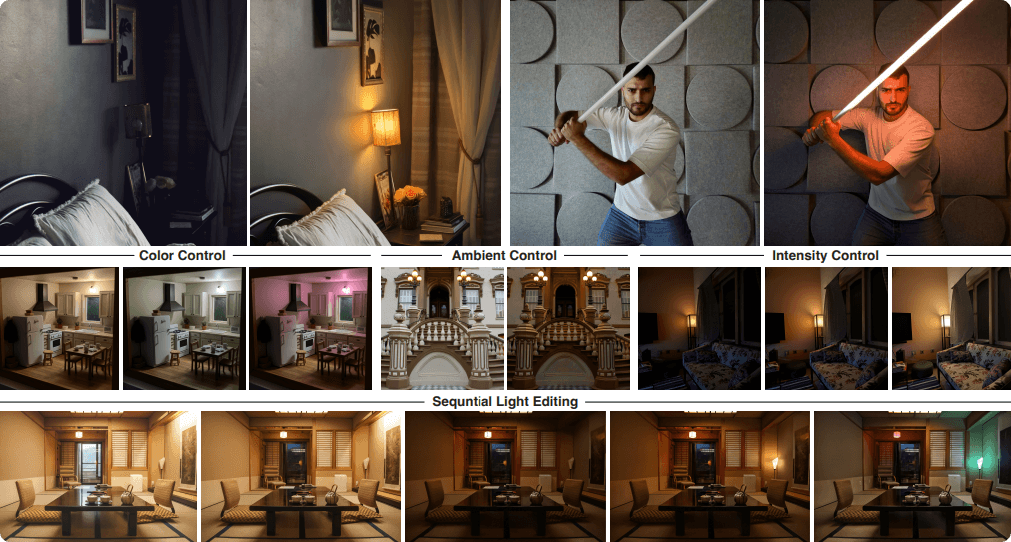

LightLab is a diffusion-based lighting control model developed by Google and other institutions. It offers fine-grained, parametric control over the light sources in a single image: users can adjust the intensity and color of a light source, insert virtual light sources, and change the ambient light level. The model is trained on a small number of real image pairs combined with a large corpus of synthetically rendered images, and it generates physically plausible lighting effects such as shadows and reflections. An interactive demo interface exposes the lighting parameters as sliders, so sophisticated lighting edits can be made intuitively. LightLab handles a wide variety of scenes, which makes it useful for photography and image editing.

Key Features of LightLab

- Light Source Intensity Control: Users can precisely adjust the intensity of specific light sources within an image, ranging from completely off to any desired brightness level.
- Light Source Color Control: Allows users to alter the color of a light source, supporting a variety of color temperatures and custom RGB values.
- Ambient Light Control: Users can simulate different ambient lighting conditions by adjusting the overall ambient light intensity.
- Virtual Light Insertion: Enables the addition of virtual light sources that generate realistic lighting effects based on the scene’s context.
- Sequential Editing: Supports multiple consecutive edits to the same image, with each new lighting adjustment building on the previous one.

Technical Foundations of LightLab

- Diffusion Models: Uses the generative capabilities of diffusion models to learn and synthesize realistic lighting effects.
- Data Generation: Training data combines a small number of real photo pairs (capturing complex geometry and lighting) with a large volume of synthetically rendered images (offering diverse lighting conditions).
- Linear Light Model: Because light adds linearly, simple additive and subtractive operations on images are enough to compose image sequences with varying light intensities and colors.
- Conditional Diffusion Model: The diffusion process is conditioned on user-defined lighting parameters (such as source intensity, color, and ambient light), enabling customized image generation.
- Tone Mapping: Applies tone mapping so that the generated images have visually appropriate exposure and contrast.
- Parametric Control: Provides parameterized controls for light properties, allowing users to make intuitive adjustments via UI elements like sliders.
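The linear light model and tone mapping items above can be illustrated with a minimal NumPy sketch. It assumes two linear-RGB images of the same scene, one with the target light off and one with it on, and composes a relit image by scaling the ambient term and the light's contribution separately. The function names (`extract_light_change`, `compose_relit`, `tone_map`), the parameter names, and the Reinhard-style tone curve are illustrative assumptions, not LightLab's actual code or its tone-mapping operator.

```python
import numpy as np


def extract_light_change(img_on, img_off):
    """Isolate the target light's contribution by subtraction (linear RGB).

    Negative values can only come from noise, so they are clipped to zero.
    """
    on = np.asarray(img_on, dtype=np.float64)
    off = np.asarray(img_off, dtype=np.float64)
    return np.clip(on - off, 0.0, None)


def compose_relit(ambient, light_change, alpha=1.0, gamma=1.0,
                  color=(1.0, 1.0, 1.0)):
    """Compose a relit image as alpha * ambient + gamma * color * light_change.

    alpha scales the ambient light, gamma scales the target light's
    intensity, and color is a per-channel RGB multiplier for that light.
    All inputs are assumed to be H x W x 3 arrays in linear RGB.
    """
    ambient = np.asarray(ambient, dtype=np.float64)
    light_change = np.asarray(light_change, dtype=np.float64)
    tint = np.asarray(color, dtype=np.float64).reshape(1, 1, 3)
    return alpha * ambient + gamma * tint * light_change


def tone_map(linear, exposure=1.0):
    """Map linear radiance to a displayable [0, 1) range.

    Uses a simple Reinhard curve x / (1 + x) followed by a 1/2.2 gamma.
    This is a stand-in operator chosen for illustration only.
    """
    scaled = exposure * np.clip(np.asarray(linear, dtype=np.float64), 0.0, None)
    compressed = scaled / (1.0 + scaled)
    return compressed ** (1.0 / 2.2)
```

For example, calling `compose_relit(img_off, extract_light_change(img_on, img_off), alpha=0.5, gamma=2.0, color=(1.0, 0.5, 0.0))` would halve the ambient light while doubling the target light and tinting it orange; passing the result through `tone_map` gives a displayable image. Sweeping `gamma` and `alpha` over a range is one way such pairs could yield a whole sequence of lighting variations.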

Project Links

- Project Website: https://nadmag.github.io/LightLab/
- Hugging Face Paper Page: https://huggingface.co/papers/2505.09608
- arXiv Paper: https://arxiv.org/pdf/2505.09608

Application Scenarios

- Photography Post-Processing: Adjust lighting in photos to enhance or change illumination effects after shooting.
- Visual Effects (VFX): Quickly generate scenes under varied lighting conditions for film and animation.
- Interior Design: Simulate the effect of different lighting layouts within a room.
- Game Development: Fine-tune lighting in game environments to improve realism and immersion.
- Advertising Production: Highlight product features and create visually appealing promotional images.
