UQLM: The Secret Weapon for Making Large Language Models More Trustworthy

What is UQLM?

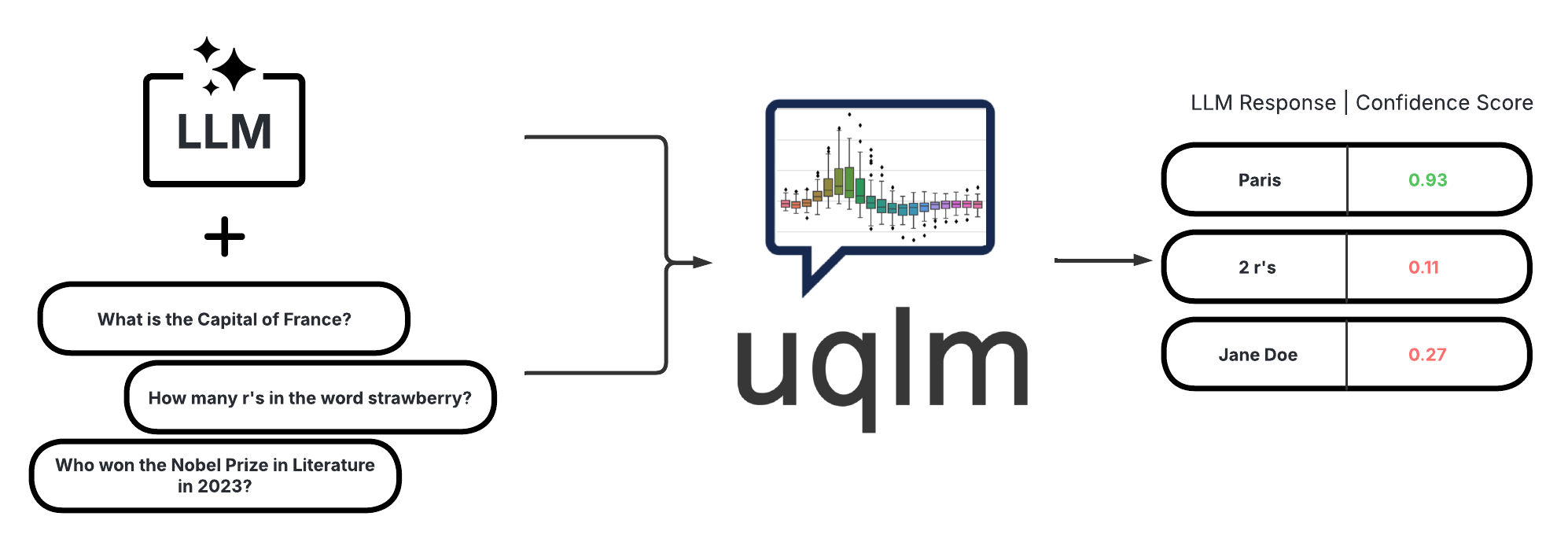

UQLM (Uncertainty Quantification for Language Models) is an open-source Python library developed by CVS Health. It detects hallucinations in large language model (LLM) outputs using uncertainty quantification techniques. UQLM gives developers and researchers a suite of scorers for assessing the reliability of LLM responses, which helps them flag unreliable outputs before those outputs spread misinformation.

Key Features

- Multiple Scorer Types: Includes black-box, white-box, LLM-as-a-judge, and ensemble scorers to meet different evaluation needs.
- Response-Level Confidence Scoring: Each scorer returns a confidence score between 0 and 1 for every response. Higher values indicate a lower likelihood that the response contains a hallucination or error.
- Compatibility with Various LLMs: Designed to work with a wide range of LLMs. White-box scorers additionally require a model that exposes token probabilities.
- Out-of-the-Box Usability: Easy-to-use interfaces allow quick integration into existing workflows.
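A 0-to-1 confidence score is most useful when it drives a decision. The sketch below shows one common pattern: deliver confident responses and route the rest to human review. It is not part of UQLM. `ScoredResponse`, `route`, and the 0.7 threshold are hypothetical placeholders, and a real threshold would have to be chosen for the task and the risk involved.

```python
from dataclasses import dataclass


@dataclass
class ScoredResponse:
    # Hypothetical container: the LLM's text plus a confidence score in [0, 1]
    # produced by some scorer (higher = more likely to be trustworthy).
    text: str
    confidence: float


def route(item: ScoredResponse, threshold: float = 0.7) -> str:
    """Deliver confident responses; send the rest to human review.

    The default threshold of 0.7 is an arbitrary illustration, not a UQLM default.
    """
    if not 0.0 <= item.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return "deliver" if item.confidence >= threshold else "human_review"
```

Raising the threshold sends more responses to reviewers and lets fewer errors through. Lowering it does the opposite.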

Technical Principles

- Black-box Scorers: Measure how consistent several responses generated for the same prompt are with one another, and use that consistency to estimate uncertainty. They need no access to the model's internals.
- White-box Scorers: Use the token probabilities of the generated response to estimate uncertainty. No extra generations are needed, so they add little latency or cost. However, they work only with models that expose token probabilities.
- LLM-as-a-Judge Scorers: Use one or more LLMs to review and critique the original model's output. Because both the judges and their instructions can be chosen, this approach is highly customizable.
- Ensemble Scorers: Combine the results of multiple scorers (for example, through a weighted average) into a single, more robust confidence estimate. This makes them well suited to high-stakes applications.
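The minimal, self-contained sketch below shows the kind of arithmetic each of the four scorer families performs. It is not UQLM's API, and every function name in it is hypothetical. It also makes several simplifying assumptions:

- The black-box scorer uses `difflib`'s character-level similarity as a crude stand-in for the semantic comparison a real consistency scorer would perform.
- The white-box functions assume you already have per-token log-probabilities from a model that exposes them.
- The judge and ensemble functions assume every input has already been mapped to [0, 1].

```python
import math
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean


def black_box_consistency(responses: list[str]) -> float:
    """Mean pairwise similarity of responses sampled for the same prompt.

    Stand-in only: SequenceMatcher compares characters, not meaning.
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses to measure consistency")
    return mean(SequenceMatcher(None, a, b).ratio()
                for a, b in combinations(responses, 2))


def white_box_min_prob(token_logprobs: list[float]) -> float:
    """Lowest token probability: a single uncertain token lowers confidence."""
    return math.exp(min(token_logprobs))


def white_box_normalized_prob(token_logprobs: list[float]) -> float:
    """Geometric mean of token probabilities (length-normalized)."""
    return math.exp(mean(token_logprobs))


def judge_score(verdicts: list[float]) -> float:
    """Average of judge verdicts, each assumed already mapped to [0, 1]."""
    return mean(verdicts)


def ensemble(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of component scores; weights must be positive."""
    total = sum(weights[name] for name in scores)
    return sum(weights[name] * s for name, s in scores.items()) / total
```

The two white-box functions behave differently. The minimum token probability flags a response as soon as any single token is uncertain. The length-normalized probability keeps longer responses from being penalized merely for having more tokens. The ensemble weights are placeholders that would need to be chosen or tuned for a given task.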

Project Links

- GitHub Repository: https://github.com/cvs-health/uqlm
- Official Documentation: https://cvs-health.github.io/uqlm/latest/index.html

Use Cases

- Healthcare: Flag potentially inaccurate AI-generated medical information for review, reducing the risk of misinformation.
- Legal Document Drafting: Evaluate the reliability of generated legal text and help legal professionals prioritize what to review.
- Educational Content Creation: Detect potential inaccuracies in educational materials to improve the quality of learning resources.
- Customer Service Automation: Flag automated replies that may be incorrect before they reach customers, improving customer satisfaction.
