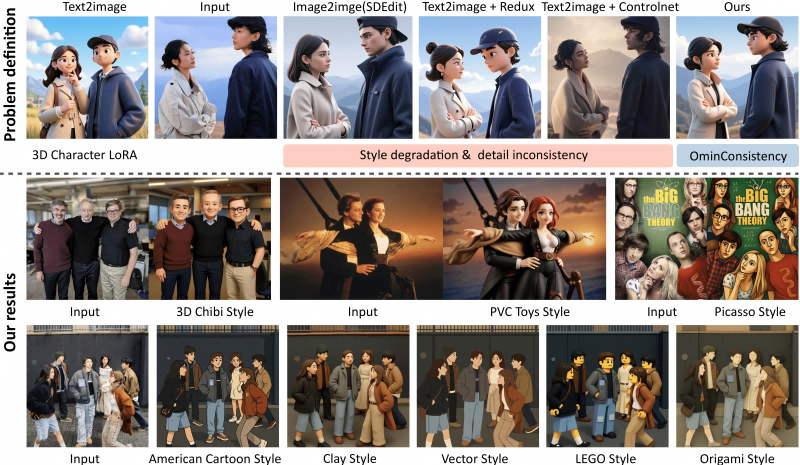

OmniConsistency – An image style transfer model launched by the National University of Singapore

What is OmniConsistency?

OmniConsistency is an image style transfer model developed by the National University of Singapore, designed to address the consistency problem of stylized images in complex scenes. The model is trained on a large-scale paired stylized dataset using a two-stage training strategy that decouples style learning from consistency learning. It maintains semantic, structural, and detail consistency across multiple styles. OmniConsistency supports seamless integration with LoRA (Low-Rank Adaptation) modules of arbitrary styles, enabling efficient and flexible stylization effects. In experiments, OmniConsistency demonstrates performance comparable to GPT-4o while offering higher flexibility and generalization ability.

Main Features of OmniConsistency

- Style Consistency: Preserves stylization quality across multiple styles, avoiding style degradation.
- Content Consistency: Preserves the semantics and details of the original image during stylization to ensure content integrity.
- Style-Agnostic: Seamlessly integrates with LoRA modules of any style, supporting diverse style transfer tasks.
- Flexibility: Supports flexible layout control without relying on traditional geometric constraints such as edge maps, sketches, or pose maps.

Technical Principles of OmniConsistency

- Two-Stage Training Strategy:
  - Stage One (Style Learning): Independently trains multiple style-specific LoRA modules, each capturing unique details of a particular style.
  - Stage Two (Consistency Learning): Trains a consistency module on paired data by dynamically switching between different style LoRA modules. This ensures the consistency module focuses on structural and semantic consistency without absorbing specific style features.
- Consistency LoRA Module: Introduces low-rank adaptation (LoRA) modules in the conditional branch only, avoiding interference with the main network’s stylization capabilities. Uses causal attention to ensure internal interaction among condition tokens while keeping the main branch (noise and text tokens) clean for causal modeling.
- Conditional Token Mapping (CTM): Guides high-resolution generation with low-resolution conditional images. Uses a mapping mechanism to ensure spatial alignment and reduce memory and computational cost.
- Feature Reuse: Caches intermediate features of conditional tokens during the diffusion process to avoid redundant computation and improve inference efficiency.
- Data-Driven Consistency Learning: Constructs a high-quality paired dataset consisting of 2,600 image pairs across 22 different styles. Uses a data-driven approach to learn semantic and structural consistency mappings.
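
The attention pattern described for the Consistency LoRA module can be pictured as a boolean mask. The sketch below is a minimal illustration in plain Python, not the project's code. The function name `build_attention_mask`, the token ordering (text, noise, condition), and the rule that main-branch tokens may read condition tokens are all assumptions made for this example; the description above only states that condition tokens interact among themselves and that the main branch stays clean.

```python
def build_attention_mask(n_text: int, n_noise: int, n_cond: int) -> list[list[bool]]:
    """Return mask[q][k], True when query token q may attend to key token k.

    Assumed token order (hypothetical): text tokens, then noise tokens,
    then condition tokens.
    """
    n_main = n_text + n_noise          # main branch = text + noise tokens
    total = n_main + n_cond
    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        for k in range(total):
            if q >= n_main:
                # Condition queries only see other condition tokens,
                # so nothing from the main branch leaks into them.
                mask[q][k] = k >= n_main
            else:
                # Assumption: main-branch queries may attend to every token,
                # including the condition tokens that guide generation.
                mask[q][k] = True
    return mask


mask = build_attention_mask(n_text=2, n_noise=3, n_cond=2)
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

Under these assumptions the condition tokens never depend on the noise or text tokens, which is also what would make it possible to compute their features once and reuse them across diffusion steps.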

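One way to picture Conditional Token Mapping is as a rescaling of position indices: each token of the low-resolution condition image is assigned the coordinate it would occupy on the high-resolution token grid. The sketch below assumes a simple proportional mapping; the function name `map_condition_positions` and the grid sizes are hypothetical, and the project's actual mapping may differ.

```python
def map_condition_positions(
    cond_h: int, cond_w: int, target_h: int, target_w: int
) -> list[list[tuple[int, int]]]:
    """Map each (row, col) of a cond_h x cond_w token grid onto the
    target_h x target_w grid by proportional scaling (an assumption)."""
    return [
        [((i * target_h) // cond_h, (j * target_w) // cond_w) for j in range(cond_w)]
        for i in range(cond_h)
    ]


# A 2x2 condition grid aligned with a 4x4 generation grid.
positions = map_condition_positions(2, 2, 4, 4)
print(positions)  # [[(0, 0), (0, 2)], [(2, 0), (2, 2)]]

# The condition branch carries 4 tokens instead of 16, which is where
# the memory and compute savings would come from.
print(2 * 2, "condition tokens vs", 4 * 4, "target tokens")
```

Because every condition token carries a coordinate on the target grid, the model can keep the two images spatially aligned even though the condition image has fewer tokens.
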
Project Resources

- GitHub Repository: https://github.com/showlab/OmniConsistency
- HuggingFace Model Hub: https://huggingface.co/showlab/OmniConsistency
- arXiv Technical Paper: https://arxiv.org/pdf/2505.18445
- Online Demo: https://huggingface.co/spaces/yiren98/OmniConsistency

Application Scenarios of OmniConsistency

- Art Creation: Apply various artistic styles (such as anime, oil painting, sketch) to images, helping artists quickly generate stylized works.
- Content Generation: Quickly generate images that conform to specific styles, enhancing content diversity and appeal.
- Advertising Design: Create style-consistent images for advertising and marketing materials, improving visual impact and brand consistency.
- Game Development: Rapidly generate stylized characters and scenes for games, increasing development efficiency.
- Virtual Reality (VR) and Augmented Reality (AR): Generate stylized virtual environments and elements to enhance user experience.
