DGM – A self-improving AI agent system that iteratively modifies its own code to enhance performance

What is DGM?

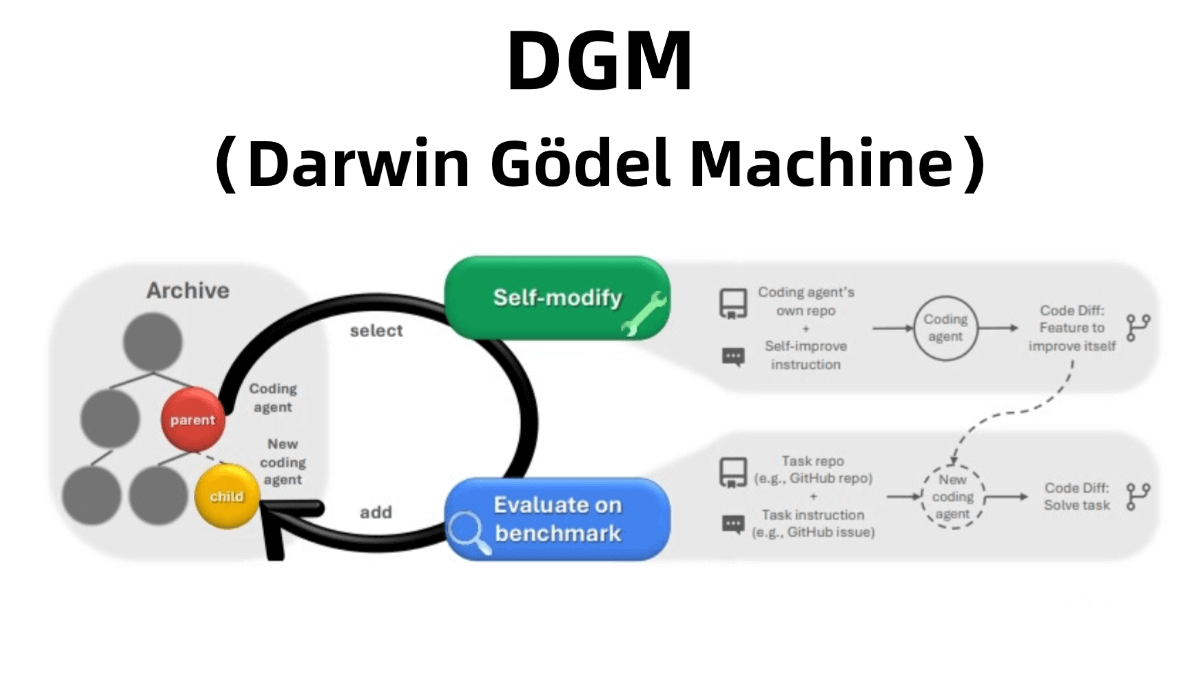

DGM (Darwin Gödel Machine) is a self-improving AI system that enhances its performance by iteratively modifying its own code. In each iteration, DGM selects an agent from its archive of coding agents, uses a foundation model to generate a modified version of that agent, and validates the new agent’s performance through benchmark tests. If the performance improves, the new agent is added to the archive.

Inspired by Darwinian evolution, DGM employs an open-ended exploration strategy: it evolves along multiple paths from diverse starting points, which helps it avoid local optima. In experiments, DGM raised its performance on the SWE-bench benchmark from 20.0% to 50.0% (a gain of 30 percentage points) and on the Polyglot benchmark from 14.2% to 30.7% (a gain of 16.5 percentage points). All self-improvement processes take place in a secure, sandboxed environment.

Key Features of DGM

- Self-Improvement: DGM iteratively modifies its own code to optimize performance and functionality. A self-modification module reads the system’s source code and uses a foundation model to generate modification suggestions.

- Empirical Validation: Each code modification is validated through programming benchmarks (e.g., SWE-bench and Polyglot). A secure evaluation engine uses Docker containers to isolate and assess the performance of each new version.

- Open-Ended Exploration: Inspired by Darwinian evolution, DGM explores a wide range of evolutionary paths from diverse starting points to avoid local optima. It maintains an archive of coding agents and continuously adds newly generated variants, allowing any archived agent to serve as a starting point for further evolution.

- Safety Measures: All execution and self-modification processes take place within an isolated sandbox environment, minimizing risks to the host system.
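To make the open-ended exploration idea more concrete, here is a minimal Python sketch of an archive from which any stored agent can be picked as the next starting point. It is an illustration only, not code from the DGM repository: the `Agent` and `Archive` classes, the `select_parent` method, and the score-plus-floor weighting are all assumptions made for this example.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Agent:
    """Hypothetical record for one archived coding agent."""
    name: str
    score: float                  # benchmark score in [0, 1]
    parent: Optional[str] = None  # name of the agent it was derived from


@dataclass
class Archive:
    """Hypothetical archive that keeps every agent added to it."""
    agents: List[Agent] = field(default_factory=list)

    def add(self, agent: Agent) -> None:
        self.agents.append(agent)

    def select_parent(self, rng: random.Random, floor: float = 0.05) -> Agent:
        # Assumed weighting: higher scores are favored, but the floor keeps
        # low-scoring agents selectable, so exploration is not purely greedy.
        weights = [agent.score + floor for agent in self.agents]
        return rng.choices(self.agents, weights=weights, k=1)[0]


archive = Archive()
archive.add(Agent("base", 0.20))
archive.add(Agent("child_a", 0.35, parent="base"))
archive.add(Agent("child_b", 0.10, parent="base"))

rng = random.Random(0)
# Names of all agents that were chosen as a parent at least once.
picks = {archive.select_parent(rng).name for _ in range(200)}
print(sorted(picks))
```

Because selection is weighted rather than always taking the top scorer, a weaker agent such as `child_b` can still become the ancestor of a later, stronger variant. That is the property that helps avoid local optima.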

How DGM Works

- Self-Modification Stage: DGM selects an agent from its archive and generates a new version using a foundation model.

- Validation Stage: The new agent is evaluated using programming benchmarks to determine whether it performs better than the original.

- Archive Update: Validated agents with improved performance are added to the archive, which stores all generated variants.
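The three stages above can be sketched as a single loop iteration. This is a simplified illustration under stated assumptions, not the DGM implementation: `dgm_step`, `toy_self_modify`, and `toy_evaluate` are hypothetical names, agents are represented as plain strings rather than code repositories, and the toy functions stand in for the foundation model call and the sandboxed benchmark run.

```python
import random
from typing import Callable, List, Tuple

Entry = Tuple[str, float]  # (agent code stand-in, benchmark score)


def dgm_step(
    archive: List[Entry],
    self_modify: Callable[[str], str],
    evaluate: Callable[[str], float],
    rng: random.Random,
) -> bool:
    """Run one iteration; return True if the child was archived."""
    # Self-modification stage: pick a parent and derive a new version.
    parent_code, parent_score = rng.choice(archive)
    child_code = self_modify(parent_code)
    # Validation stage: score the child on the benchmark.
    child_score = evaluate(child_code)
    # Archive update: keep the child only if it beats its parent.
    if child_score > parent_score:
        archive.append((child_code, child_score))
        return True
    return False


def toy_self_modify(code: str) -> str:
    # Stand-in for asking a foundation model to rewrite the agent.
    return code + "+patch"


def toy_evaluate(code: str) -> float:
    # Stand-in for a sandboxed benchmark run: each patch adds 0.1.
    return min(1.0, 0.2 + 0.1 * code.count("+patch"))


archive: List[Entry] = [("base", toy_evaluate("base"))]
rng = random.Random(0)
accepted = sum(dgm_step(archive, toy_self_modify, toy_evaluate, rng) for _ in range(5))
print(accepted, len(archive))
```

Passing `self_modify` and `evaluate` in as callables keeps the loop itself independent of any particular model or benchmark, so the toy functions could be swapped for real ones without touching `dgm_step`.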

Project Links

- GitHub Repository: https://github.com/jennyzzt/dgm

- arXiv Technical Paper: https://arxiv.org/pdf/2505.22954

Application Scenarios

- Automated Programming: DGM can automatically generate and optimize code, reducing developer workload. Its self-improvement mechanism is aimed at producing more efficient code and better overall software performance.

- Code Optimization: DGM detects and improves problematic code automatically, enhancing readability and execution efficiency. Continuous iteration leads to better versions of the code, saving development time and cost.

- Automated Debugging: DGM can identify potential bugs and generate fixes autonomously, evolving to resolve detected issues without human intervention and reducing maintenance costs.

- Research Platform: DGM offers a practical platform for studying self-improving systems and supports academic research in this emerging area. Researchers can use it to explore new algorithms and models.
