Playmate – A Facial Animation Generation Framework Developed by the Quwan Technology Team

What is Playmate?

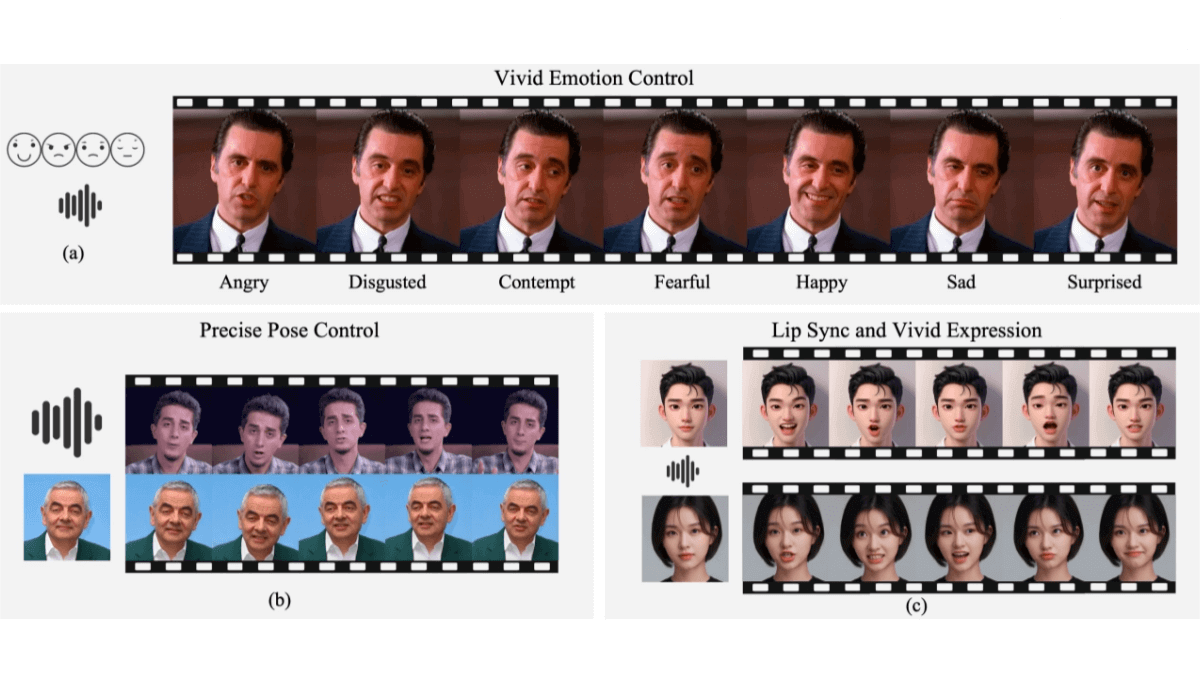

Playmate is a facial animation generation framework developed by the Guangzhou-based Quwan Technology team. It leverages a 3D implicit space-guided diffusion model with a dual-stage training framework to precisely control facial expressions and head poses based on audio and emotion prompts. Playmate enables the generation of high-quality dynamic portrait videos. Through a motion disentanglement module and an emotion control module, Playmate provides fine-grained control over video output, significantly enhancing video quality and emotional expressiveness. It marks a major advancement in audio-driven portrait animation, enabling flexible generation of expressive, stylized animations with broad application potential.

Key Features of Playmate

-

Audio-Driven Animation:

Generates dynamic portrait videos from just a static photo and an audio clip, achieving natural lip-sync and expressive facial movements. -

Emotion Control:

Produces emotionally expressive videos based on specific emotion cues (e.g., anger, disgust, contempt, fear, happiness, sadness, surprise). -

Pose Control:

Supports head movement control using reference images to generate a wide range of poses. -

Independent Control:

Allows independent manipulation of facial expression, lip movement, and head pose. -

Diverse Style Generation:

Capable of generating dynamic portraits in various styles, including realistic faces, cartoons, artistic portraits, and even animals—suitable for diverse use cases.

Technical Principles of Playmate

-

3D Implicit Space-Guided Diffusion Model:

Utilizes 3D implicit representation to disentangle facial attributes such as expression, lip movement, and head pose. An adaptive normalization strategy improves motion attribute disentanglement accuracy, ensuring more natural video output. -

Dual-Stage Training Framework:

-

Stage 1: Trains an audio-conditioned diffusion transformer that directly generates motion sequences from audio. A motion disentanglement module separates expression, lip movement, and head pose.

-

Stage 2: Introduces an emotion control module, encoding emotional cues into the latent space to finely control emotional expressiveness in generated videos.

-

-

Emotion Control Module:

Built on Diffusion Transformer (DiT) blocks, it embeds emotion conditions into the generation process. The model uses Classifier-Free Guidance (CFG) to balance output quality and diversity by adjusting CFG weights. -

Efficient Diffusion Model Training:

Employs a pretrained Wav2Vec2 model to extract audio features, and uses a self-attention mechanism to align audio and motion features. A Markov chain with forward and reverse steps adds Gaussian noise to motion data, and the diffusion transformer predicts the denoised sequence, generating the final motion output.

Project Resources for Playmate

-

Project Website: https://playmate111.github.io/Playmate/

-

GitHub Repository: https://github.com/Playmate111/Playmate

-

arXiv Technical Paper: https://arxiv.org/pdf/2502.07203

Application Scenarios for Playmate

-

Film Production:

Generates virtual character animations, enhances visual effects, and supports character replacement—reducing manual work and improving realism. -

Game Development:

Enables creation of animated NPCs and interactive story characters, enhancing immersion and interactivity. -

Virtual Reality (VR) & Augmented Reality (AR):

Powers natural facial expressions and lip-sync in virtual characters, virtual meetings, and social VR experiences to improve user engagement. -

Interactive Media:

Used in livestreaming, video conferencing, virtual influencers, and interactive ads to make content more lively and engaging. -

Education & Training:

Supports virtual teacher creation, simulation training, and language learning, making educational content more engaging and immersive.

Related Posts