MirrorMe – An Audio-Driven Portrait Animation Framework by Alibaba Tongyi

What is MirrorMe?

MirrorMe is a real-time, high-fidelity, audio-driven portrait animation framework developed by Alibaba’s Tongyi Lab. Built on the LTX video model, it combines three components (an identity injection mechanism, an audio-driven control module, and a progressive training strategy) to generate temporally consistent, high-quality animated video in real time. It ranks first on the EMTD benchmark, with strong image fidelity, lip-sync accuracy, and temporal stability. Its inference is efficient enough to support real-time applications such as e-commerce live streaming.

Key Features of MirrorMe

-

Real-Time High-Fidelity Animation Generation: Capable of producing high-quality upper-body animated videos in real time at 24 FPS, enabling smooth and interactive experiences.

-

Audio-Driven Lip Synchronization: Accurately converts audio signals into corresponding mouth movements, achieving highly realistic lip-sync.

-

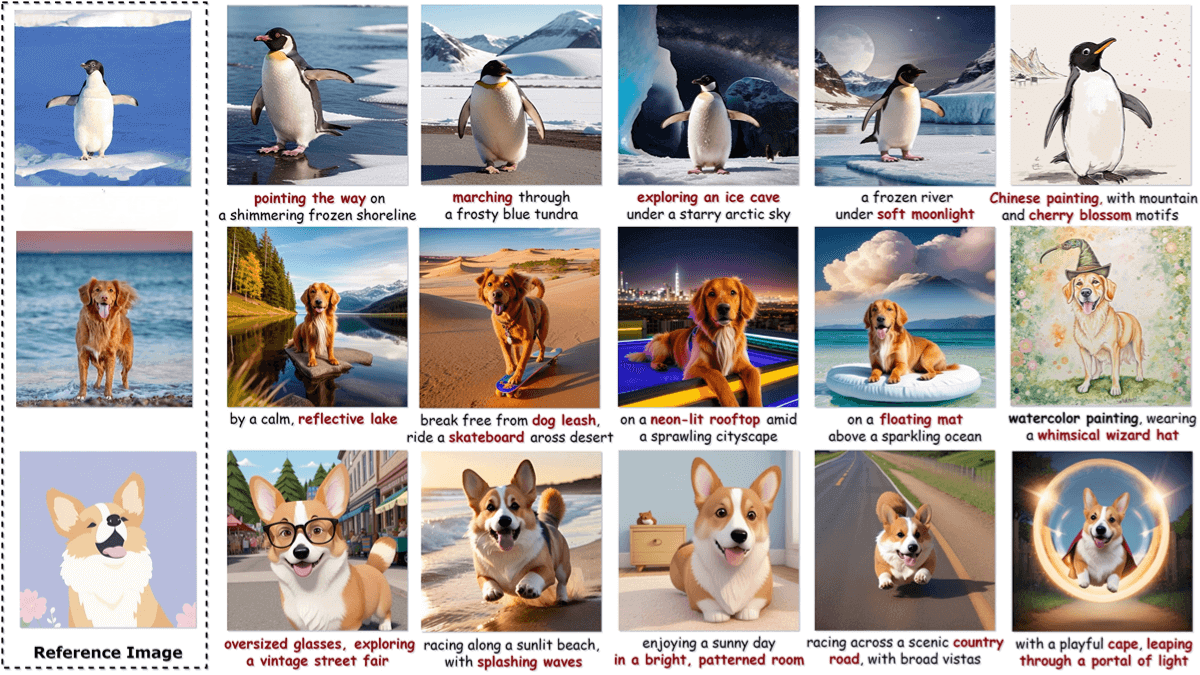

Identity Preservation: Uses a reference-based identity injection mechanism to ensure the generated animation closely resembles the input reference image.

-

Precise Facial Expression and Hand Gesture Control: Supports fine control of facial expressions and integrates hand gesture signals for accurate hand motion animation.

Technical Foundations of MirrorMe

- Core Architecture: Built on the LTX video model, a video generation framework based on a Diffusion Transformer. It uses spatiotemporal tokenization in which each token represents a block of 32×32 pixels across 8 frames, giving a pixel-to-token compression ratio of 1:8192 (32 × 32 × 8 = 8192).

- Identity Injection Mechanism: Ensures the animated subject visually matches the reference image. The 3D VAE in LTX encodes the reference image into identity latents, which are concatenated with the noised latents along the temporal dimension. Self-attention then fuses the two, preserving appearance fidelity in the generated video.

- Audio-Driven Control Module:

  - Causal Audio Encoder: Uses the pretrained wav2vec2 model to extract frame-level audio embeddings, then compresses the sequence with a causal encoder so that its temporal resolution matches that of the video latent sequence.

  - Audio Adapter: Injects audio features into the video generation process using a cross-attention-based adapter that merges audio and video features, ensuring accurate facial and mouth movement synchronization.

- Progressive Training Strategy: Improves training effectiveness and output quality by starting with facial close-ups to learn the audio-expression mapping. The model then gradually expands to full upper-body synthesis, using facial masks to maintain dynamic facial responsiveness. A pose encoder is also introduced to incorporate hand keypoints for precise hand gesture control.

- Efficient Inference: Leverages LTX’s compression and denoising efficiency to speed up inference. The video is compressed to 1/8 of its original length in time and to 1/32 of its original resolution along each spatial dimension, which greatly reduces the number of latent tokens. On consumer-grade NVIDIA GPUs, MirrorMe generates video at 24 FPS in real time, meeting the strict latency requirements of live applications.
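
The compression figures in this section can be checked with a little arithmetic. The Python sketch below is illustrative only. The clip size (one second at 24 FPS, 512×768 pixels), the assumption that the encoded reference image occupies a single latent frame, and the rate of 50 wav2vec2 embeddings per second are assumptions made here, not values taken from the MirrorMe paper. `latent_grid` is a hypothetical helper, not part of any released code.

```python
from fractions import Fraction

# Compression factors stated above: each token covers 32 x 32 pixels over 8 frames.
SPATIAL_FACTOR = 32
TEMPORAL_FACTOR = 8


def latent_grid(frames, height, width):
    """Return the (time, height, width) token grid for a clip.

    Simplification: assumes every dimension divides evenly and ignores any
    special handling of the first frame that a real causal VAE may apply.
    """
    if frames % TEMPORAL_FACTOR or height % SPATIAL_FACTOR or width % SPATIAL_FACTOR:
        raise ValueError("clip dimensions must be multiples of the compression factors")
    return (frames // TEMPORAL_FACTOR,
            height // SPATIAL_FACTOR,
            width // SPATIAL_FACTOR)


# Pixels represented by one token: the 1:8192 ratio quoted above.
pixels_per_token = SPATIAL_FACTOR * SPATIAL_FACTOR * TEMPORAL_FACTOR

# Hypothetical clip (assumption): one second at 24 FPS, 512 x 768 pixels.
fps, frames, height, width = 24, 24, 512, 768
t, h, w = latent_grid(frames, height, width)
video_tokens = t * h * w

# Identity injection (assumption: the reference image is one latent frame):
# its tokens are concatenated along the temporal axis before self-attention.
reference_tokens = h * w
total_tokens = video_tokens + reference_tokens

# Audio alignment (assumption: wav2vec2 emits one embedding per 20 ms, i.e. 50
# per second): embeddings the causal encoder must map onto each latent frame.
audio_rate = 50
latent_rate = Fraction(fps, TEMPORAL_FACTOR)            # 3 latent frames per second
audio_per_latent = Fraction(audio_rate) / latent_rate   # 50/3, about 16.7

print(pixels_per_token)         # 8192
print((t, h, w))                # (3, 16, 24)
print(video_tokens)             # 1152
print(total_tokens)             # 1536
print(float(audio_per_latent))
```

Under these assumptions, a one-second clip of roughly 9.4 million pixels reduces to 1,152 video tokens plus 384 reference tokens. This is the kind of reduction the real-time claim depends on.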

Project Links

- Technical Paper on arXiv: https://arxiv.org/pdf/2506.22065v1

Application Scenarios of MirrorMe

- E-commerce Live Streaming: Creates realistic virtual anchors that respond with natural expressions and movements based on input audio, enhancing interactivity and engagement in live streams.

- Virtual Customer Service: Generates lifelike virtual customer service avatars that interact with users in real time via audio-driven animations. Supports multilingual environments, making it accessible to users with diverse language backgrounds.

- Online Education: Powers virtual teachers that generate real-time facial expressions and gestures based on teaching content. Also supports creating personalized teacher avatars tailored to individual students, providing customized learning experiences.

- Virtual Meetings: Generates virtual meeting participants that animate facial and body expressions in real time from speech, improving remote collaboration and the sense of face-to-face interaction.

- Social Media: Enables users to create personalized virtual avatars for social media, interact using voice-driven animations, and share engaging content, boosting creativity and viewer participation.
