OpenReasoning-Nemotron: NVIDIA’s High-Performance Reasoning Model Family Surpassing o3 to Become the New Open-Source Benchmark

What is OpenReasoning-Nemotron?

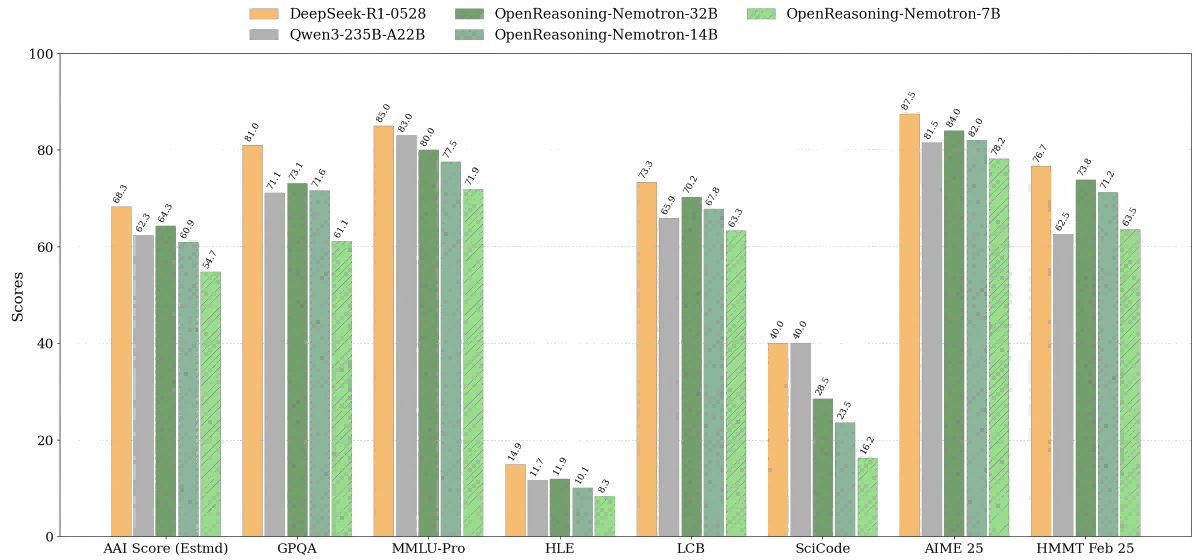

OpenReasoning-Nemotron is a family of large language models (LLMs) released by NVIDIA and built on the Qwen 2.5 architecture. The models are distilled from DeepSeek R1 0528 (671B) using large-scale generated data and come in four sizes: 1.5B, 7B, 14B, and 32B parameters. They target reasoning tasks in mathematics, science, and programming. The models are available on Hugging Face and are intended to give researchers a stronger reasoning baseline and to support work such as reinforcement learning for reasoning (RL4R).

Key Features and Advantages

- High-Quality Distilled Dataset: NVIDIA used the DeepSeek R1 0528 model to generate 5 million high-quality reasoning samples covering mathematics, programming, and science. NVIDIA plans to release this dataset in the coming months to support further work on model reasoning.

- Multiple Model Sizes: To fit different compute budgets and research needs, OpenReasoning-Nemotron is offered with 1.5B, 7B, 14B, and 32B parameters, covering applications from lightweight to high-performance.

- Outstanding Reasoning Performance: The models score well across reasoning benchmarks. The 7B and larger models show especially large gains, with a reported top score of 78.2, surpassing models such as o3 and setting a new bar among open-source models.

- Robust Research Foundation: Training and evaluation use NVIDIA's NeMo-Skills toolkit, which supports quality and reproducibility. The related code and data generation tools are also open-sourced so that researchers can build on them and run customized training.
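To make the trade-off between the four model sizes concrete, the following is a rough back-of-the-envelope sketch of weight memory per size. It assumes weights only (no KV cache, activations, or framework overhead) and uses the nominal parameter counts from the model names; the function and dictionary names are illustrative, not part of any NVIDIA tooling.

```python
# Rough weight-memory estimate for each model size listed above.
# Assumption: weights only, ignoring KV cache, activations, and overhead.

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts (in billions), taken from the model names.
MODEL_SIZES_B = {"1.5B": 1.5, "7B": 7.0, "14B": 14.0, "32B": 32.0}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Return approximate weight memory in GB (1 GB = 10^9 bytes)."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    for name, size in MODEL_SIZES_B.items():
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(size, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name:>4} -> {row}")
```

Under these assumptions, the 7B model needs about 14 GB for bf16 weights and the 32B model about 64 GB, which is why the smaller sizes are the practical choice for single-GPU experiments.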

Technical Principles and Architecture

OpenReasoning-Nemotron models are based on the Qwen 2.5 architecture and use large-scale data distillation to transfer reasoning ability from the DeepSeek R1 0528 671B model. Training relies on NVIDIA's NeMo-Skills toolchain, which covers data generation, preprocessing, model conversion, training, and evaluation, to support model quality and reproducibility. Evaluation uses the pass@1 metric, that is, the probability that a single sampled answer to a problem is correct, to measure performance across reasoning tasks.
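As an illustration of the pass@1 metric mentioned above, here is a minimal sketch using the commonly used unbiased pass@k estimator, which reduces to "correct samples divided by total samples" when k = 1. It assumes several answers are sampled per problem and each is graded as correct or incorrect; the source does not say how many samples NVIDIA draws, and the function names are hypothetical rather than NeMo-Skills APIs.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: any k-subset contains a correct one.
        return 1.0
    # 1 - P(all k drawn samples are incorrect)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_1(results: list[tuple[int, int]]) -> float:
    """Average pass@1 over problems; each entry is (num_samples, num_correct)."""
    return sum(pass_at_k(n, c, 1) for n, c in results) / len(results)


if __name__ == "__main__":
    # Three hypothetical problems with 4 samples each: 4, 2, and 0 correct.
    print(benchmark_pass_at_1([(4, 4), (4, 2), (4, 0)]))
```

Averaging over several samples per problem reduces the variance of the reported score, which matters on small benchmarks where a single problem shifts the result noticeably.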

Project Links

- Hugging Face Model Page: https://huggingface.co/models?search=openreasoning-nemotron

- Official Blog Post: https://huggingface.co/blog/nvidia/openreasoning-nemotron
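For readers who want to try a model from the Hugging Face page, the following is a hedged sketch of loading one with the `transformers` library. The repository id pattern `nvidia/OpenReasoning-Nemotron-<size>` is an assumption and should be confirmed on the model page; the helper names are hypothetical, and `device_map="auto"` additionally requires the `accelerate` package.

```python
# Sketch only: the repository id pattern below is an assumption; confirm the
# exact names on the Hugging Face model page before use.
SIZES = ("1.5B", "7B", "14B", "32B")


def repo_id(size: str) -> str:
    """Build the assumed Hugging Face repository id for a given model size."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    return f"nvidia/OpenReasoning-Nemotron-{size}"


def generate(prompt: str, size: str = "1.5B", max_new_tokens: int = 1024) -> str:
    """Download the model (several GB) and generate a response to one prompt."""
    # Imported lazily so repo_id() works without these packages installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo_id(size))
    model = AutoModelForCausalLM.from_pretrained(
        repo_id(size), torch_dtype=torch.bfloat16, device_map="auto"
    )
    messages = [{"role": "user", "content": prompt}]
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt", return_dict=True
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output[0, prompt_len:], skip_special_tokens=True)


if __name__ == "__main__":
    print(generate("What is the sum of the first 100 positive integers?"))
```

Reasoning models tend to produce long outputs, so `max_new_tokens` may need to be raised well beyond the default used here for harder problems.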

Application Scenarios

- Mathematical Reasoning: The models solve complex math problems and achieve strong results on benchmarks such as AIME24 and AIME25.

- Scientific Reasoning: The models can reason about scientific concepts, which helps researchers in scientific exploration.

- Programming Assistance: The models follow code logic and can offer programming suggestions and generate code, improving development efficiency.

- Reinforcement Learning Reasoning Research: Their performance makes them a strong baseline for research in reinforcement learning for reasoning (RL4R).
