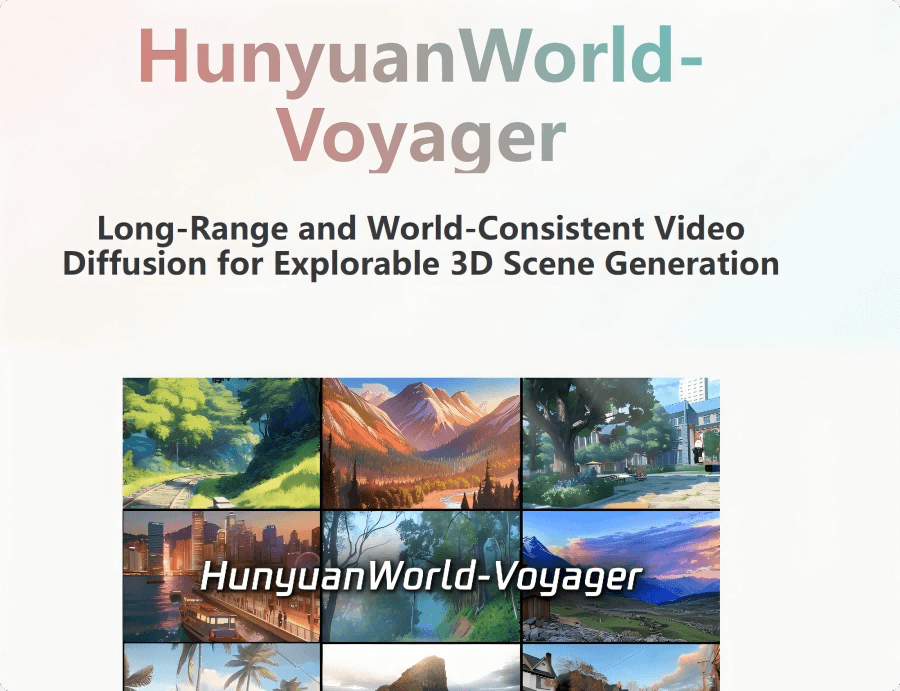

What is HunyuanWorld-Voyager?

HunyuanWorld-Voyager (Voyager for short) is a world model from Tencent, billed as the industry's first ultra-long-range roaming world model with native 3D reconstruction support. It is a video diffusion framework that takes a single image and a user-defined camera path and generates a 3D-consistent scene video along that path. Because it outputs aligned RGB and depth videos, the result can be lifted directly to a 3D point cloud sequence, which makes 3D reconstruction efficient. Voyager has two key components: world-consistent video diffusion, and long-range world exploration, which expands the scene iteratively through efficient point pruning and autoregressive inference. It also introduces a scalable data engine for generating large-scale RGB-D video training data. On the WorldScore benchmark, Voyager performs strongly across multiple metrics.
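To make "user-defined camera path" concrete, the sketch below builds a straight-line trajectory as a list of 4x4 camera-to-world matrices. The helper name `straight_path`, the row-major layout, and the +z forward convention are assumptions chosen for illustration. The format Voyager actually expects is not described here and may differ.

```python
def straight_path(num_frames, step=0.1):
    """Return camera-to-world poses that move forward along +z.

    Hypothetical helper: each pose is a 4x4 row-major matrix with an
    identity rotation and a translation of (0, 0, i * step).
    """
    poses = []
    for i in range(num_frames):
        poses.append([
            [1.0, 0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, i * step],
            [0.0, 0.0, 0.0, 1.0],
        ])
    return poses


# Five poses, half a unit apart; the last one sits 2.0 units forward.
path = straight_path(num_frames=5, step=0.5)
print(len(path), path[-1][2][3])
```

More elaborate paths (orbits, pans) would only change the rotation and translation entries of each matrix.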

Main Features of HunyuanWorld-Voyager

- 3D Point Cloud Sequence Generation from a Single Image: Generates 3D-consistent point cloud sequences along user-defined camera paths, supporting long-range world exploration.
- 3D-Consistent Scene Video Generation: Produces 3D-consistent scene videos along user-defined camera trajectories, giving an immersive scene-roaming experience.
- Supports Real-Time 3D Reconstruction: The generated RGB and depth videos can be used directly for efficient 3D reconstruction without additional tools, enabling rapid video-to-3D conversion.
- Versatile Application Scenarios: Suitable for video reconstruction, image-to-3D generation, video depth estimation, and other 3D understanding and generation tasks.
- Powerful Performance: On Stanford University’s WorldScore benchmark, Voyager achieves excellent results across multiple key metrics for 3D scene generation and video diffusion.
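The claim that aligned RGB and depth frames can be turned into 3D directly rests on standard pinhole back-projection: a pixel (u, v) with depth d maps to the camera-space point ((u - cx) · d / fx, (v - cy) · d / fy, d). The following is a minimal sketch of that step, not Voyager's own code. The function name `unproject_frame`, the pinhole intrinsics `fx, fy, cx, cy`, and the convention that depth is measured along the optical axis are all assumptions.

```python
def unproject_frame(rgb, depth, fx, fy, cx, cy):
    """Lift an aligned RGB-D frame to a colored point list in camera space.

    rgb:   H x W nested list of (r, g, b) tuples
    depth: H x W nested list of depths along the optical axis (0 = invalid)
    """
    points = []
    for v, (rgb_row, depth_row) in enumerate(zip(rgb, depth)):
        for u, (color, d) in enumerate(zip(rgb_row, depth_row)):
            if d <= 0:
                continue  # skip pixels with no valid depth
            x = (u - cx) * d / fx
            y = (v - cy) * d / fy
            points.append(((x, y, d), color))
    return points


# 2 x 2 toy frame: one pixel has no depth and is dropped.
rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
depth = [[2.0, 2.0], [0.0, 4.0]]
cloud = unproject_frame(rgb, depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(len(cloud), cloud[0])
```

Applying each frame's camera-to-world pose to these points would place them in a shared world frame. A real pipeline would vectorize this with an array library rather than loop over pixels.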

Technical Principles of HunyuanWorld-Voyager

- World-Consistent Video Diffusion: A unified architecture jointly generates aligned RGB and depth video sequences, conditioned on existing world observations to keep the scene globally consistent.
- Long-Range World Exploration: Efficient point pruning and autoregressive inference, combined with smooth video sampling, expand the scene iteratively while maintaining context-aware consistency.
- Scalable Data Engine: A video reconstruction pipeline automates camera pose estimation and metric depth prediction, so large-scale, diverse training data can be produced from arbitrary videos without manual 3D annotation.
- Autoregressive Inference and World Caching: Point pruning and autoregressive inference work with a world caching mechanism to expand the scene step by step, maintain geometric consistency, and support arbitrary camera trajectories.
- Efficient 3D Reconstruction: The generated RGB and depth videos can be used directly for rapid 3D reconstruction, eliminating the need for additional reconstruction tools.
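The interplay between the world cache, point pruning, and autoregressive inference can be sketched as a loop: each new clip is generated with the cache as context, and its points are merged back with redundant ones discarded. The code below is a toy illustration under stated assumptions. `WorldCache`, `explore`, and `fake_generator` are hypothetical names, the diffusion model is replaced by a placeholder function, and the one-point-per-voxel rule is a simple stand-in for pruning rather than Voyager's actual criterion.

```python
import math


class WorldCache:
    """Toy world cache: stores at most one point per voxel."""

    def __init__(self, voxel_size=0.5):
        self.voxel_size = voxel_size
        self.voxels = {}  # voxel index -> (point, color)

    def _key(self, point):
        return tuple(math.floor(c / self.voxel_size) for c in point)

    def add(self, colored_points):
        """Insert points, pruning any that land in an occupied voxel."""
        added = 0
        for point, color in colored_points:
            key = self._key(point)
            if key not in self.voxels:
                self.voxels[key] = (point, color)
                added += 1
        return added

    def __len__(self):
        return len(self.voxels)


def explore(cache, generate_clip, camera_segments):
    """Autoregressive loop: each clip is conditioned on the cache so far."""
    for segment in camera_segments:
        new_points = generate_clip(cache, segment)  # stand-in for the model
        cache.add(new_points)
    return cache


def fake_generator(cache, segment):
    """Placeholder for the diffusion model: emits two points per segment."""
    z = float(segment)
    gray = (128, 128, 128)
    return [((0.0, 0.0, z), gray),
            ((0.0, 0.0, z + 0.1), gray)]  # falls in the same voxel as the first


cache = explore(WorldCache(voxel_size=0.5), fake_generator, camera_segments=[0, 1, 2])
print(len(cache))  # 3: one surviving point per segment
```

Keeping the cache bounded this way is what lets the loop run over long trajectories without the conditioning data growing with every clip.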

HunyuanWorld-Voyager Project Links

- Official Website: https://3d-models.hunyuan.tencent.com/world/
- GitHub Repository: https://github.com/Tencent-Hunyuan/HunyuanWorld-Voyager
- Hugging Face Model Hub: https://huggingface.co/tencent/HunyuanWorld-Voyager
- Technical Report: https://3d-models.hunyuan.tencent.com/voyager/voyager_en/assets/HYWorld_Voyager.pdf

Application Scenarios of HunyuanWorld-Voyager

- Video Reconstruction: Generates aligned RGB and depth videos, enabling efficient and direct 3D reconstruction without additional tools.
- Image-to-3D Generation: Converts a single image into a 3D-consistent point cloud sequence, allowing rapid 2D-to-3D conversion for virtual scene creation.
- Video Depth Estimation: Produces depth aligned with the RGB video for video analysis and 3D understanding tasks.
- Virtual Reality (VR) and Augmented Reality (AR): Generated 3D scenes and videos can be used to build immersive VR experiences or AR applications.
- Game Development: Generated 3D scene assets can be integrated into mainstream game engines, providing content for game development.
- 3D Modeling and Animation: Generated 3D point clouds and videos can serve as inputs for 3D modeling and animation production, improving creative efficiency.
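For the downstream uses above, a generated point cloud usually has to be written to a file that other tools can open. The sketch below writes colored points as ASCII PLY, a widely supported point cloud format. The export format Voyager itself uses is not stated here, so PLY is an assumption, and `write_ply` and the output file name are hypothetical.

```python
import os
import tempfile


def write_ply(path, colored_points):
    """Write ((x, y, z), (r, g, b)) pairs as an ASCII PLY point cloud."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(colored_points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    with open(path, "w", encoding="ascii") as f:
        f.write("\n".join(header) + "\n")
        for (x, y, z), (r, g, b) in colored_points:
            f.write(f"{x} {y} {z} {r} {g} {b}\n")


# Two toy points written to the system temp directory.
points = [((0.0, 0.0, 1.0), (255, 0, 0)), ((0.5, 0.0, 1.0), (0, 255, 0))]
out_path = os.path.join(tempfile.gettempdir(), "voyager_demo.ply")
write_ply(out_path, points)
```

The resulting file can be opened in tools such as MeshLab, CloudCompare, or Blender for inspection or further conversion before it goes into an engine or modeling pipeline.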