AnyI2V – An Image-to-Animation Generation Framework Launched by Fudan University in Collaboration with Alibaba DAMO Academy and Others

What is AnyI2V?

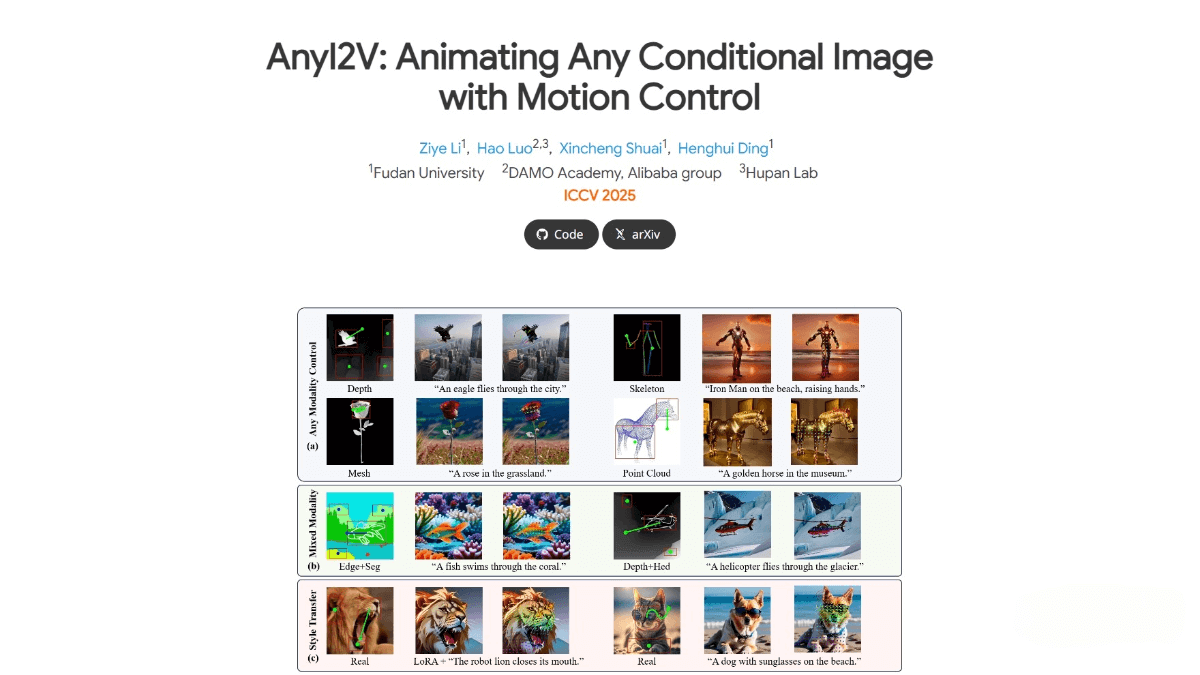

AnyI2V is an image-to-animation generation framework jointly developed by Fudan University, Alibaba DAMO Academy, and Hupan Lab. It is training-free: without large training datasets, it turns static conditional images (such as meshes or point clouds) into videos that follow user-defined motion trajectories. AnyI2V accepts multiple input modalities and supports flexible editing through LoRA and text prompts, giving users control over both spatial layout and motion.

Key Features of AnyI2V

- Multimodal support: Accepts various conditional input types, including modalities for which paired training data is difficult to obtain, such as meshes and point clouds.
- Hybrid conditional inputs: Combines different types of inputs to increase flexibility.
- Editing capabilities: Allows style transfer and content adjustment using LoRA or text prompts.
- Motion control: Users can define motion trajectories to precisely control video animation effects.
- No training required: Eliminates the need for large datasets or complex training procedures, lowering the barrier to entry.
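To make the motion-control feature above more concrete, here is a minimal sketch of how a user-defined trajectory could be represented. It assumes the trajectory is given as a few sparse `(frame, x, y)` keypoints that are linearly interpolated to one position per frame and then expanded into boxes. The function names and the keypoint format are hypothetical illustrations, not AnyI2V's actual interface.

```python
import numpy as np

def interpolate_trajectory(keypoints, num_frames):
    """Linearly interpolate sparse (frame, x, y) keypoints to one center per frame.

    Hypothetical helper: the keypoint format is an assumption for illustration.
    Frames outside the keypoint range hold the nearest keypoint's position.
    """
    pts = sorted(keypoints)  # np.interp needs increasing frame indices
    frames = np.array([p[0] for p in pts], dtype=float)
    xs = np.array([p[1] for p in pts], dtype=float)
    ys = np.array([p[2] for p in pts], dtype=float)
    t = np.arange(num_frames, dtype=float)
    return np.stack([np.interp(t, frames, xs), np.interp(t, frames, ys)], axis=1)

def boxes_along_trajectory(centers, box_w, box_h):
    """Turn per-frame centers into (x_min, y_min, x_max, y_max) boxes."""
    half = np.array([box_w / 2.0, box_h / 2.0])
    return np.concatenate([centers - half, centers + half], axis=1)

# Example: move an object from (10, 20) to (50, 20) over 16 frames.
centers = interpolate_trajectory([(0, 10, 20), (15, 50, 20)], num_frames=16)
boxes = boxes_along_trajectory(centers, box_w=8, box_h=8)
```

A per-frame box is one simple way to tell a generator where an object should be in each frame; how the framework consumes such a trajectory internally is not specified here.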

Technical Principles

- DDIM inversion: Applies DDIM (Denoising Diffusion Implicit Models) inversion to the conditional image to extract features for the subsequent animation generation.
- Feature extraction and replacement: Removes the temporal self-attention module from the 3D U-Net so that extraction focuses on spatial features, then extracts features from the spatial blocks and stores them at specific timesteps.
- Latent representation optimization: Injects the stored features back into the 3D U-Net and optimizes the latent representation, using automatically generated semantic masks to restrict updates to the relevant local regions.
- Motion control: Users provide motion trajectories, which are combined with the optimized latent representation to generate videos in which objects follow the specified paths.
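The DDIM inversion step in the list above can be sketched as follows. This is a toy NumPy illustration of deterministic DDIM inversion in general, not the project's code. The noise predictor `toy_eps_model` is a hypothetical stand-in for the pretrained denoising network, the linear beta schedule is an assumption, and the sketch saves intermediate latents where the real framework is described as storing U-Net features.

```python
import numpy as np

def make_alpha_bars(num_steps=50, beta_start=1e-4, beta_end=2e-2):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def toy_eps_model(x, t):
    """Hypothetical placeholder for the pretrained noise-prediction network."""
    return 0.1 * x

def ddim_step(x, eps, a_from, a_to):
    """Deterministic DDIM update (eta = 0) between two noise levels."""
    x0_pred = (x - np.sqrt(1.0 - a_from) * eps) / np.sqrt(a_from)
    return np.sqrt(a_to) * x0_pred + np.sqrt(1.0 - a_to) * eps

def ddim_invert(x0, eps_model, alpha_bars, save_at=()):
    """Walk a clean latent toward noise, saving states at chosen timesteps."""
    x = x0.copy()
    saved = {}
    a_prev = 1.0  # alpha-bar of a clean, noise-free latent
    for t, a_t in enumerate(alpha_bars):
        eps = eps_model(x, t)
        x = ddim_step(x, eps, a_prev, a_t)
        if t in save_at:
            saved[t] = x.copy()  # stand-in for storing features at this timestep
        a_prev = a_t
    return x, saved

alpha_bars = make_alpha_bars(num_steps=50)
latent = np.random.default_rng(0).normal(size=(4, 8, 8))
noisy_latent, saved_states = ddim_invert(latent, toy_eps_model, alpha_bars, save_at=(10, 30))
```

Inversion reuses the sampling update but runs it from low noise to high noise, which is why a single `ddim_step` function serves both directions.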

Project Resources

- Official website: https://henghuiding.com/AnyI2V/
- GitHub repository: https://github.com/FudanCVL/AnyI2V
- arXiv technical paper: https://arxiv.org/pdf/2507.02857

Application Scenarios

- Animation production: Enables animators to quickly generate prototypes by converting static images into dynamic videos, providing more creative space.
- Video special effects: Used in film production to generate complex visual effects, animate static scene images, or add dynamic effects to characters for enhanced visual impact.
- Game development: Generates dynamic scenes and character animations for games, creating richer and more vivid visual experiences.
- Dynamic advertising: Allows designers to convert static ad images into dynamic videos that capture audience attention.
- Social media content: Brands and creators can produce eye-catching social media videos to increase engagement and content virality.
