Hunyuan3D-Omni: A 3D Asset Generation Framework by Tencent Hunyuan

What is Hunyuan3D-Omni?

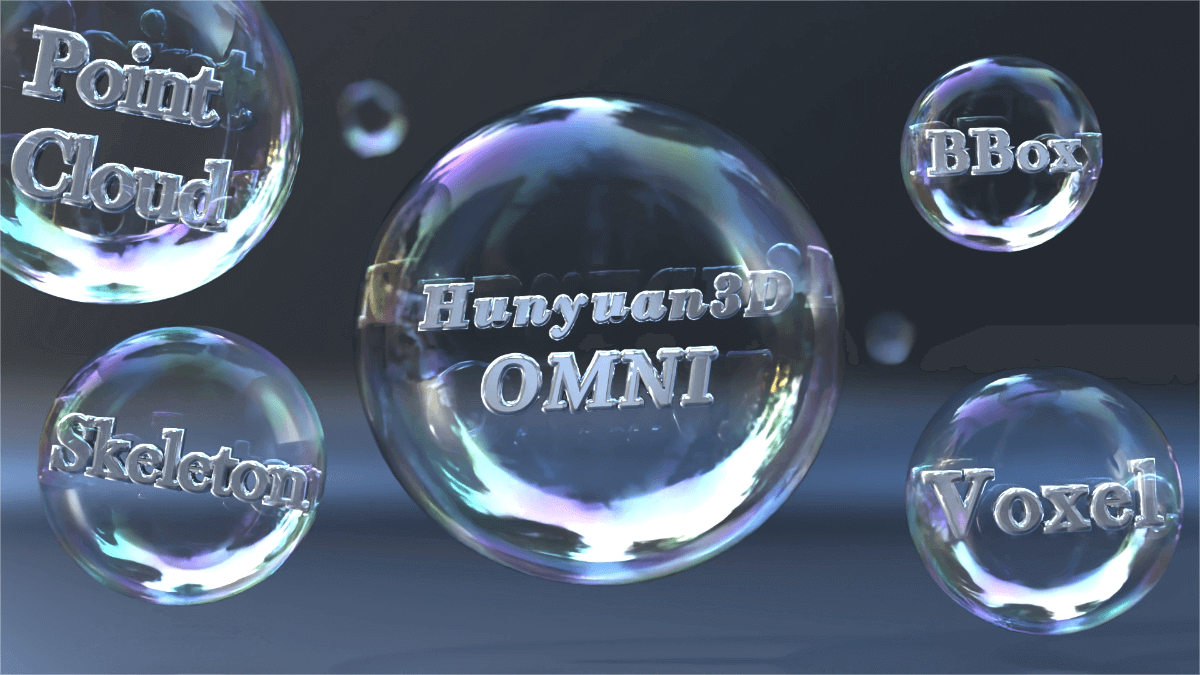

Hunyuan3D-Omni is a 3D asset generation framework from Tencent's Hunyuan 3D team that generates 3D models under the guidance of multiple control signals. Built on the Hunyuan3D 2.1 architecture, it adds a unified control encoder that handles diverse inputs (point clouds, voxels, bounding boxes, and skeletal poses) without confusing one signal type with another. During training, a progressive, difficulty-aware sampling strategy prioritizes the more challenging signals, which makes the model more robust to missing inputs. These control modes make it possible, for example, to generate a character model in a specific pose or a model constrained to a given bounding box. The framework also addresses common problems in traditional 3D generation, such as distortions and missing details.

Key Features of Hunyuan3D-Omni

- Multi-modal control signal input: Supports point clouds, skeletal poses, bounding boxes, and voxels. A unified control encoder converts these signals into guiding conditions for generation, enabling precise 3D model creation.
- High-fidelity 3D model generation: Produces high-quality 3D models and reduces common problems such as distortions, flattening, missing details, and misaligned proportions.
- Geometry-aware transformations: Integrates geometric awareness so that generated models are structurally plausible, natural, and realistic.
- Enhanced robustness in production: Uses progressive, difficulty-aware sampling during training to stay stable across varied input conditions. Even when some signals are missing, the model can still generate high-quality 3D assets.
- Standardized and stylized outputs: Supports standardized character poses and offers style options for different aesthetic requirements and scenarios.
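
As a rough illustration of the difficulty-aware sampling mentioned above, the Python sketch below picks one control type per training example with unequal probabilities. The weights, the assumed ordering of difficulty (skeletal pose hardest, point cloud easiest), and the function name `sample_control` are assumptions made for this example, not values taken from the Hunyuan3D-Omni code.

```python
import random
from collections import Counter

# Hypothetical sampling weights (illustrative only, not from the project).
# Signals assumed to be harder get a larger share of training examples.
CONTROL_WEIGHTS = {
    "skeleton": 0.4,
    "bounding_box": 0.3,
    "voxel": 0.2,
    "point_cloud": 0.1,
}


def sample_control(rng: random.Random) -> str:
    """Pick the control type used for one training example."""
    names = list(CONTROL_WEIGHTS)
    weights = [CONTROL_WEIGHTS[name] for name in names]
    # random.choices draws with replacement according to the given weights.
    return rng.choices(names, weights=weights, k=1)[0]


# Draw many examples with a fixed seed and count how often each type appears.
rng = random.Random(0)
counts = Counter(sample_control(rng) for _ in range(10_000))
```

Inspecting `counts` after a run shows the harder signal types being selected more often than the easier ones. A progressive schedule could be approximated by changing the weights as training advances, although how the project does this is not described here.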

Technical Principles of Hunyuan3D-Omni

- Unified control encoder: Encodes multiple control signals (point clouds, skeletal poses, bounding boxes, voxels) into point cloud representations. A lightweight encoder extracts features, avoiding control target confusion and enabling effective multimodal fusion.
- Progressive training strategy: Applies a progressive, difficulty-aware sampling method, prioritizing harder signals while reducing weight on easier ones. This fosters robust multimodal fusion and strengthens resilience to missing inputs.
- Geometry-aware generation: Embeds geometric understanding in the generation process, allowing the model to interpret the geometry of input signals and produce geometrically consistent 3D assets.
- Diffusion-based generation: Employs diffusion models to generate 3D assets by progressively denoising. Control signals serve as guiding conditions, enabling controllable 3D generation.
- Extended model architecture: Builds upon Hunyuan3D 2.1, expanding support for multiple control signals while enhancing overall performance and output quality.
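
To make the idea of encoding different control signals as point clouds more concrete, here is a minimal Python sketch that turns a bounding box, a voxel grid, and a skeleton into plain lists of 3D points. All function names, parameters, and the specific conversions (box corners, voxel centers, evenly spaced samples along bones) are assumptions chosen for illustration. The actual encoder in Hunyuan3D-Omni may represent these signals differently.

```python
from itertools import product
from typing import List, Tuple

Point = Tuple[float, float, float]
Bone = Tuple[Point, Point]  # a bone is a segment between two joints


def bbox_to_points(min_corner: Point, max_corner: Point) -> List[Point]:
    """Return the 8 corners of an axis-aligned bounding box."""
    # zip pairs up (xmin, xmax), (ymin, ymax), (zmin, zmax);
    # product then enumerates every min/max combination.
    return list(product(*zip(min_corner, max_corner)))


def voxels_to_points(occupied: List[Tuple[int, int, int]],
                     voxel_size: float) -> List[Point]:
    """Return the center of each occupied voxel cell."""
    return [((i + 0.5) * voxel_size,
             (j + 0.5) * voxel_size,
             (k + 0.5) * voxel_size) for i, j, k in occupied]


def skeleton_to_points(bones: List[Bone], samples_per_bone: int = 3) -> List[Point]:
    """Sample evenly spaced points along each bone, endpoints included."""
    if samples_per_bone < 2:
        raise ValueError("samples_per_bone must be at least 2")
    points: List[Point] = []
    for start, end in bones:
        for step in range(samples_per_bone):
            t = step / (samples_per_bone - 1)
            # Linear interpolation between the two joints.
            points.append((start[0] + t * (end[0] - start[0]),
                           start[1] + t * (end[1] - start[1]),
                           start[2] + t * (end[2] - start[2])))
    return points


box_points = bbox_to_points((0.0, 0.0, 0.0), (1.0, 2.0, 3.0))
voxel_points = voxels_to_points([(0, 0, 0), (1, 0, 0)], voxel_size=0.5)
bone_points = skeleton_to_points([((0.0, 0.0, 0.0), (0.0, 1.0, 0.0))])
```

Once every signal is a list of points, a single downstream encoder can in principle consume all of them. A real system would also need some way to tell the signal types apart, which this sketch leaves out.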

Project Resources

- GitHub Repository: https://github.com/Tencent-Hunyuan/Hunyuan3D-Omni
- Hugging Face Models: https://huggingface.co/tencent/Hunyuan3D-Omni
- arXiv Paper: https://arxiv.org/pdf/2509.21245

Application Scenarios of Hunyuan3D-Omni

- Game development: Rapidly generate high-quality 3D characters, props, and environments to boost efficiency and reduce production costs.
- Film production: Create realistic 3D effects and animations, accelerating workflows and enhancing visual quality.
- Architectural design: Generate 3D assets for buildings and interior designs, aiding design visualization.
- Virtual reality (VR) and augmented reality (AR): Build immersive 3D environments and interactive objects to enrich user experiences.
- Industrial design: Produce product prototypes and component models for design validation and presentations.
- Education and training: Create 3D teaching resources, such as virtual labs and historical scene reconstructions, to enhance learning outcomes.
