Ring-1T-preview – Ant Group’s Bailing Open-Source Trillion-Parameter Reasoning Model

What is Ring-1T-preview?

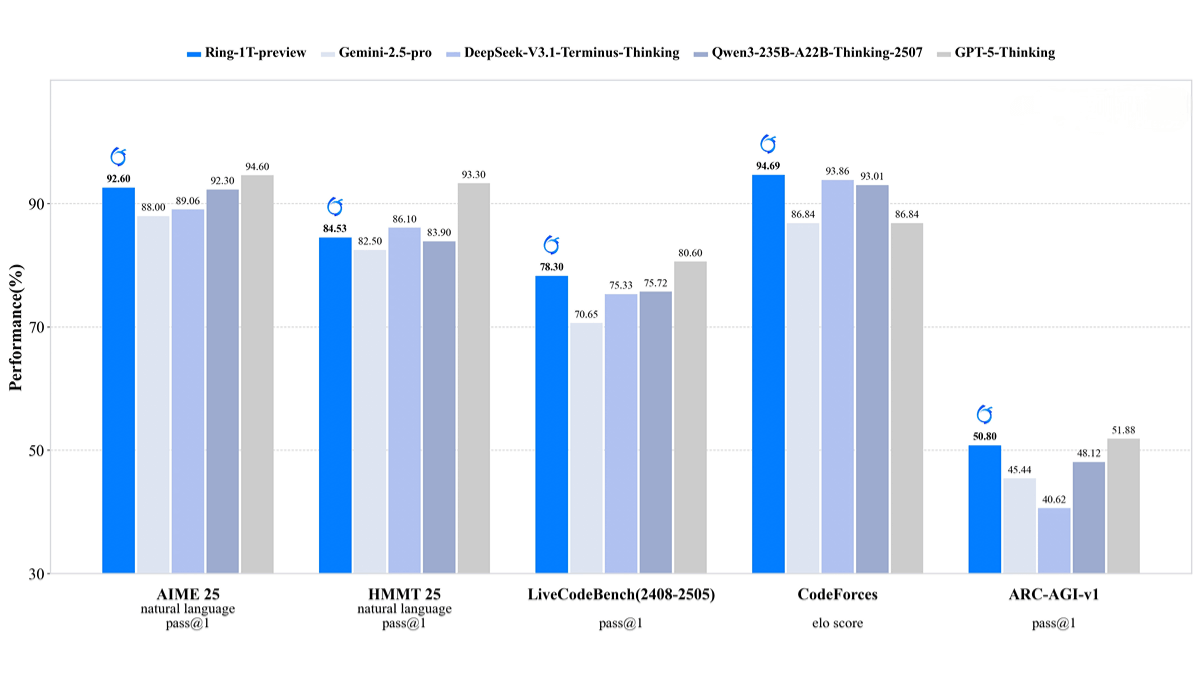

Ring-1T-preview is the preview release of Ant Group’s open-source trillion-parameter reasoning model. It is built on the Ling 2.0 MoE (mixture-of-experts) architecture, pretrained on 20T tokens, and then trained for reasoning with ASystem, Ant’s self-developed reinforcement learning framework. On natural language reasoning it scores 92.6 on AIME 2025, close to GPT-5’s 94.6. It also solved Problem 3 of IMO 2025 in a single attempt and gave partially correct answers to other problems.

Key Features of Ring-1T-preview

- Powerful Natural Language Reasoning: Scores 92.6 on the AIME 2025 benchmark, close to GPT-5’s 94.6, which points to strong mathematical reasoning.

- Efficient Problem-Solving: Solved Problem 3 of IMO 2025 in one attempt and produced partially correct answers to other problems.

- Multi-domain Competence: Reports strong results on HMMT 2025, LiveCodeBench v6, CodeForces, and ARC-AGI-1, covering mathematics, coding, and abstract reasoning tasks.

- Open-source Collaboration: Code and weights are both published on Hugging Face, so the community can explore the model, give feedback, and help speed up iteration.

Technical Foundations of Ring-1T-preview

- Architecture Design: Built on the Ling 2.0 MoE architecture at trillion-parameter scale. A mixture-of-experts design activates only a subset of experts per token, which keeps computation efficient relative to the total parameter count.

- Pretraining Corpus: Pretrained on 20T high-quality tokens to capture broad linguistic knowledge and patterns.

- Reinforcement Learning Training: Trained with RLVR (reinforcement learning with verifiable rewards) on ASystem, Ant’s in-house reinforcement learning system, to strengthen reasoning and decision-making.

- Continuous Iteration: The model is still being trained. Known issues under active work include multilingual interference and repetitive reasoning.
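The efficiency argument for an MoE layer can be illustrated with a small top-k routing sketch. This is a generic illustration, not the Ling 2.0 router: the number of experts, the value of `k`, the renormalization step, and all function names here are assumptions made for the example. In a real model the gate scores come from a learned layer applied to the token; here they are passed in directly.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of gate scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


def moe_forward(x: Vector, experts: Sequence[Expert],
                gate_logits: Sequence[float], k: int = 2) -> Vector:
    """Run only the selected experts and mix their outputs by gate weight."""
    out = [0.0] * len(x)
    for idx, weight in route_top_k(gate_logits, k):
        y = experts[idx](x)  # experts that were not selected are never called
        out = [o + weight * v for o, v in zip(out, y)]
    return out


# Four toy experts (hypothetical) that each scale the input by a fixed factor.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.0, 5.0, 5.0, -3.0]
routes = route_top_k(gate_logits, k=2)
mixed = moe_forward([1.0, 1.0], experts, gate_logits, k=2)
```

With these toy values only experts 1 and 2 run, each with weight 0.5, so two of the four experts do no work for this token. That is the sense in which total parameter count and per-token compute are decoupled.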
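The idea behind a verifiable reward in RLVR is that a program, not a learned judge, decides whether a response is correct. The sketch below shows one common shape for a math task. The reward functions actually used in ASystem are not described here, so the `\boxed{...}` answer convention, the exact-match comparison, and both function names are assumptions chosen for illustration.

```python
import re
from typing import Optional


def extract_boxed(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in a response, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1].strip() if matches else None


def verifiable_reward(response: str, reference: str) -> float:
    """Binary reward: 1.0 when the extracted answer equals the reference."""
    answer = extract_boxed(response)
    if answer is None:
        return 0.0  # no parseable final answer, so nothing can be verified
    return 1.0 if answer == reference.strip() else 0.0


# Hypothetical responses to a problem whose reference answer is "204".
good = verifiable_reward(r"So the total is \boxed{204}.", "204")
bad = verifiable_reward(r"I think it is \boxed{17}.", "204")
missing = verifiable_reward("The answer is 204.", "204")
```

A production checker would usually normalize equivalent forms (for example `1/2` and `0.5`) before comparing; exact string matching is used here only to keep the sketch short.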

Project Repository

- Hugging Face Model Hub: https://huggingface.co/inclusionAI/Ring-1T-preview
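A minimal sketch of loading the checkpoint from the repository above with the Hugging Face `transformers` library follows. It uses the generic `AutoTokenizer` / `AutoModelForCausalLM` loading path. Several details are assumptions rather than confirmed by this article: that the repository ships a chat template, that `trust_remote_code=True` is needed, and the `max_new_tokens` default. A trillion-parameter model also needs a large multi-GPU host or a dedicated serving stack, so treat `generate` as a template rather than something to run on a workstation.

```python
from typing import Dict, List

MODEL_ID = "inclusionAI/Ring-1T-preview"  # repository name from the link above


def build_messages(question: str) -> List[Dict[str, str]]:
    """Wrap a user question in the role/content format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate(question: str, max_new_tokens: int = 1024) -> str:
    """Load the checkpoint and answer one question (very hardware-intensive)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # trust_remote_code=True is an assumption; check the model card first.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",   # use the dtype stored in the checkpoint
        device_map="auto",    # shard across available devices (needs accelerate)
        trust_remote_code=True,
    )

    # Render the conversation to a prompt string, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated part.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("What is 17 * 23?")` would download the weights on first use. The model card in the repository is the authoritative source for the recommended inference setup.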

Application Scenarios of Ring-1T-preview

- Natural Language Reasoning: Suited to mathematical and logical reasoning tasks; the model scored 92.6 on AIME 2025, close to GPT-5’s 94.6.

- Code Generation and Optimization: Scored 94.69 on the CodeForces benchmark, outperforming GPT-5, which makes it a candidate for code generation tasks.

- Multi-agent Frameworks: Can be integrated into the multi-agent framework AWorld to test and explore complex reasoning tasks.

- Academic Research & Development: As the world’s first open-source trillion-parameter reasoning model, it gives researchers and developers a high-performance, reproducible foundation for reasoning work and promotes transparency and collaborative innovation in the large-model ecosystem.
