What is Code2Video?

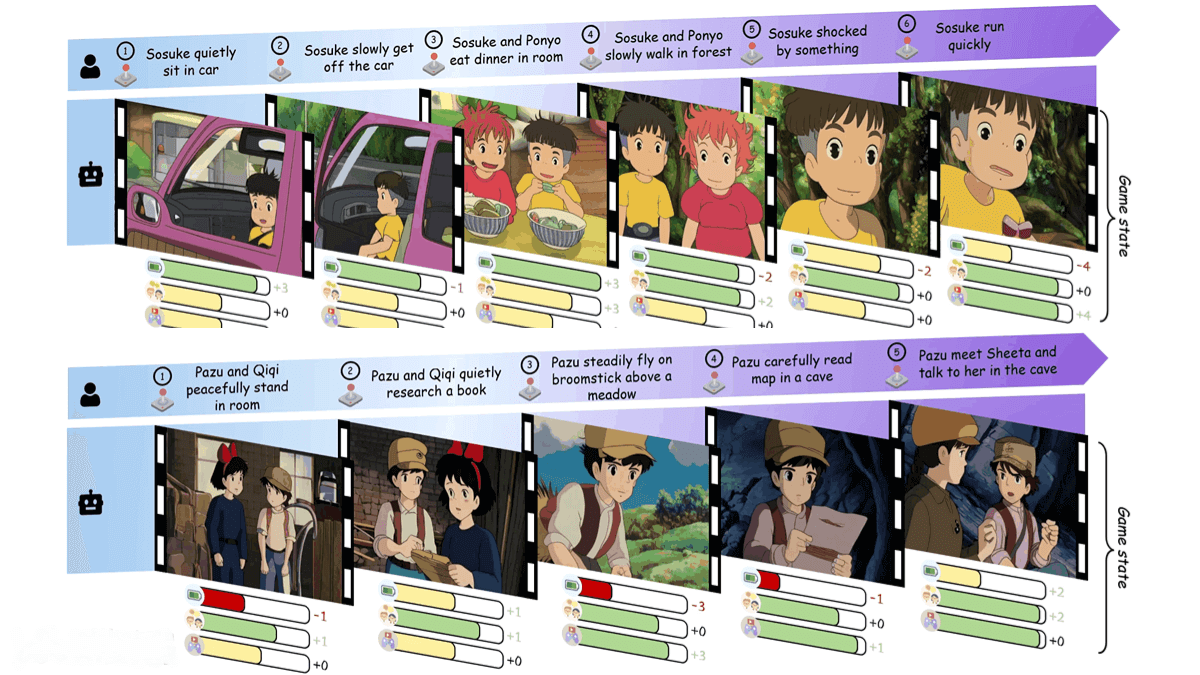

Code2Video is an AI framework for generating instructional videos, developed by the Show Lab team at the National University of Singapore. It creates educational videos automatically by generating executable Python (Manim) code instead of manipulating pixels. Its core innovation is a three-agent collaboration model:

- Planner: converts knowledge points into a structured storyboard.

- Coder: transforms the storyboard into executable Manim code.

- Critic: optimizes the visual layout.

Compared with traditional video generation tools, Code2Video reports better knowledge transfer (a 40% improvement on the MMMC benchmark) and more stable video output. This makes it especially suitable for subjects such as mathematics and programming, which require precise visual representation.
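To make the Planner, Coder, Critic flow more concrete, here is a minimal sketch of how data could move between the three roles. It is an illustration only, not the project's code: `Section`, `plan_storyboard`, `write_manim_source`, and `review_source` are hypothetical names, the planner and coder are plain-Python stand-ins for LLM calls, and the critic stand-in only checks that the generated source parses (the real Critic reviews layout).

```python
import ast
from dataclasses import dataclass


@dataclass
class Section:
    """One storyboard entry: a title plus the lines to show on screen."""
    title: str
    lines: list[str]


def plan_storyboard(topic: str, points: list[str]) -> list[Section]:
    """Planner stand-in: one section per knowledge point (no LLM involved)."""
    return [
        Section(title=f"{topic}: part {i}", lines=[point])
        for i, point in enumerate(points, start=1)
    ]


def write_manim_source(sections: list[Section], scene_name: str = "Lesson") -> str:
    """Coder stand-in: turn the storyboard into Manim source text."""
    body = []
    for section in sections:
        body.append(f"        title = Text({section.title!r}).to_edge(UP)")
        body.append("        self.play(Write(title))")
        for line in section.lines:
            body.append(f"        note = Text({line!r}, font_size=32)")
            body.append("        self.play(FadeIn(note))")
            body.append("        self.wait(1)")
            body.append("        self.play(FadeOut(note))")
        body.append("        self.play(FadeOut(title))")
    header = [
        "from manim import *",
        "",
        "",
        f"class {scene_name}(Scene):",
        "    def construct(self):",
    ]
    # An empty storyboard still yields a valid (empty) scene.
    return "\n".join(header + (body or ["        pass"])) + "\n"


def review_source(source: str) -> list[str]:
    """Critic stand-in: report a problem if the generated source does not parse."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error: {exc}"]
    return []


if __name__ == "__main__":
    storyboard = plan_storyboard("Pythagoras", ["a^2 + b^2 = c^2", "Example: 3, 4, 5"])
    source = write_manim_source(storyboard)
    print(source)
    print("issues:", review_source(source))
```

The sketch itself needs only the standard library. Rendering the printed script would require Manim to be installed, for example by saving it as `lesson.py` and running `manim -pql lesson.py Lesson`.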

Key Features of Code2Video

- Code-Centric Generation Paradigm: Uses Manim code as a unified medium to control both temporal sequences and spatial layouts, ensuring clear, coherent, and reproducible content.

- Modular Three-Agent Collaboration: Planner (storyboard expansion), Coder (debuggable code synthesis), and Critic (layout optimization) work together for structured video generation.

- Multidimensional Evaluation System: Measures efficiency, aesthetics, and end-to-end knowledge transfer (e.g., TeachQuiz and AES metrics) to comprehensively assess video quality.

- Flexible Script Generation: Supports single-concept or batch video generation with configurable API selection, output directories, and parallel processing options.

- Rich Visual Resource Integration: Integrates external APIs (e.g., IconFinder, Icons8) to fetch icons and other visual assets, enhancing video appearance.
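As a rough picture of what batch generation with a configurable API, output directory, and parallelism might look like, consider the sketch below. Everything in it is assumed for illustration: `BatchConfig`, `generate_one`, and `generate_batch` are hypothetical names rather than the project's scripts or flags, and the per-concept job only writes a placeholder file where a real run would call an LLM and render a video.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class BatchConfig:
    api: str              # hypothetical label for the LLM backend to use
    output_dir: Path      # where generated files are written
    max_workers: int = 4  # number of concepts processed in parallel


def generate_one(concept: str, cfg: BatchConfig) -> Path:
    """Placeholder job: writes a stub file instead of generating a video."""
    slug = "_".join(concept.lower().split())
    target = cfg.output_dir / f"{slug}.py"
    target.write_text(f"# generated via {cfg.api} for: {concept}\n", encoding="utf-8")
    return target


def generate_batch(concepts: list[str], cfg: BatchConfig) -> list[Path]:
    """Run one job per concept on a thread pool and return the output paths."""
    cfg.output_dir.mkdir(parents=True, exist_ok=True)
    with ThreadPoolExecutor(max_workers=cfg.max_workers) as pool:
        # pool.map preserves input order, so results line up with `concepts`.
        return list(pool.map(lambda c: generate_one(c, cfg), concepts))


if __name__ == "__main__":
    config = BatchConfig(api="demo-api", output_dir=Path("out"), max_workers=2)
    for path in generate_batch(["Binary Search", "Bubble Sort"], config):
        print(path)
```

Threads are used here on the assumption that the real work is dominated by waiting on API calls and render subprocesses. A process pool would be the alternative if the per-concept work were CPU-bound Python.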

Technical Principles

- Code-Centric Generation Paradigm: Uses Manim code as the primary medium. Instead of manipulating pixels directly, Code2Video generates executable animation scripts, controlling both temporal sequences and spatial layout to ensure structured, reproducible content.

- Multi-Agent Collaboration Framework:
  - Planner: Parses input content (text or concepts) and generates a storyboard with logical structure and keyframe sequence.
  - Coder: Uses LLMs (e.g., GPT-4) to generate Manim-compliant animation code, supporting iterative debugging and optimization.
  - Critic: Checks layout and visual consistency, correcting errors such as object collisions or improper animation timing.

- Manim Engine Rendering: Generated code is rendered with Manim, which uses vector graphics to produce high-precision mathematical formulas, dynamic charts, and smooth animation transitions. The rendered frames are then compressed by a video encoder, which is where frame partitioning, transforms, quantization, and entropy coding take place, and written to formats such as MP4.

- External Resource Integration: Automatically inserts visual elements such as icons and backgrounds from external APIs to enrich video content.

- Evaluation & Optimization: Uses TeachQuiz and AES (the aesthetics score) to assess knowledge accuracy, visual smoothness, and learner outcomes, and uses the results to iteratively refine code generation strategies.
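The Critic's object-collision check mentioned above can be pictured as a bounding-box overlap test. The following is a minimal sketch under stated assumptions, not the framework's actual method: `Box` and `find_collisions` are hypothetical names, and each on-screen object is reduced to an axis-aligned rectangle given by its center and size.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box of one on-screen object, in scene units."""
    name: str
    x: float       # center x
    y: float       # center y
    width: float
    height: float

    def overlaps(self, other: "Box") -> bool:
        # Boxes overlap when, on both axes, the distance between centers
        # is smaller than the sum of the two half-sizes.
        return (
            abs(self.x - other.x) * 2 < self.width + other.width
            and abs(self.y - other.y) * 2 < self.height + other.height
        )


def find_collisions(boxes: list[Box]) -> list[tuple[str, str]]:
    """Return the names of every pair of boxes that overlap."""
    return [(a.name, b.name) for a, b in combinations(boxes, 2) if a.overlaps(b)]


if __name__ == "__main__":
    layout = [
        Box("title", 0.0, 3.0, 6.0, 1.0),
        Box("formula", 0.0, 2.8, 4.0, 1.0),
        Box("icon", 5.0, -2.0, 1.0, 1.0),
    ]
    print(find_collisions(layout))
```

In a Manim scene, an object's `get_center()`, `width`, and `height` could supply these values. A critic could then report the colliding pairs back to the code-generation step so that one of the objects is moved.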

Project Links

- Official Website: https://showlab.github.io/Code2Video/

- GitHub Repository: https://github.com/showlab/Code2Video

- arXiv Paper: https://arxiv.org/pdf/2510.01174

Use Cases of Code2Video

- Educational Video Generation: Teachers can quickly convert abstract knowledge (e.g., math formulas, physics laws) into dynamic instructional videos. Step-by-step animations improve knowledge transfer, and the approach suits K-12, higher education, and professional training.

- Research and Academic Presentations: Researchers can generate animations for technical principles, experiment simulations, or paper results, clearly illustrating complex material such as algorithms or scientific experiments.

- Corporate Training & Skills Instruction: Creates standardized operational guides, software tutorials, safety demonstrations, or product training videos. Code-controlled workflows ensure accuracy and consistency while reducing training costs.

- Personalized Learning Content: Generates custom videos tailored to learner needs (e.g., language learning, coding instruction), embedding interactive elements such as exercises and dynamic feedback to adapt to different learning paces and levels.

- Science Communication & Knowledge Dissemination: Enables media creators to rapidly produce educational animations (e.g., astronomy explanations, historical event reconstructions), making complex concepts more accessible and engaging to the public.