What is Coral NPU?

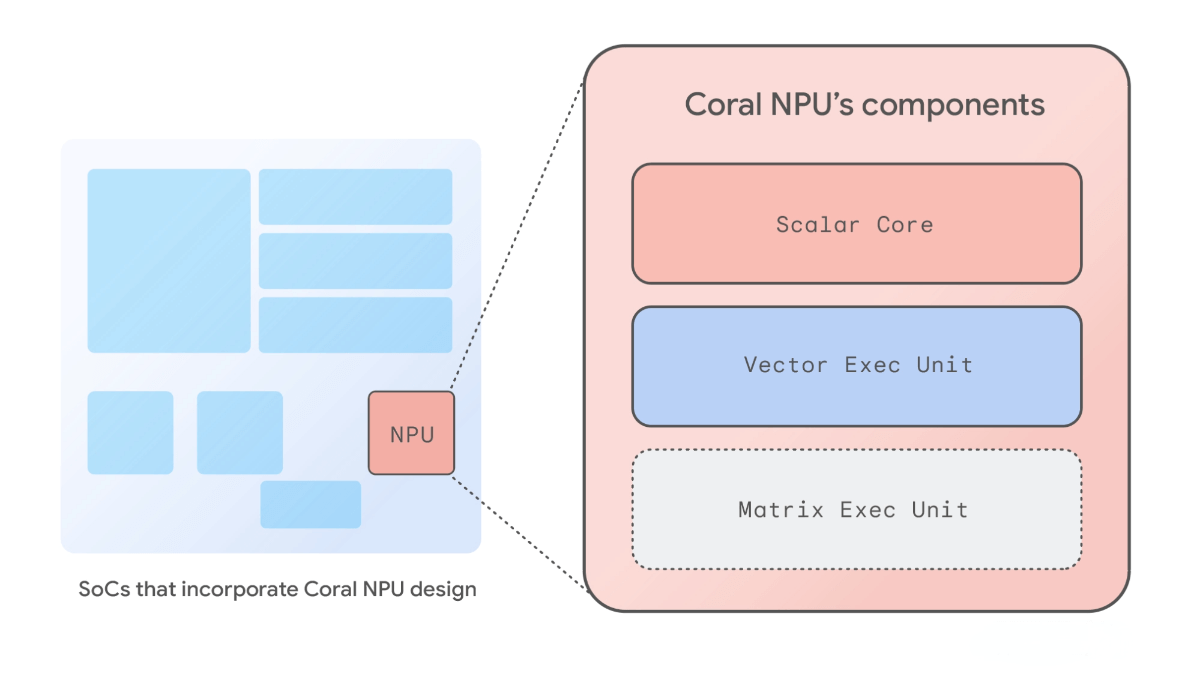

Coral NPU is Google’s full-stack, open-source AI platform designed for low-power edge devices such as smartwatches and AR glasses. It addresses three key challenges: performance, fragmentation, and privacy. Built on the RISC-V instruction set, Coral NPU combines a scalar core, a vector execution unit, and a matrix execution unit to support machine learning inference efficiently. It provides a unified developer experience with support for TensorFlow, JAX, and PyTorch, and protects user privacy through hardware-enforced security. The goal is an always-on AI experience with minimal battery consumption.

Main Features of Coral NPU

1. Efficient Machine Learning Inference:

Coral NPU is a neural processing unit (NPU) optimized for low-power edge devices. It efficiently executes machine learning (ML) model inference tasks, supporting a wide range of ML applications including image classification, person detection, pose estimation, and Transformer-based tasks.

2. Ultra-Low Power Operation:

With an optimized hardware architecture, Coral NPU operates at extremely low power levels (only a few milliwatts), making it ideal for wearables, smartwatches, and IoT devices that require continuous AI functionality.

3. Unified Developer Experience:

It provides a complete software toolchain supporting popular ML frameworks such as TensorFlow, JAX, and PyTorch. Using tools such as the IREE compiler and TFLM (TensorFlow Lite Micro), models can be optimized into compact binaries for efficient execution on edge devices.

4. Hardware-Enforced Privacy Protection:

Coral NPU integrates hardware-level security mechanisms such as CHERI technology, which isolates sensitive AI models and personal data within hardware-enforced sandboxes to safeguard user privacy.

5. Customizable Architecture:

Based on the RISC-V instruction set, Coral NPU offers an open and extensible architecture, allowing developers to customize and optimize it according to specific application needs.

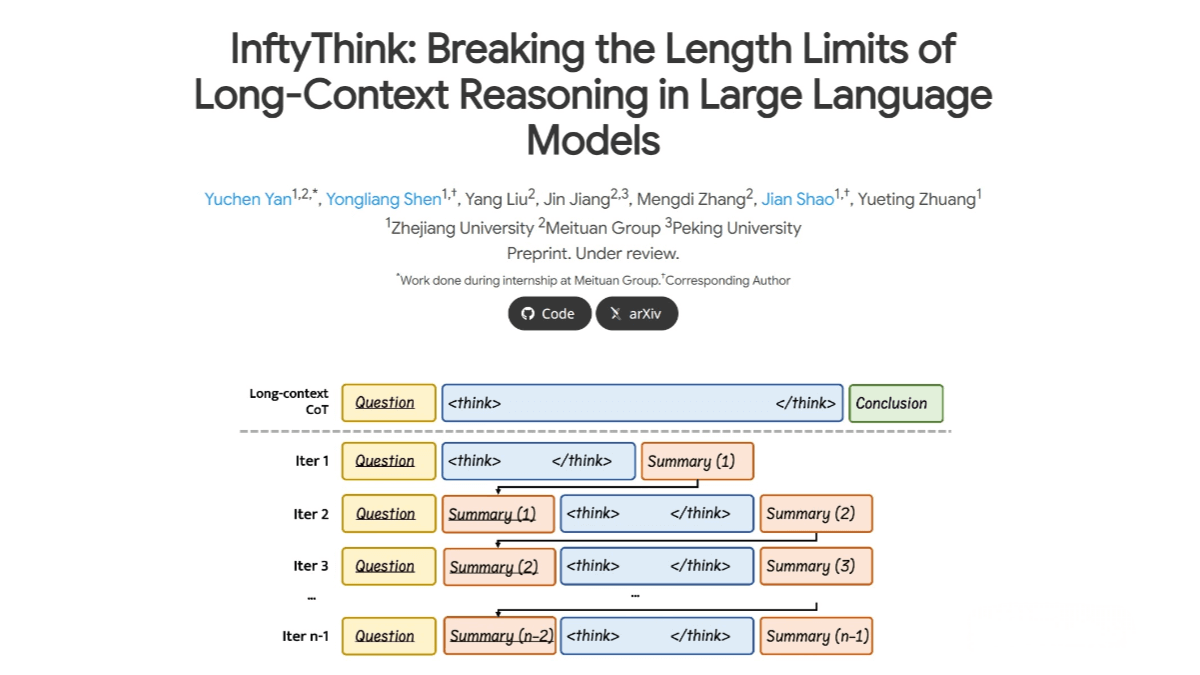

Technical Principles of Coral NPU

AI-First Hardware Design:

Coral NPU is built around an AI-centric hardware architecture that prioritizes the Matrix Engine, a key component for compute-intensive deep learning tasks. By reducing scalar computation overhead and dedicating more resources to AI workloads, Coral NPU achieves high inference efficiency.

RISC-V Instruction Set:

The NPU is based on the open RISC-V instruction set architecture, supporting a 32-bit address space and multiple extensions (integer, floating-point, vector operations). The openness and extensibility of RISC-V allow Coral NPU to be tailored to diverse use cases.

Collaborative Multi-Component Design:

- Scalar Core: Manages data flow to backend cores and performs low-power CPU functions.

- Vector Execution Unit: Supports SIMD (Single Instruction, Multiple Data) operations for efficient large-scale data processing.

- Matrix Execution Unit: A high-efficiency MAC (Multiply-Accumulate) engine designed to accelerate neural network computations.
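To make the division of labor among the three units more concrete, here is a minimal pure-Python sketch of the same multiply-accumulate arithmetic at three granularities: one element at a time (scalar), several lanes per step (SIMD-style), and a full matrix product built from MAC chains. The function names, the lane count of 4, and the plain integer accumulator are illustrative assumptions; they do not describe Coral NPU's actual datapath widths or instructions.

```python
from typing import List

Matrix = List[List[int]]


def mac(acc: int, a: int, b: int) -> int:
    """One multiply-accumulate step: acc + a * b."""
    return acc + a * b


def dot_scalar(a: List[int], b: List[int]) -> int:
    """Scalar style: one MAC per loop iteration."""
    acc = 0
    for x, y in zip(a, b):
        acc = mac(acc, x, y)
    return acc


def dot_vector(a: List[int], b: List[int], lanes: int = 4) -> int:
    """SIMD style: each step handles up to `lanes` elements,
    keeping one partial sum per lane (lane count is hypothetical)."""
    partial = [0] * lanes
    for i in range(0, len(a), lanes):
        chunk = zip(a[i:i + lanes], b[i:i + lanes])
        for lane, (x, y) in enumerate(chunk):
            partial[lane] = mac(partial[lane], x, y)
    # Final reduction across lanes.
    return sum(partial)


def matmul_mac(A: Matrix, B: Matrix) -> Matrix:
    """Matrix style: every output element is a chain of MACs."""
    rows, inner, cols = len(A), len(B), len(B[0])
    out = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                out[i][j] = mac(out[i][j], A[i][k], B[k][j])
    return out


if __name__ == "__main__":
    print(dot_scalar([1, 2, 3, 4, 5], [6, 7, 8, 9, 10]))
    print(dot_vector([1, 2, 3, 4, 5], [6, 7, 8, 9, 10]))
    print(matmul_mac([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

All three functions compute the same arithmetic. The difference a hardware unit makes is how many of these MAC steps complete per cycle, which is why neural network layers dominated by matrix products benefit most from a dedicated matrix engine.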

Compiler Toolchain:

Coral NPU provides a complete toolchain from model development to device deployment. Models from frameworks like TensorFlow and JAX are optimized through MLIR (Multi-Level Intermediate Representation) and compiled via the IREE compiler into hardware-optimized binaries.

Hardware Security Mechanisms:

Coral NPU leverages CHERI-based security, enabling fine-grained memory protection and software partitioning. Sensitive data and models are isolated within hardware sandboxes to prevent memory-based attacks and protect user privacy.
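The idea behind capability-based protection can be illustrated with a toy software model. In CHERI, a pointer carries bounds and permissions that the hardware checks on every access. The sketch below mimics that check in Python. The `Capability`, `Memory`, and `CapabilityFault` names, the field layout, and the region sizes are all hypothetical and chosen for illustration; this is not CHERI's real capability encoding, nor how Coral NPU implements its sandboxes.

```python
from dataclasses import dataclass


class CapabilityFault(Exception):
    """Raised when an access violates a capability's bounds or permissions."""


@dataclass(frozen=True)
class Capability:
    """Toy capability: a region [base, base + length) plus permissions."""
    base: int
    length: int
    can_read: bool = True
    can_write: bool = False

    def check(self, offset: int, size: int, write: bool) -> int:
        """Validate an access and return the absolute address."""
        if offset < 0 or size < 0 or offset + size > self.length:
            raise CapabilityFault("access outside capability bounds")
        if write and not self.can_write:
            raise CapabilityFault("capability does not permit writes")
        if not write and not self.can_read:
            raise CapabilityFault("capability does not permit reads")
        return self.base + offset


class Memory:
    """Flat byte memory that can only be accessed through a capability."""

    def __init__(self, size: int) -> None:
        self._bytes = bytearray(size)

    def load(self, cap: Capability, offset: int, size: int = 1) -> bytes:
        addr = cap.check(offset, size, write=False)
        return bytes(self._bytes[addr:addr + size])

    def store(self, cap: Capability, offset: int, data: bytes) -> None:
        addr = cap.check(offset, len(data), write=True)
        self._bytes[addr:addr + len(data)] = data


if __name__ == "__main__":
    mem = Memory(64)
    model_cap = Capability(base=0, length=16, can_write=True)   # model sandbox
    app_cap = Capability(base=16, length=16, can_write=True)    # app sandbox
    mem.store(model_cap, 0, b"weights")
    try:
        # The app tries to reach back into the model's region.
        mem.load(app_cap, -16, 7)
    except CapabilityFault as fault:
        print("blocked:", fault)
```

Because every load and store goes through `check`, code holding only `app_cap` has no way to name an address inside the model's region. Real hardware enforces the equivalent check in the memory pipeline rather than in software.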

Project Links

- Official Website: https://research.google/blog/coral-npu-a-full-stack-platform-for-edge-ai/

- GitHub Repository: https://github.com/google-coral/coralnpu

Application Scenarios of Coral NPU

1. Context Awareness:

Detects user activities (e.g., walking, running), proximity, or environmental conditions (e.g., indoor/outdoor, in motion) to enable “Do Not Disturb” mode or other context-aware features, providing more intelligent user interactions.

2. Audio Processing:

Supports voice and sound detection, keyword spotting, real-time translation, transcription, and audio-based accessibility features, enhancing the device’s voice interaction capabilities.

3. Image Processing:

Enables person and object detection, facial recognition, gesture recognition, and low-power visual search, giving devices on-device visual perception.

4. User Interaction:

Facilitates gesture-based, voice-based, and sensor-driven interactions for intuitive and natural device control, making human-device interaction more seamless and user-friendly.
