UNO-Bench – An Omni-Modal Large Model Evaluation Benchmark Released by Meituan LongCat

What is UNO-Bench?

UNO-Bench is an omni-modal large model evaluation benchmark introduced by Meituan’s LongCat team. It is designed to close gaps in existing evaluation systems by using high-quality, diverse data to measure both single-modal and omni-modal capabilities. The team reports that the benchmark is the first to verify the “composition law” of omni-modal large models, which describes how single-modal abilities combine into omni-modal ability. Its multi-step open-ended questions improve evaluation granularity, and its clustering-guided sampling method shrinks the evaluation set to improve efficiency, giving researchers a systematic assessment tool for developing omni-modal foundation models.

Main Features of UNO-Bench

Accurate capability assessment:

Uses high-quality, diverse datasets to evaluate model performance across single-modal and omni-modal tasks involving images, audio, video, and text.

Revealing capability composition laws:

Verifies the “composition law” of omni-modal large models for the first time, exposing the intricate relationships between single-modal and omni-modal abilities and offering theoretical support for model optimization.

Innovative evaluation methodology:

Introduces Multi-step Open-ended (MO) questions to assess how well models maintain reasoning depth across complex tasks, effectively distinguishing differences in reasoning capabilities.

Efficient data management:

Adopts a clustering-guided hierarchical sampling method to greatly reduce evaluation costs while maintaining highly consistent model rankings.

Support for multimodal fusion research:

Provides a unified evaluation framework that helps researchers explore multimodal fusion and paves the way for more powerful omni-modal models in the future.

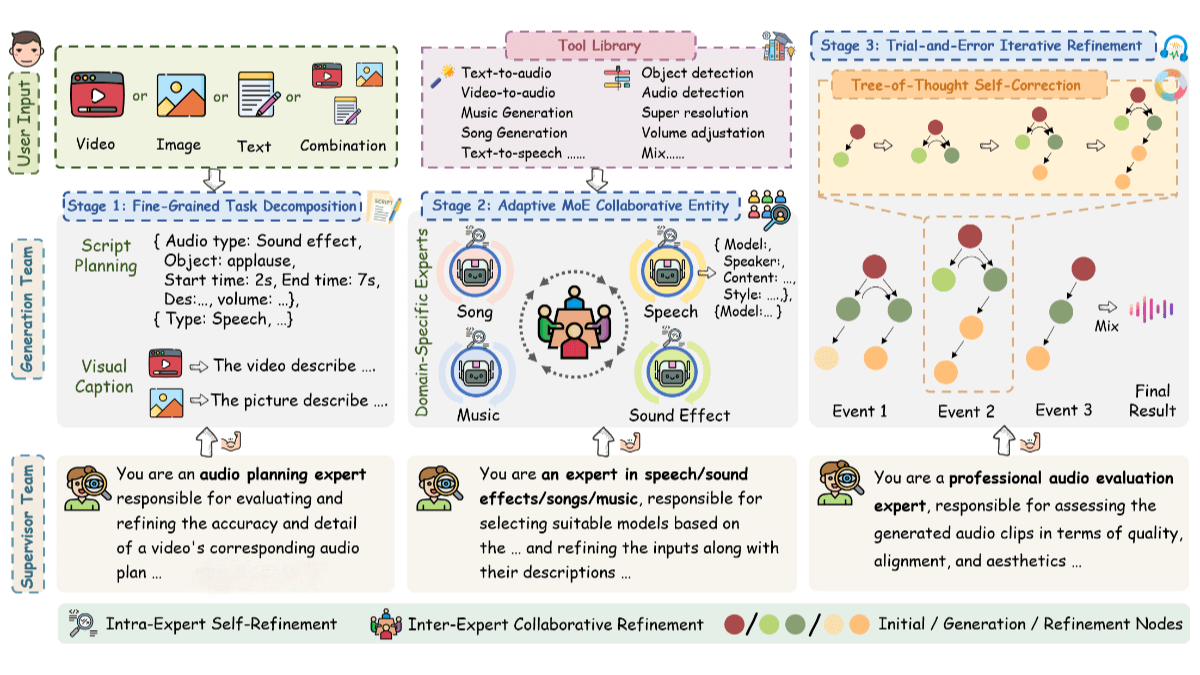

Technical Principles Behind UNO-Bench

Unified capability framework:

Model capabilities are decomposed into a perception layer and a reasoning layer.

- The perception layer covers fundamental skills such as basic recognition and cross-modal alignment.

- The reasoning layer includes advanced tasks such as spatial and temporal reasoning.

This dual-dimensional framework provides a clear blueprint for data construction and model evaluation.
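One way to picture the framework in practice is to tag every evaluated question with its layer and skill, then report accuracy per layer. The sketch below is illustrative only: the `Layer`, `Result`, and `accuracy_by_layer` names and the sample records are assumptions, not part of the UNO-Bench codebase.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Layer(Enum):
    PERCEPTION = "perception"
    REASONING = "reasoning"


@dataclass(frozen=True)
class Result:
    question_id: str
    layer: Layer
    skill: str      # e.g. "cross-modal alignment", "temporal reasoning"
    correct: bool


def accuracy_by_layer(results):
    """Return {layer name: accuracy} over the given results."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for r in results:
        totals[r.layer.value] += 1
        hits[r.layer.value] += int(r.correct)
    return {layer: hits[layer] / totals[layer] for layer in totals}


# Hypothetical records, made up for illustration.
results = [
    Result("q1", Layer.PERCEPTION, "basic recognition", True),
    Result("q2", Layer.PERCEPTION, "cross-modal alignment", False),
    Result("q3", Layer.REASONING, "spatial reasoning", True),
    Result("q4", Layer.REASONING, "temporal reasoning", True),
]
print(accuracy_by_layer(results))  # {'perception': 0.5, 'reasoning': 1.0}
```

Reporting the two layers separately makes it easier to tell whether a model fails because it misperceives the input or because it reasons poorly over correctly perceived input.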

High-quality data construction:

- Data collection & annotation: Data is manually labeled with multi-round quality checks to ensure high quality and diversity. Over 90% of the dataset is private and original, preventing data contamination.

- Cross-modal solvability: Ablation studies ensure that more than 98% of questions genuinely require multimodal information, avoiding redundancy from single-modal cues.

- Audio-visual recombination: Audio content is designed independently and manually paired with visual materials to break information redundancy, compelling models to perform true cross-modal fusion.

- Data optimization & compression: A clustering-guided hierarchical sampling method selects representative samples from large-scale data, reducing evaluation costs while preserving ranking consistency.
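The sampling idea in the last item can be sketched as proportional sampling within clusters. This is a minimal illustration under stated assumptions, not the authors' algorithm: it assumes each question already has a cluster label (for example, from clustering question embeddings), and the `sample_by_cluster` function and the toy data are hypothetical.

```python
import random
from collections import defaultdict


def sample_by_cluster(cluster_of, budget, seed=0):
    """Pick about `budget` item ids, spread proportionally across clusters.

    cluster_of: dict mapping item id -> cluster label (assumed precomputed).
    Every cluster keeps at least one item so rare question types survive.
    """
    rng = random.Random(seed)
    clusters = defaultdict(list)
    for item_id, label in cluster_of.items():
        clusters[label].append(item_id)

    total = len(cluster_of)
    picked = []
    for label in sorted(clusters):
        members = sorted(clusters[label])
        # Quota proportional to cluster size, clamped to [1, cluster size].
        quota = max(1, round(budget * len(members) / total))
        quota = min(quota, len(members))
        picked.extend(rng.sample(members, quota))
    return picked


# Toy pool: 100 questions in three clusters of size 60, 30, and 10.
cluster_of = {}
for i in range(100):
    cluster_of[f"q{i}"] = "a" if i < 60 else ("b" if i < 90 else "c")

subset = sample_by_cluster(cluster_of, budget=20)
print(len(subset))  # 20 (12 from "a", 6 from "b", 2 from "c")
```

Because of rounding and the one-item minimum, the subset size can differ slightly from `budget` on other inputs. Whether a subset preserves model rankings would still need to be checked, for instance by comparing rank correlation between full-set and subset scores.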

Innovative evaluation methods:

Complex reasoning tasks are decomposed into multiple sub-questions requiring open-ended text answers. Expert-weighted scoring provides precise measurement of reasoning capabilities. Through fine-grained question type categorization and iterative multi-round annotation, the system automatically scores multiple types of questions with an accuracy of up to 95%.
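As a rough sketch of how expert-weighted scoring over sub-questions might work, the example below computes the weighted fraction of sub-questions judged correct. The `SubQuestion` type, the weights, and the `exact_match` judge are all hypothetical stand-ins; the actual benchmark scores open-ended answers automatically, which this simple string comparison does not reproduce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubQuestion:
    prompt: str
    reference: str
    weight: float   # expert-assigned importance (hypothetical values)


def exact_match(answer, reference):
    """Placeholder judge: case-insensitive string equality."""
    return answer.strip().lower() == reference.strip().lower()


def mo_score(sub_questions, answers, judge=exact_match):
    """Weighted fraction of sub-questions judged correct, in [0, 1]."""
    if len(sub_questions) != len(answers):
        raise ValueError("one answer is required per sub-question")
    total = sum(sq.weight for sq in sub_questions)
    earned = sum(
        sq.weight
        for sq, answer in zip(sub_questions, answers)
        if judge(answer, sq.reference)
    )
    return earned / total


# Made-up three-step question; later steps carry more weight.
steps = [
    SubQuestion("Which instrument is heard?", "violin", 2.0),
    SubQuestion("Who is playing it in the video?", "the woman", 3.0),
    SubQuestion("Why does she stop playing?", "a string breaks", 5.0),
]
print(mo_score(steps, ["Violin", "the woman", "she is tired"]))  # 0.5
```

Weighting later steps more heavily rewards models that sustain a reasoning chain to its end, rather than only answering the easy opening steps.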

Verification of the composition law:

Regression analysis and ablation experiments show that omni-modal performance is not a simple linear sum of single-modal abilities. Instead, it follows a power-law synergistic pattern. This nonlinear relationship provides a new analytical framework for evaluating fusion efficiency.
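To make the power-law idea concrete, the sketch below fits a curve of the form y = c · x^α by least squares in log-log space. The text above does not state the exact formula, so using the product of audio and visual accuracy as x is an assumption made only for illustration, and the data points are synthetic, not results from the paper.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of y = c * x**alpha in log-log space; returns (c, alpha)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx = sum(lx) / n
    my = sum(ly) / n
    sxx = sum((a - mx) ** 2 for a in lx)
    sxy = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    alpha = sxy / sxx
    c = math.exp(my - alpha * mx)
    return c, alpha


# Synthetic (audio, visual) accuracies, NOT results from the paper.
single_modal = [(0.5, 0.6), (0.6, 0.7), (0.7, 0.8), (0.8, 0.9)]
products = [a * v for a, v in single_modal]
omni = [p ** 2 for p in products]   # generated with c = 1, alpha = 2

c, alpha = fit_power_law(products, omni)
print(round(c, 3), round(alpha, 3))
```

Under this assumed form, an exponent above 1 would mean omni-modal accuracy falls off faster than the single-modal product for weak models and catches up for strong ones, which is one way to read a nonlinear, synergistic relationship.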

Project Links for UNO-Bench

- Official Website: https://meituan-longcat.github.io/UNO-Bench/

- GitHub Repository: https://github.com/meituan-longcat/UNO-Bench

- HuggingFace Dataset: https://huggingface.co/datasets/meituan-longcat/UNO-Bench

- arXiv Paper: https://arxiv.org/pdf/2510.18915

Application Scenarios of UNO-Bench

Model development and optimization:

Provides standard evaluation tools to help developers refine model architectures and improve multimodal fusion capabilities.

Industry application evaluation:

Assesses model performance in multimodal interaction scenarios, in fields such as intelligent customer service and autonomous driving, to help improve user experience.

Academic research and competitions:

Serves as a unified benchmark for academic analysis, model comparison, and multimodal competitions, driving technological breakthroughs.

Product development and market evaluation:

Helps companies assess product functionality and competitive positioning, offering scientific support for multimodal product development.

Cross-modal application development:

Supports domains such as multimedia content creation and intelligent security, enhancing performance and reliability in multimodal applications.
