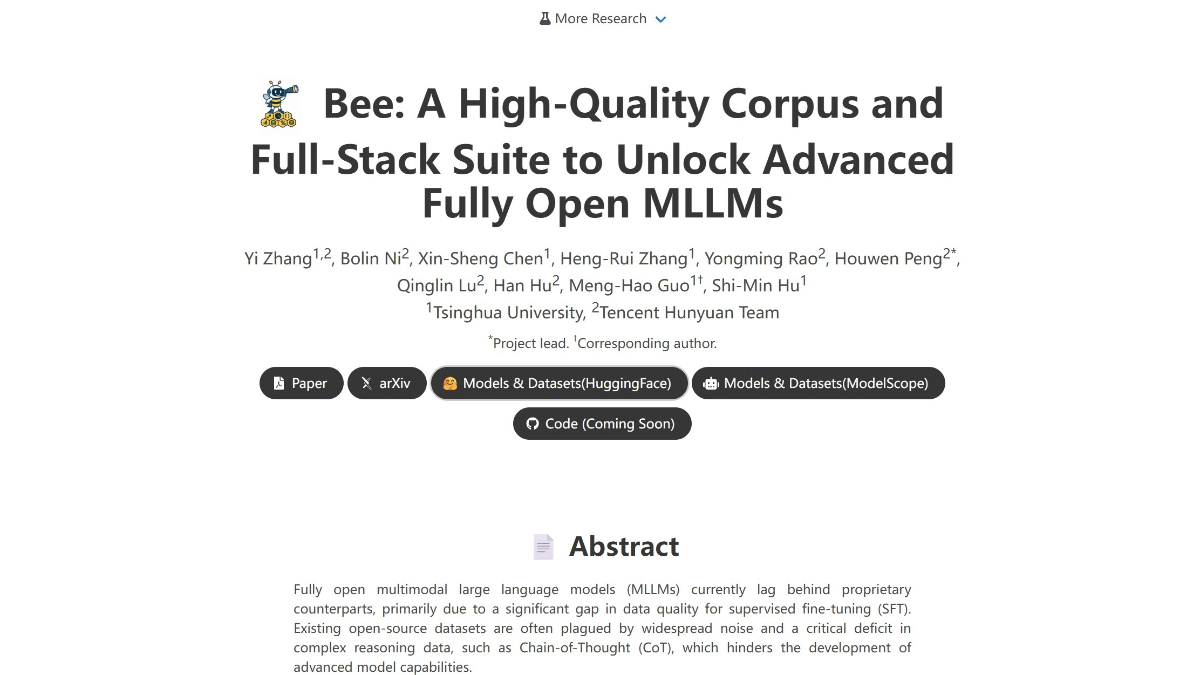

Bee – A Full-Stack Multimodal Large Model Solution Jointly Open-Sourced by Tsinghua University and Tencent

What is Bee?

Bee is a high-quality Multimodal Large Language Model (MLLM) project jointly developed by Tsinghua University and Tencent’s Hunyuan team. It aims to address performance bottlenecks in open-source models caused by insufficient data quality. The project’s core contributions include:

- Honey-Data-15M, a high-quality supervised fine-tuning dataset containing around 15 million Q&A pairs. The dataset is enhanced through multi-step cleaning and a dual-layer Chain-of-Thought (CoT) expansion strategy to significantly improve data quality.
- HoneyPipe and DataStudio, open-source data processing pipelines and frameworks that provide transparent and fully reproducible data preparation workflows.
- Bee-8B, an 8-billion-parameter model trained on Honey-Data-15M. Bee-8B achieves new SOTA results across multiple benchmarks among fully open-source MLLMs, performing on par with or even surpassing several semi-open models.

Key Features

High-quality dataset construction:

Releases Honey-Data-15M, a 15-million-sample supervised fine-tuning dataset meticulously cleaned and enhanced with dual-layer CoT reasoning, dramatically improving data quality and forming a solid foundation for multimodal model training.

Full-stack data processing pipeline:

Open-sources HoneyPipe and DataStudio, providing an end-to-end data workflow (data aggregation, noise filtering, and CoT enhancement) that is transparent and reproducible. This goes beyond the common practice of releasing only a static dataset, because others can rerun and adapt the pipeline itself.

High-performance model training and evaluation:

Bee-8B is trained on Honey-Data-15M and achieves SOTA performance among fully open-source multimodal LLMs across various benchmarks, demonstrating the crucial role of high-quality data in boosting model capabilities.

Open-source ecosystem:

Provides a complete suite of open-source resources, including datasets, processing pipelines, training recipes, evaluation tools, and model weights, accelerating community development and supporting research and applications in the multimodal LLM field.

Technical Principles of Bee

Data aggregation and deduplication:

Collects large volumes of image-text pairs from multiple sources and applies strict deduplication to ensure dataset diversity and efficient processing.
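As a rough illustration of the deduplication step, the sketch below removes exact duplicates by hashing each sample's image bytes together with its normalized text. It is a minimal sketch only: the field names (`image`, `text`), the normalization rule, and the choice of SHA-256 are assumptions made for this example, not the method HoneyPipe actually uses.

```python
import hashlib


def sample_key(image_bytes: bytes, text: str) -> str:
    """Fingerprint a sample from its image bytes and case/whitespace-normalized text."""
    normalized = " ".join(text.lower().split())
    digest = hashlib.sha256()
    digest.update(image_bytes)
    digest.update(b"\x00")  # separator so image and text bytes cannot run together
    digest.update(normalized.encode("utf-8"))
    return digest.hexdigest()


def deduplicate(samples):
    """Keep the first occurrence of each (image, text) fingerprint."""
    seen = set()
    unique = []
    for sample in samples:
        key = sample_key(sample["image"], sample["text"])
        if key not in seen:
            seen.add(key)
            unique.append(sample)
    return unique


# Hypothetical toy data: the second sample differs only in case and spacing.
samples = [
    {"image": b"img-a", "text": "What color is the cat?"},
    {"image": b"img-a", "text": "what  color is the cat?"},
    {"image": b"img-b", "text": "What color is the cat?"},
]
unique_samples = deduplicate(samples)
```

Exact hashing only catches byte-identical images; near-duplicate detection (for example, perceptual hashing) would need a different fingerprint function.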

Noise filtering:

Combines rule-based methods with model-based approaches to remove noisy samples such as malformed formats, low-quality images, or mismatched instructions.
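The combination of rules and a model check could be sketched as follows. The specific rules, the `min_side` threshold, the sample fields, and the `model_check` callable are all hypothetical placeholders chosen for illustration; the source does not specify the actual filters.

```python
def rule_based_issues(sample, min_side=32):
    """Return a list of rule violations for one sample (empty list means it passes)."""
    issues = []
    question = sample.get("question", "").strip()
    answer = sample.get("answer", "").strip()
    if not question or not answer:
        issues.append("empty_text")
    width, height = sample.get("image_size", (0, 0))
    if min(width, height) < min_side:
        issues.append("image_too_small")
    return issues


def filter_noise(samples, model_check=None):
    """Apply cheap rules first, then an optional model-based check on the survivors."""
    kept = []
    for sample in samples:
        if rule_based_issues(sample):
            continue
        if model_check is not None and not model_check(sample):
            continue
        kept.append(sample)
    return kept


# Stand-in for a model that judges whether the instruction matches the image.
def toy_model_check(sample):
    return sample.get("matches_image", True)


samples = [
    {"question": "What is shown?", "answer": "A dog.", "image_size": (640, 480)},
    {"question": "", "answer": "A dog.", "image_size": (640, 480)},
    {"question": "What is shown?", "answer": "A dog.", "image_size": (8, 8)},
    {"question": "Solve x.", "answer": "3", "image_size": (640, 480), "matches_image": False},
]
clean = filter_noise(samples, model_check=toy_model_check)
```

Running the rules before the model check keeps the expensive model call off samples that are already known to be malformed.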

Chain-of-Thought (CoT) expansion:

Generates rich reasoning traces using both short and long CoT strategies, enhancing model reasoning capabilities for tasks of varying complexity.
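One way to picture the short versus long CoT split is as a routing step that picks a prompt template per sample. The sketch below is an assumption-heavy illustration: the prompt wording, the `task` field, and the `looks_complex` heuristic are invented for this example and are not taken from the Bee pipeline.

```python
# Hypothetical prompt templates for the two CoT levels.
SHORT_COT_TEMPLATE = (
    "Question: {question}\n"
    "Known answer: {answer}\n"
    "Explain briefly, in a few steps, how to reach this answer."
)
LONG_COT_TEMPLATE = (
    "Question: {question}\n"
    "Known answer: {answer}\n"
    "Reason step by step in detail, checking intermediate results, "
    "before stating the answer."
)


def looks_complex(sample):
    """Toy heuristic: treat certain task types as needing long-form reasoning."""
    return sample.get("task") in {"math", "chart_reasoning"}


def build_cot_prompt(sample, is_complex=looks_complex):
    """Choose the long or short template and fill it with the sample's fields."""
    template = LONG_COT_TEMPLATE if is_complex(sample) else SHORT_COT_TEMPLATE
    return template.format(question=sample["question"], answer=sample["answer"])


caption_prompt = build_cot_prompt(
    {"task": "caption", "question": "What is in the image?", "answer": "A red car."}
)
math_prompt = build_cot_prompt(
    {"task": "math", "question": "What is the area of the triangle?", "answer": "12"}
)
```

The resulting prompt would then be sent to a generator model; that call is omitted here because it depends on the model and serving setup.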

Fidelity verification:

Uses LLM-as-a-Judge semantic comparison to ensure that generated CoT responses are accurate and consistent.
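The verification step can be thought of as comparing the final answer of a generated CoT response against the original answer and discarding mismatches. In the sketch below, the judge is a pluggable function; the default `exact_match_judge` is a simple string comparison standing in for an LLM judge, and the `Final answer:` marker is an assumed output convention rather than something the source specifies.

```python
import re


def extract_final_answer(cot_response, marker="Final answer:"):
    """Return the text after the last marker, or None if the marker is absent."""
    index = cot_response.rfind(marker)
    if index == -1:
        return None
    return cot_response[index + len(marker):].strip()


def normalize(text):
    """Lowercase and collapse punctuation/whitespace for a lenient comparison."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def exact_match_judge(reference, candidate):
    """Stand-in judge; a real pipeline would ask an LLM for semantic equivalence."""
    return normalize(reference) == normalize(candidate)


def verify_fidelity(original_answer, cot_response, judge=exact_match_judge):
    """Accept a CoT response only if its final answer agrees with the original."""
    final_answer = extract_final_answer(cot_response)
    if final_answer is None:
        return False
    return judge(original_answer, final_answer)


consistent = verify_fidelity(
    "A red car.",
    "The vehicle has four wheels and red paint. Final answer: a red car",
)
inconsistent = verify_fidelity(
    "A red car.",
    "The vehicle appears blue in the photo. Final answer: a blue car",
)
```

Swapping `judge` for a function that queries a language model would turn this into an LLM-as-a-Judge check while leaving the surrounding logic unchanged.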

Model training and optimization:

Bee-8B is trained on the high-quality Honey-Data-15M dataset and optimized using techniques such as Supervised Fine-tuning (SFT) and Reinforcement Learning (RL).

Project Links

- Project website: https://open-bee.github.io/
- HuggingFace model hub: https://huggingface.co/collections/Open-Bee/bee
- arXiv paper: https://arxiv.org/pdf/2510.13795
- Honey-Data-15M dataset: https://huggingface.co/datasets/Open-Bee/Honey-Data-15M

Application Scenarios of Bee

Multimodal content generation:

Generates high-quality image descriptions, video captions, and other multimodal content to enhance creative efficiency and diversity.

Intelligent Q&A systems:

Provides accurate and detailed responses to complex questions using strong reasoning abilities.

Education:

Supports teaching by generating instructional materials or answering student questions, enabling personalized learning.

Research assistance:

Helps researchers organize and analyze data, generate reports, or provide suggestions for experimental design.

Business intelligence:

Analyzes market trends, user feedback, and other data to support decision-making and forecasting.

Healthcare:

Assists with medical diagnosis, generates medical image analysis reports, or supports medical consultation.
