Qwen3-235B-A22B-Thinking-2507 – Alibaba’s Latest Reasoning Model

What is Qwen3-235B-A22B-Thinking-2507?

Qwen3-235B-A22B-Thinking-2507 is Alibaba’s newly released, state-of-the-art open-source reasoning model. It is built on a sparse Mixture-of-Experts (MoE) architecture with 235 billion total parameters, of which about 22 billion are activated per token, and it has a 94-layer Transformer network with 128 experts. The model is designed specifically for complex reasoning tasks and natively supports a context length of up to 256K tokens, which suits long documents and deep reasoning chains.

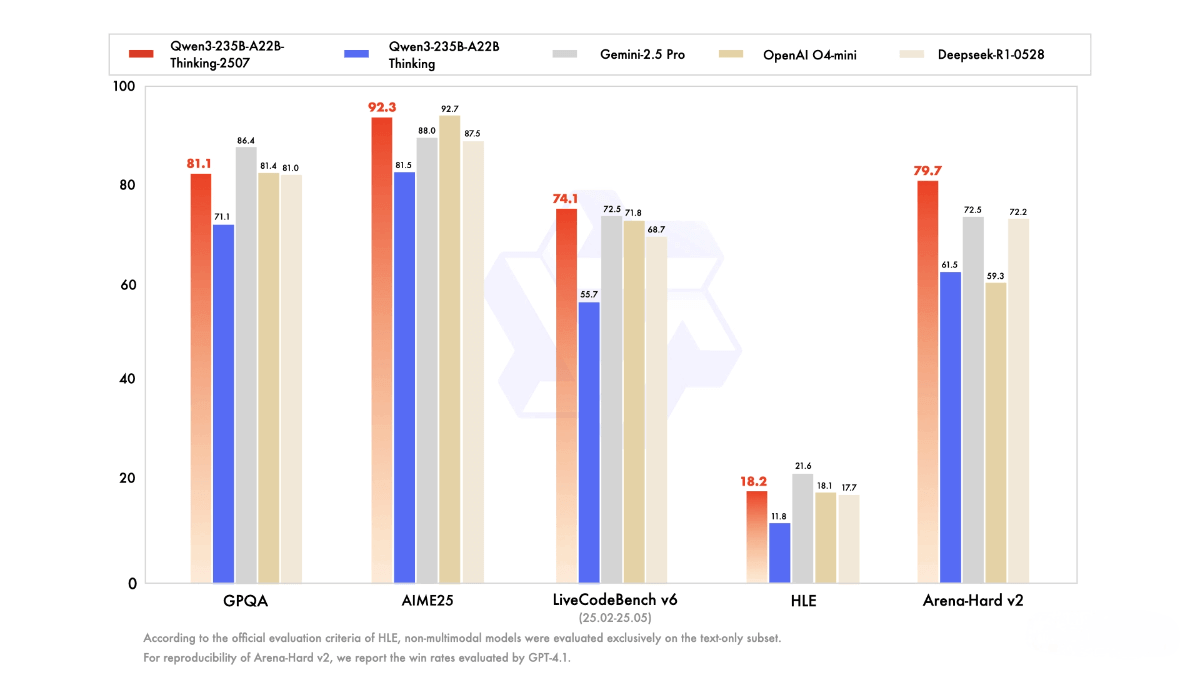

Qwen3-235B-A22B-Thinking-2507 shows significant improvements in core capabilities such as logical reasoning, mathematics, scientific analysis, and programming. It sets new best-in-class results among open-source models on benchmarks such as AIME25 (math) and LiveCodeBench v6 (coding), even outperforming some closed-source models. It also performs well on general tasks such as knowledge understanding, creative writing, and multilingual communication.

The model is released under the Apache 2.0 license, which permits commercial use. Users can try or download it via Qwen Chat, ModelScope, or Hugging Face. Pricing is set at $0.70 per million input tokens and $8.40 per million output tokens.
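To make the per-token pricing concrete, here is a minimal sketch of a cost estimate. The two prices come from the paragraph above; the function name and the example token counts are hypothetical, and the sketch assumes that reasoning ("thinking") tokens are billed as output tokens, which the article does not state.

```python
# Prices quoted in the article, in USD per one million tokens.
INPUT_USD_PER_MILLION = 0.70
OUTPUT_USD_PER_MILLION = 8.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for a single request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: a 20,000-token prompt and 5,000 output tokens
    # (assumed here to include any reasoning tokens).
    print(f"${estimate_cost_usd(20_000, 5_000):.4f}")  # prints $0.0560
```

Because output tokens cost twelve times as much as input tokens at these rates, long reasoning traces are likely to dominate the bill for most requests.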

Key Features of Qwen3-235B-A22B-Thinking-2507

- Logical Reasoning: Excels at complex, multi-step reasoning tasks.
- Mathematical Computation: Shows significant improvements in mathematical tasks, setting new open-source records on difficult tests such as AIME25.
- Scientific Analysis: Tackles complex scientific problems with accurate analysis and explanations.
- Code Generation: Generates high-quality code across various programming languages.
- Code Optimization: Assists developers in optimizing existing code for better performance.
- Debugging Support: Offers debugging suggestions to help developers identify and fix issues quickly.
- 256K Context Window: Natively supports sequences of up to 256K (262,144) tokens, which suits document analysis and long-form conversations.
- Deep Reasoning Chains: Automatically engages in multi-step reasoning without manual mode-switching, making it suitable for deep analytical tasks.
- Multilingual Dialogue: Supports text generation and conversation in multiple languages, enabling cross-lingual applications.
- Instruction Following: Accurately understands and executes user instructions to generate high-quality responses.
- Tool Use: Integrates with external tools to extend functionality.
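As a rough illustration of what the 256K context window means in practice, the sketch below checks whether a prompt leaves enough room for the model's output. It assumes "256K" means 256 × 1024 = 262,144 tokens and that prompt and generated tokens (including reasoning tokens) share the same window. The function names and the example numbers are hypothetical.

```python
# Assumption: "256K" is read as 256 * 1024 = 262,144 tokens.
CONTEXT_WINDOW_TOKENS = 256 * 1024


def remaining_output_budget(prompt_tokens: int,
                            context_window: int = CONTEXT_WINDOW_TOKENS) -> int:
    """Return how many tokens are left for output after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_window - prompt_tokens, 0)


def fits_in_context(prompt_tokens: int,
                    reserved_output_tokens: int,
                    context_window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Check that the prompt plus a reserved output budget fits the window."""
    return prompt_tokens + reserved_output_tokens <= context_window


if __name__ == "__main__":
    prompt_tokens = 200_000  # hypothetical long-document prompt
    print(remaining_output_budget(prompt_tokens))  # prints 62144
    print(fits_in_context(prompt_tokens, 32_768))  # prints True
```

Since a reasoning model can spend many tokens thinking before it answers, reserving a generous output budget is a sensible default when prompts approach the limit.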

Technical Highlights of Qwen3-235B-A22B-Thinking-2507

- Sparse Mixture-of-Experts (MoE) Architecture: The model uses a sparse MoE design with 235 billion total parameters, of which about 22 billion are activated per token. It includes 128 experts, and each token is dynamically routed to 8 of them, balancing efficiency with performance.
- Autoregressive Transformer Structure: Built on a 94-layer autoregressive Transformer backbone, it natively supports context lengths of up to 256K tokens, making it highly capable in long-text tasks.
- Optimized for Reasoning Tasks: The model performs deep reasoning by default, making it especially strong in areas that require structured thinking, such as logic, math, science, programming, and academic benchmarks.
- Two-Stage Training Paradigm: Qwen3-235B-A22B-Thinking-2507 is trained with pretraining followed by post-training, which further enhances performance. It has achieved top results on several benchmarks, including AIME25 and LiveCodeBench.
- Dynamic Activation Mechanism: The MoE framework lets the model dynamically select experts for each token during inference, which improves adaptability and accuracy.
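The routing described above (128 experts, 8 selected per token) can be sketched with generic top-k gating. This is an illustrative sketch, not the model's actual implementation: the function name, the random router scores, and the choice to normalize weights with a softmax over the selected experts are all assumptions.

```python
import math
import random

NUM_EXPERTS = 128  # experts per MoE layer, as stated in the article
TOP_K = 8          # experts selected per token, as stated in the article


def route_token(router_logits: list[float], top_k: int = TOP_K) -> list[tuple[int, float]]:
    """Select the top_k highest-scoring experts for one token.

    Returns (expert_index, weight) pairs, highest score first. Weights are a
    softmax over the selected logits only, so they sum to 1.
    """
    if not 0 < top_k <= len(router_logits):
        raise ValueError("top_k must be between 1 and the number of experts")
    # Indices of the experts with the largest router scores.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Subtract the maximum logit before exponentiating for numerical stability.
    max_logit = router_logits[top[0]]
    exp_scores = [math.exp(router_logits[i] - max_logit) for i in top]
    total = sum(exp_scores)
    return [(i, s / total) for i, s in zip(top, exp_scores)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical router scores for a single token; a real router would
    # compute these from the token's hidden state.
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    for expert, weight in route_token(logits):
        print(f"expert {expert:3d}  weight {weight:.3f}")
```

With these numbers only 8 of 128 experts (6.25%) run for each token in an MoE layer, while 22 billion of 235 billion parameters (roughly 9.4%) are active overall. The difference is plausibly explained by components that run for every token, such as attention layers and embeddings, though the article does not break this down.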

Project Link

- Hugging Face Model Hub: https://huggingface.co/Qwen/Qwen3-235B-A22B-Thinking-2507

Application Scenarios for Qwen3-235B-A22B-Thinking-2507

- Code Generation and Optimization: Generates clean, functional code and helps developers enhance code efficiency.
- Creative Writing: Excels at story creation, copywriting, and ideation, providing detailed and imaginative outputs.
- Academic Writing: Supports writing academic papers and literature reviews with professional-level analysis and recommendations.
- Research Design: Assists in designing scientific research plans, offering logical and well-informed suggestions.
