ViMax – A Multi-Agent Video Generation Framework Open-Sourced by the University of Hong Kong

What is ViMax?

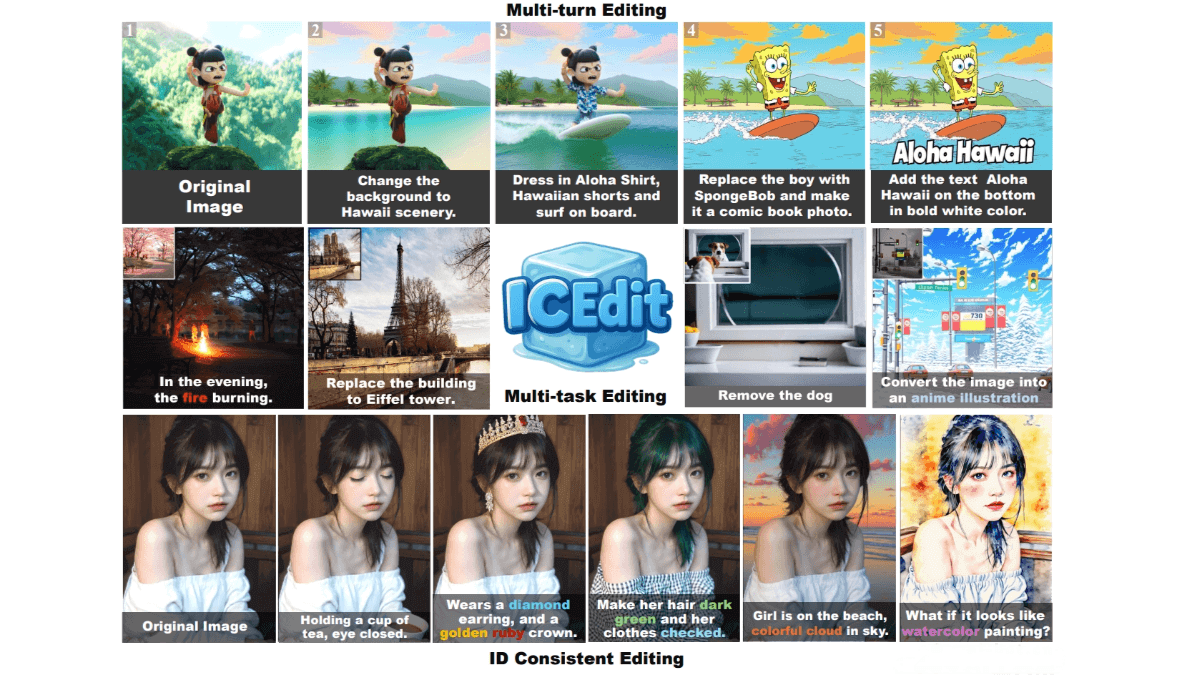

ViMax is an end-to-end multi-agent video generation framework developed by the Data Science Lab at the University of Hong Kong. It automatically turns ideas, scripts, or novels into complete videos. The framework combines the roles of director, screenwriter, producer, and video generator, and supports modes such as Idea2Video, Novel2Video, Script2Video, and AutoCameo. ViMax can generate videos several minutes long while keeping characters and scenes consistent across shots. Through automated shot planning, multi-camera simulation, and automated consistency checking, it covers the whole path from concept to finished video, which simplifies video creation and lowers its technical barrier.

Key Features of ViMax

• Idea2Video

Transforms simple ideas into complete video stories—ideal for creators without detailed scripts.

• Novel2Video

Automatically adapts long-form novels into episodic video content, enabling cinematic visualization of literary works.

• Script2Video

Generates videos directly from detailed, structured scripts, suited to creators who already have a finished screenplay.

• AutoCameo

Generates personalized videos starring the user: upload a photo of yourself, and ViMax creates a video featuring your likeness.

Technical Principles of ViMax

ViMax uses a multi-agent collaborative architecture, breaking down video generation into modular tasks executed by different agents:

1. Input Parsing

Extracts key information—characters, scenes, styles—from ideas or scripts.

2. Script Understanding & Shot Design

Generates detailed storyboards based on extracted information, planning camera angles and narrative pacing.

3. Visual Asset Planning

Selects reference images for each shot and designs its scene layout and visual style.

4. Consistency Checking

Uses multimodal large language models or vision-language models (MLLMs/VLMs) to detect inconsistencies in generated images, ensuring that characters and environments stay continuous throughout the video.

5. Parallel Generation & Composition

Generates shots in parallel to speed up production, then assembles them into the complete final video.
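The five stages above can be sketched as a small Python pipeline. This is a minimal sketch under stated assumptions, not ViMax's implementation: every name in it (`Shot`, `parse_input`, `design_shots`, `plan_assets`, `generate_frame`, `is_consistent`, `render_shot`, `run_pipeline`) is hypothetical, and the LLM, image/video-generation, and MLLM/VLM calls are replaced with deterministic stubs so that only the control flow is shown. One further assumption: because consistency checking operates on generated images, the sketch places that check inside each shot's generate-and-retry loop.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

# All names below are hypothetical illustrations, not ViMax's real API.
# Model calls (LLM, image/video generator, MLLM/VLM) are replaced by stubs.


@dataclass
class Shot:
    index: int
    scene: str
    camera: str
    characters: tuple[str, ...]
    frame: str = ""  # stand-in for a generated image or clip


def parse_input(text: str, characters: list[str]) -> dict:
    """Stage 1 stub: split the idea into scenes (an LLM would do this)."""
    scenes = [s.strip() for s in text.split(".") if s.strip()]
    return {"scenes": scenes, "characters": characters, "style": "cinematic"}


def design_shots(info: dict) -> list[Shot]:
    """Stage 2 stub: one shot per scene, alternating camera angles."""
    angles = ["wide", "close-up"]
    shots = []
    for i, scene in enumerate(info["scenes"]):
        # Naive substring match to find which characters appear in the scene.
        present = tuple(c for c in info["characters"] if c in scene)
        shots.append(Shot(i, scene, angles[i % len(angles)], present))
    return shots


def plan_assets(shot: Shot, info: dict) -> dict:
    """Stage 3 stub: reuse one fixed reference image per character."""
    refs = {c: f"ref_{c.lower()}.png" for c in shot.characters}
    return {"refs": refs, "style": info["style"]}


def generate_frame(shot: Shot, assets: dict) -> str:
    """Stage 5 stub: a real system would call an image/video model here."""
    refs = ",".join(sorted(assets["refs"].values()))
    return f"[{shot.index}:{shot.camera}:{assets['style']}:{refs}]"


def is_consistent(frame: str, assets: dict) -> bool:
    """Stage 4 stub: an MLLM/VLM would compare the frame with the references;
    here we only check that every expected reference was used."""
    return all(ref in frame for ref in assets["refs"].values())


def render_shot(shot: Shot, info: dict, max_retries: int = 2) -> Shot:
    """Plan assets, generate, and regenerate if the consistency check fails."""
    assets = plan_assets(shot, info)
    for _ in range(max_retries + 1):
        # The stub is deterministic; a real model could return a different
        # result on each retry.
        frame = generate_frame(shot, assets)
        if is_consistent(frame, assets):
            shot.frame = frame
            return shot
    raise RuntimeError(f"shot {shot.index} failed the consistency check")


def run_pipeline(text: str, characters: list[str]) -> list[str]:
    info = parse_input(text, characters)
    shots = design_shots(info)
    # Planned shots are independent, so render them concurrently.
    # pool.map yields results in input order, so the final cut stays in sequence.
    with ThreadPoolExecutor(max_workers=4) as pool:
        rendered = list(pool.map(lambda s: render_shot(s, info), shots))
    return [s.frame for s in rendered]  # "composition": shots in story order


if __name__ == "__main__":
    story = "Mia finds a map. Mia and Leo sail at dawn. A storm hits the harbor."
    for frame in run_pipeline(story, ["Mia", "Leo"]):
        print(frame)
```

Running the script prints one placeholder frame per scene, in story order. `ThreadPoolExecutor.map` returns results aligned with the input order even though the shots render concurrently, so the composition step can simply concatenate them. A production system might instead dispatch shots to remote model endpoints or a job queue.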

Project Link

• GitHub Repository: https://github.com/HKUDS/ViMax

Application Scenarios

• Short-Form Video Creation

Creators can quickly turn ideas into short videos for platforms such as TikTok and Bilibili.

• Educational Videos

Converts complex teaching material into engaging visual content that can help learners understand and retain it.

• Interactive Videos

Via AutoCameo, users can embed themselves into videos, enhancing personalization and engagement.

• Novel Visualization

Adapts long-form novels into video episodes, giving literary works a new way to reach audiences.

• Personal Story Videos

Users can turn their own stories or memories into videos for personal archiving or sharing.
