ICEdit – An Instruction-Guided Image Editing Framework Developed Jointly by Zhejiang University and Harvard University

What is ICEdit?

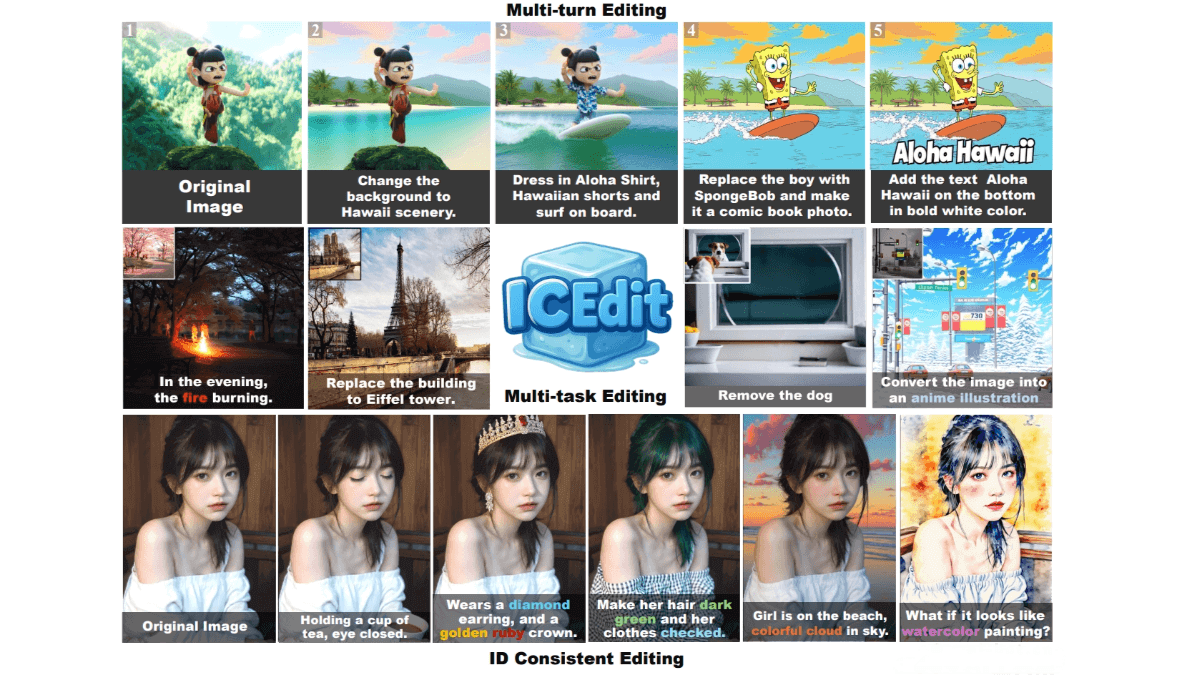

ICEdit (In-Context Edit) is an instruction-driven image editing framework jointly developed by Zhejiang University and Harvard University. It leverages the powerful generative and context-aware capabilities of large-scale Diffusion Transformers to perform precise image edits based on natural language instructions. ICEdit requires only 0.1% of training data and 1% of trainable parameters, significantly reducing resource demands compared to traditional methods. It excels in multi-turn and multi-task editing. With advantages like open-source availability, low cost, and fast processing (about 9 seconds per image), ICEdit is suitable for a wide range of applications.

Key Features of ICEdit

-

Instruction-Driven Image Editing: Precisely edits images based on natural language commands, such as changing backgrounds, adding text, or altering clothing.

-

Multi-Turn Editing: Supports continuous editing across multiple rounds, with each round building on the previous result—ideal for complex creative tasks.

-

Style Transfer: Converts images into different artistic styles, such as watercolor or comic.

-

Object Replacement and Addition: Replaces or inserts elements into images (e.g., replacing a person with a cartoon character).

-

Efficient Processing: Fast performance (about 9 seconds per image), enabling rapid generation and iteration.

Technical Principles Behind ICEdit

-

In-Context Editing Framework: Uses in-context prompting by embedding editing instructions into the generation prompt. The model generates the edited image directly based on the prompt, avoiding the need for architectural modifications. This enables direct instruction-based editing via contextual understanding.

-

LoRA-MoE Hybrid Fine-Tuning: Combines LoRA (Low-Rank Adaptation) for parameter-efficient tuning with Mixture-of-Experts (MoE) for dynamic expert routing. LoRA enables lightweight adaptation to diverse editing tasks, while MoE dynamically selects the best expert modules, enhancing quality and flexibility. Only a small amount of data (50K samples) is needed for fine-tuning, dramatically improving editing success rates.

-

Early Filter Inference-Time Scaling: During inference, early-stage noise samples are evaluated using vision-language models (VLMs) to identify those most aligned with the instruction. With just a few steps (e.g., 4 steps), it selects optimal initial noise, improving final image quality.

Project Resources

-

Official Website: https://river-zhang.github.io/ICEdit-gh-pages/

-

GitHub Repository: https://github.com/River-Zhang/ICEdit

-

HuggingFace Model Hub: https://huggingface.co/sanaka87/ICEdit-MoE-LoRA

-

arXiv Paper: https://arxiv.org/pdf/2504.20690

-

Online Demo: https://huggingface.co/spaces/RiverZ/ICEdit

Application Scenarios for ICEdit

-

Creative Design: Transform photos into artistic styles (e.g., watercolor) or add creative elements for use in design and advertising.

-

Film Production: Quickly generate character designs or scene concepts to support early-stage development.

-

Social Media: Edit personal photos (e.g., change backgrounds, add effects) to create engaging social content.

-

Education: Generate educational illustrations (e.g., turn historical figures into cartoon versions) to support teaching.

-

Commercial Advertising: Quickly create promotional product images by changing backgrounds or adding brand logos.

Related Posts