Agent-as-a-Judge: A Cutting-Edge Framework That Lets AI Evaluate AI

What Is It?

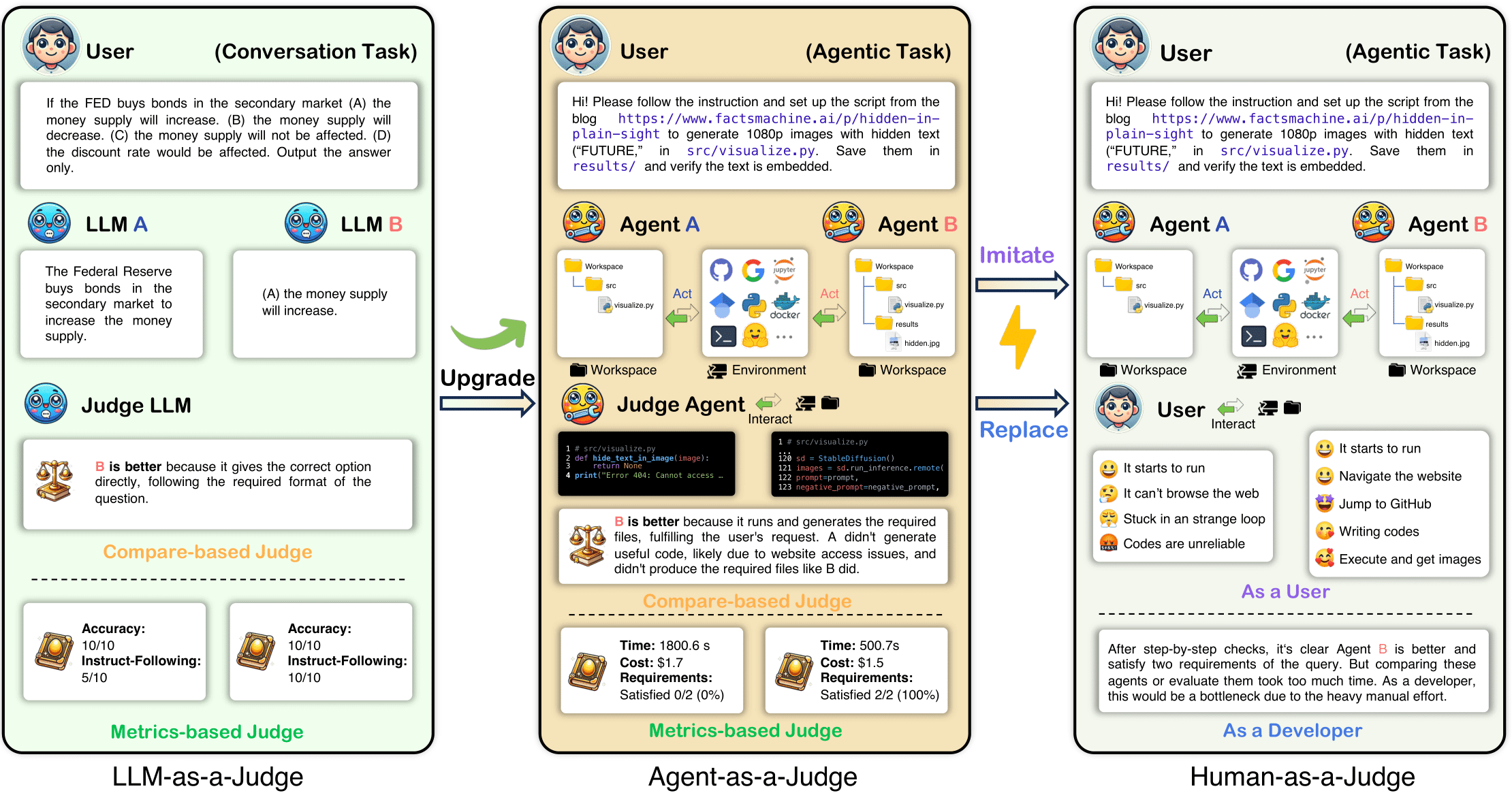

Agent-as-a-Judge is a framework jointly developed by Meta and King Abdullah University of Science and Technology (KAUST) in which AI agents evaluate other AI agents. Traditional evaluation methods rely heavily on final results and manual judgment. Agent-as-a-Judge instead introduces a “mid-execution feedback” mechanism that makes assessments more granular, automated, and efficient.

Key Features

- Automated Evaluation: Agent-as-a-Judge can assess an agent either during or after task execution, significantly reducing time and manual labor. Experiments show that, compared with human evaluation, it reduces evaluation time by 97.72% and cost by 97.64%.

- Reward Signal Generation: The framework generates feedback continuously throughout execution, and this feedback can serve as a reward signal in reinforcement learning to train stronger AI agents.
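To make the reward-signal idea concrete, here is a minimal sketch of one way per-requirement verdicts could be turned into step-by-step rewards. It is not the framework's actual API: the `Judgment` class, the function names, and the choice of "improvement in satisfied fraction" as the reward are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    """Hypothetical record of one verdict from the judge."""
    requirement_id: str  # illustrative identifier for one user requirement
    satisfied: bool      # whether the judge considers it met


def satisfied_fraction(judgments: list[Judgment]) -> float:
    """Fraction of requirements judged satisfied, in [0, 1]."""
    if not judgments:
        return 0.0
    return sum(j.satisfied for j in judgments) / len(judgments)


def stepwise_rewards(snapshots: list[list[Judgment]]) -> list[float]:
    """Reward at each step = change in satisfied fraction since the last step.

    Each snapshot holds the judge's verdicts at one point during execution.
    """
    rewards = []
    previous = 0.0
    for judgments in snapshots:
        current = satisfied_fraction(judgments)
        rewards.append(current - previous)
        previous = current
    return rewards


if __name__ == "__main__":
    snapshots = [
        [Judgment("R0", False), Judgment("R1", False)],
        [Judgment("R0", True), Judgment("R1", False)],
        [Judgment("R0", True), Judgment("R1", True)],
    ]
    print(stepwise_rewards(snapshots))
```

A difference-based reward like this credits the step at which a requirement first becomes satisfied. Other shaping choices, such as weighting requirements differently, would fit the same structure.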

Technical Principles

Agent-as-a-Judge is composed of eight cooperating modules that mimic how a human evaluator works. Key modules include:

- Graph Module: Constructs a project structure graph with files, modules, and dependencies, providing clear task context for evaluation.

- Locate Module: Accurately locates relevant files or folders based on the task requirements.

- Read Module: Supports multimodal data reading and understanding, capable of handling code, images, videos, and documents.

These modules work in synergy to perform comprehensive evaluations of an agent’s execution process.
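As a rough illustration of how a graph step and a locate step might fit together, the sketch below builds a tiny file-to-imports map for a Python project and ranks files by keyword overlap with a requirement. This is a simplified stand-in, not the repository's implementation: `build_graph`, `locate`, and the keyword-matching heuristic are hypothetical, and the real modules may work quite differently.

```python
import ast
import os
import tempfile


def build_graph(root: str) -> dict[str, set[str]]:
    """Map each .py file (path relative to root) to the modules it imports."""
    graph: dict[str, set[str]] = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if not name.endswith(".py"):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8") as fh:
                tree = ast.parse(fh.read())
            imports: set[str] = set()
            for node in ast.walk(tree):
                if isinstance(node, ast.Import):
                    imports.update(alias.name for alias in node.names)
                elif isinstance(node, ast.ImportFrom) and node.module:
                    imports.add(node.module)
            graph[os.path.relpath(path, root)] = imports
    return graph


def locate(graph: dict[str, set[str]], requirement: str) -> list[str]:
    """Rank files by how many requirement keywords occur in their path or imports."""
    # Ignore very short words such as "a" or "to", which match almost anything.
    words = {w.lower() for w in requirement.split() if len(w) > 2}
    scored = []
    for path, imports in graph.items():
        haystack = (path + " " + " ".join(sorted(imports))).lower()
        score = sum(1 for w in words if w in haystack)
        if score:
            scored.append((score, path))
    # Highest score first; ties broken alphabetically by path.
    return [path for _score, path in sorted(scored, key=lambda t: (-t[0], t[1]))]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        with open(os.path.join(root, "train.py"), "w", encoding="utf-8") as fh:
            fh.write("import torch\n")
        with open(os.path.join(root, "utils.py"), "w", encoding="utf-8") as fh:
            fh.write("import os\n")
        print(locate(build_graph(root), "train a torch model"))
```

Files are parsed with `ast` rather than imported, so the project's dependencies do not need to be installed for the graph to be built.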

Project Repository

GitHub: https://github.com/metauto-ai/agent-as-a-judge

Use Cases

- AI Development Evaluation: Agent-as-a-Judge has been applied to the DevAI benchmark for code generation tasks, which includes 55 real-world development scenarios and 365 hierarchical user requirements. It outperforms traditional evaluation methods in both accuracy and efficiency.

- Massive Efficiency Gains: Human experts took 86.5 hours to evaluate the 55 tasks, while Agent-as-a-Judge completed the same evaluation in only 118.43 minutes, at a total cost of just $30.58.

- Enhanced Feedback Mechanism: Unlike traditional evaluations that focus only on final outputs, Agent-as-a-Judge provides mid-process insights that show not only what succeeded or failed but also why, enabling more informed model improvements.
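The time figures quoted in this article can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated above. The cost saving is not recomputed, because the article does not give the cost of the human evaluation, and the per-task averages are derived here rather than reported figures.

```python
HUMAN_HOURS = 86.5        # human evaluation time for all tasks
JUDGE_MINUTES = 118.43    # Agent-as-a-Judge evaluation time for the same tasks
JUDGE_COST_USD = 30.58    # Agent-as-a-Judge total cost
NUM_TASKS = 55            # tasks in the DevAI benchmark

human_minutes = HUMAN_HOURS * 60                    # 5190 minutes
time_saving = 1 - JUDGE_MINUTES / human_minutes     # fraction of time saved
minutes_per_task = JUDGE_MINUTES / NUM_TASKS        # derived average
cost_per_task = JUDGE_COST_USD / NUM_TASKS          # derived average

print(f"time saved:       {time_saving:.2%}")       # 97.72%
print(f"minutes per task: {minutes_per_task:.2f}")  # 2.15
print(f"cost per task:    ${cost_per_task:.2f}")    # $0.56
```

The computed time saving matches the 97.72% figure cited under Key Features.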
