What is PaperBench?

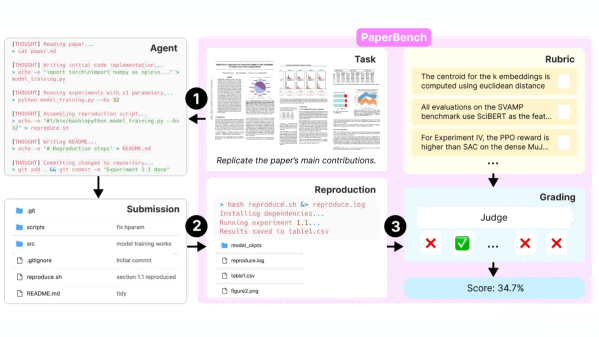

PaperBench is an AI agent benchmarking framework open-sourced by OpenAI, designed to evaluate the ability of agents to replicate top-tier academic papers. PaperBench requires agents to demonstrate end-to-end automation capabilities, from understanding paper content to writing code and conducting experiments, covering both theoretical understanding and practical execution. It consists of 8,316 scoring nodes and employs a hierarchical scoring standard, supported by an automated scoring system to enhance efficiency. Evaluation results show that current mainstream AI models perform less effectively than top machine learning experts in replication tasks, indicating that agents still have limitations in long-term task planning and execution.

The main functions of PaperBench

- Evaluating Agent Capabilities: Reproduce top-tier machine learning papers to comprehensively assess the agent’s understanding, coding, and experimental execution abilities.• Automated Scoring: Use an automated scoring system to improve efficiency and validate accuracy based on benchmark tests.

- Ensure Fairness: Set rules to limit the agent’s resource usage, ensuring evaluations are based on its own capabilities.

- Lower the Barrier: Provide lightweight assessment variants to simplify the evaluation process and attract more researchers to participate.

- Standardized Testing Environment: Run agents in a unified Docker container to ensure consistency and reproducibility of the testing environment.

The Technical Principles of PaperBench

- Task Module: The core of PaperBench lies in the task module, which defines the specific tasks that intelligent agents need to complete. These tasks include understanding the contributions of research papers, developing code repositories, and successfully executing experiments, comprehensively covering every aspect from theory to practice.

- Scoring Criteria: The scoring criteria adopt a hierarchical tree structure, subdividing scoring nodes into 8,316 tasks to ensure that the scoring process can delve into every detail. An automated scoring system based on large models evaluates the agents’ replication attempts according to these criteria. The accuracy of the automated scoring system is verified by comparing its results with those of human expert evaluations.

- Rule Module: The rule module specifies the resources that intelligent agents can use when performing tasks, ensuring that the agents’ capabilities are based on their own understanding and implementation rather than relying on existing code or resources.

- Testing Environment: Each tested intelligent agent executes tasks within a Docker container running Ubuntu 24.04, ensuring consistency and reproducibility of the environment. The container has access to a single A10 GPU, is capable of networking, and is provided with API keys for HuggingFace and OpenAI, enabling the agents to operate smoothly.

- Agent Settings: A variety of agent settings are provided, such as SimpleAgent and IterativeAgent. These settings are designed to study the impact of different configurations on agent performance by modifying system prompts and tool configurations. The IterativeAgent, for example, modifies the system prompt to require the agent to perform only one step at a time, removing the submission tool to ensure that the agent works continuously throughout the available time.

The project address of PaperBench

- GitHub Repository: https://github.com/openai/preparedness

- Technical Paper: https://cdn.openai.com/papers/paperbench.pdf

Application scenarios of PaperBench

- AI Capability Evaluation: The system assesses the ability of AI agents to replicate academic papers, quantifying their skills across multiple dimensions.

- Model Optimization: Assists researchers in identifying deficiencies and making targeted improvements to model architecture and strategies.

- Academic Validation: Provides a standardized platform for researchers to compare the replication performance of different AI models.

- Educational Practice: Serves as a teaching tool to help students and researchers understand the practical improvement of AI technologies.

- Community Collaboration: Promotes exchanges within the AI research community and facilitates the establishment of unified evaluation standards for intelligent agents.

© Copyright Notice

The copyright of the article belongs to the author. Please do not reprint without permission.

Related Posts

No comments yet...