GPT-5 Pauses, O3 and O4-mini Launch in Haste: Can OpenAI’s Move Pay Off?

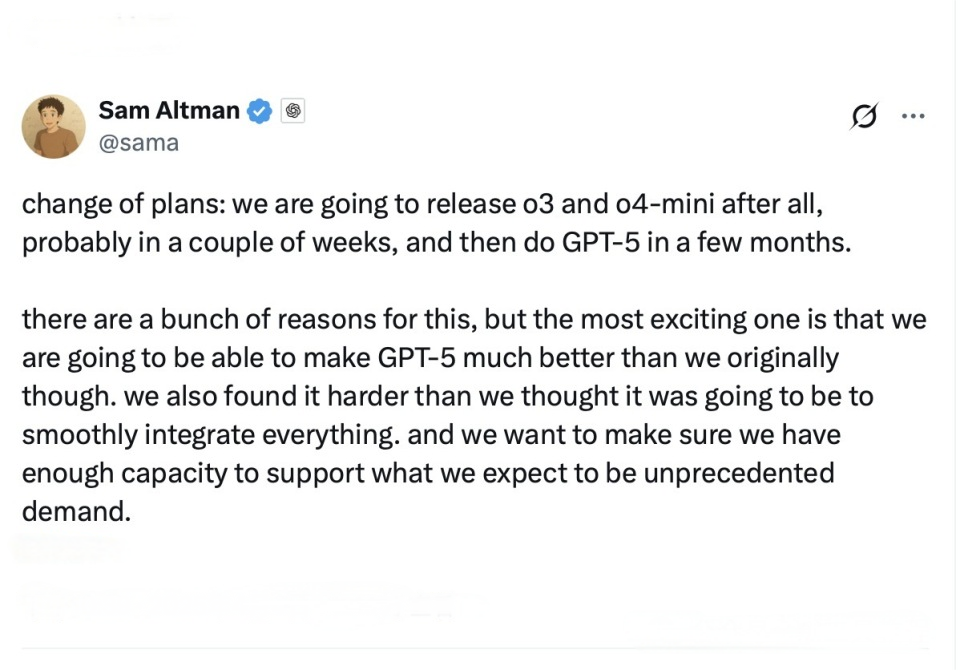

OpenAI has made another surprise move. CEO Sam Altman posted on X (formerly Twitter) that the original plan has changed: GPT-5 will not be rushed out for now. Instead, O3 and O4-mini will roll out first and go live within a few weeks, while GPT-5 is still several months away.

This move is indeed a bit unexpected. After all, just a few months ago, OpenAI laid out a roadmap confidently stating that it would merge the “O-series” models into GPT-5 to create a unified, all-in-one model system. Now it suddenly says, “Well, let’s just release O3 separately instead,” which is rather puzzling.

However, Altman himself explained that integrating everything into GPT-5 has proven much harder than expected, especially given the ever-growing demand for computing power. If GPT-5 launched too hastily, OpenAI might not be able to keep up with user demand. So the plan is to release a few interim products first, take things one step at a time, and buy time for GPT-5 to “bulk up” before its official debut.

What on earth are O3 and O4-mini?

O3 and O4-mini might sound like new smartphone models, but they are actually transitional models meant to bridge the gap until GPT-5 is “fully developed”.

Based on the limited information OpenAI has shared so far:

- O3: Originally slated to be folded into GPT-5, it has now gone solo and will be deployed first. Rumor has it that it performs well in scientific research and is especially well suited to data-intensive tasks.

- O4-mini: Reportedly a step beyond O3 in performance, it can be seen as a “quick iteration” that paves the way for GPT-5.

Beyond satisfying some users’ appetite for novelty, these two models let OpenAI keep probing performance bottlenecks and tool integration while testing the market’s response along the way.

What do netizens and industry insiders say?

The news quickly drew reactions from netizens and industry figures alike.

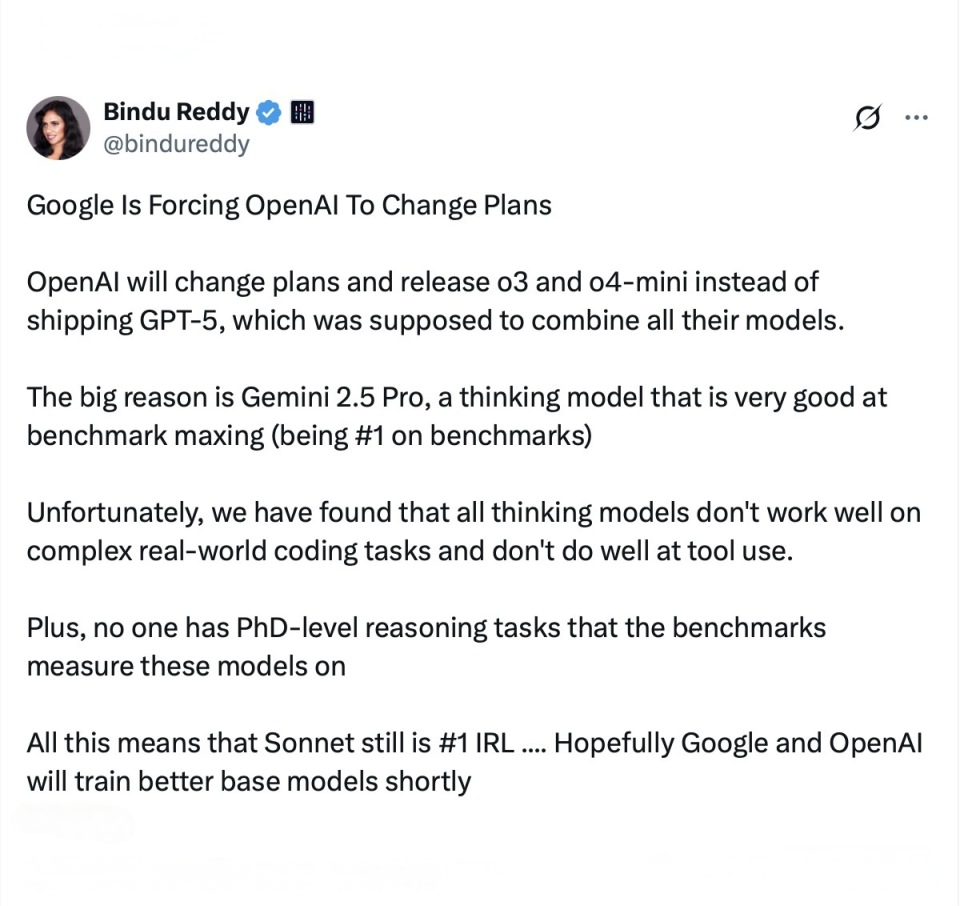

Bindu Reddy, CEO of Abacus.AI, bluntly argued that Google forced OpenAI’s hand. Google recently released the extremely capable Gemini 2.5 Pro, and OpenAI needed to signal a counterattack. She also complained that these reasoning models perform far worse in real-world scenarios than people imagined, especially in coding and tool use, where they often fail.

Well-known tech blogger Wes Roth speculated that OpenAI is buying time to “catch its breath”. In his view, O3 and O4-mini are merely a mid-game warm-up act.

Ren Hongyu from the O3-mini development team offered more specifics. He said the O3-mini-high variant is very useful for scientific research and has already made clear breakthroughs in automatic literature summarization and in reasoning that supports experimental work.

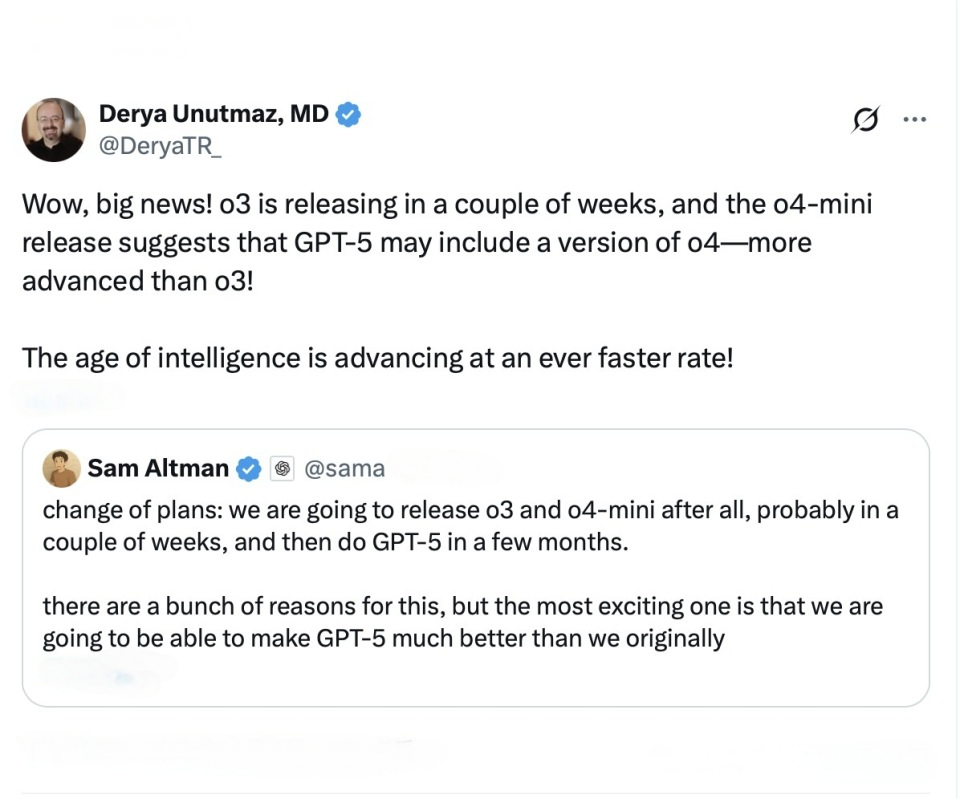

Derya Unutmaz of the Jackson Laboratory for Genomic Medicine went so far as to exclaim, “This is big news.” He believes O4-mini’s arrival suggests that GPT-5 may be stronger than we expect and could even incorporate the full O4 model.

How long do we still have to wait for GPT-5?

Judging from OpenAI’s pace, GPT-5 may not arrive as soon as hoped. The official line is “a few months,” but GPT-4 took at least half a year to go from the teaser stage to its actual launch. If that pattern holds, GPT-5 is likely to arrive in the second half of 2025, possibly not until the end of the year.

Still, based on the features announced so far, GPT-5 remains highly anticipated:

- Support for multimodal inputs such as voice, images, and text;

- Integration of the Canvas workspace and real-time web search;

- Deep reasoning capabilities geared specifically toward academic research;

- Three service tiers, ranging from regular users up to Pro subscribers.

It does sound like an “AI Swiss Army knife”.

Summary

Personally, I think it’s better to build solid foundations step by step than to rush out an all-in-one “super model”. O3 and O4-mini are essentially clearing the path for GPT-5. For average users like us, getting quicker access to new model features is more practical than waiting a year for some “ultimate version”.

Updates to large AI models these days are a lot like smartphone makers releasing new generations: you can never keep up with the next iteration, but you don’t need the latest and greatest right away either. Why not start using them now, enjoy the improved experience, and boost your efficiency? That sounds pretty sweet, doesn’t it?
