FantasyTalking – Alibaba and BUPT Jointly Launch a Framework for Generating Controllable Digital Humans from Static Portraits

What is FantasyTalking?

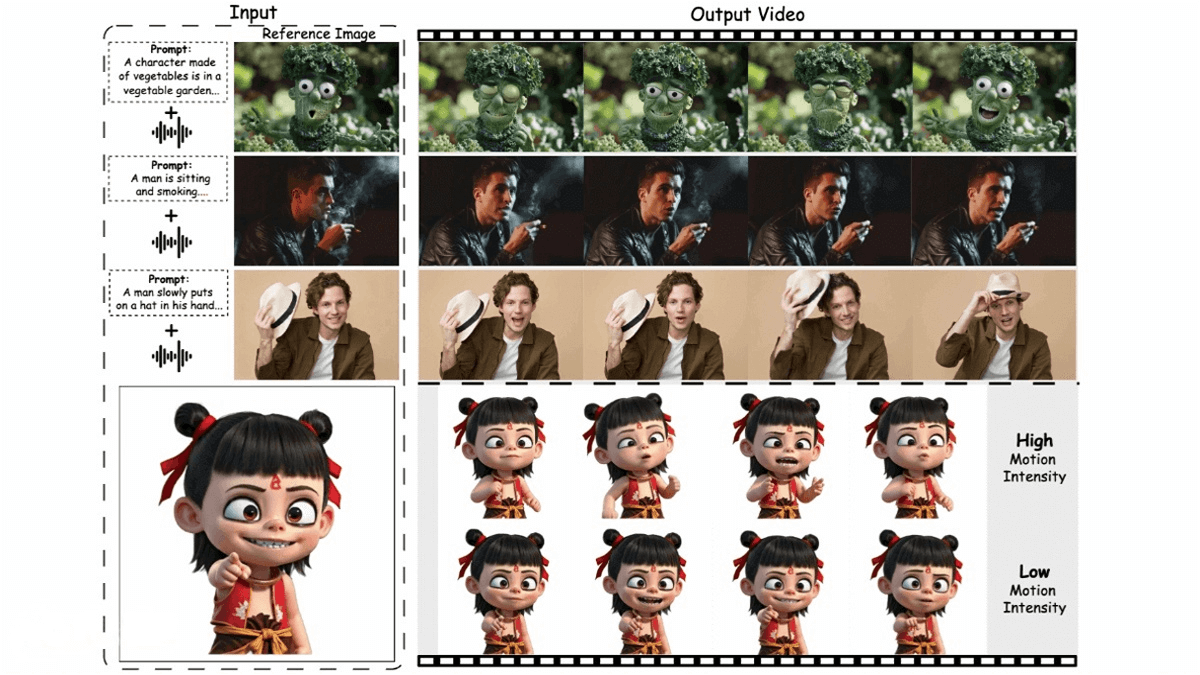

FantasyTalking is a novel framework proposed jointly by the Alibaba AMAP team and Beijing University of Posts and Telecommunications. It is designed to generate realistic, animatable virtual avatars from a single static portrait. Built on a pre-trained video diffusion transformer model, it adopts a two-stage audio-visual alignment strategy. In the first stage, a clip-level training scheme establishes coherent global motion. In the second stage, lip motion is refined at the frame level using a lip-tracking mask to ensure precise synchronization with the audio signal. The framework also incorporates a face-focused cross-attention module to maintain facial consistency and a motion intensity modulation module to control the intensity of facial expressions and body movements.

The main functions of FantasyTalking

- Lip Sync: Synchronizes the virtual character’s lip movements with the input speech so that mouth shapes match the spoken content, which makes the character more realistic and believable.

- Facial Motion Generation: Generates facial expressions such as blinking, frowning, and smiling based on the speech content and emotional cues, making the virtual character’s expressions richer and more vivid.

- Full-body Motion Generation: Generates full-body movements and postures suited to the scene and plot, such as walking, running, or jumping, so that the virtual character moves naturally and smoothly in the animation.

- Motion Intensity Control: A motion intensity modulation module lets users explicitly control the intensity of facial expressions and body movements, so portrait motion can be controlled beyond lip movement alone.

- Multiple Style Support: Supports a range of avatar styles, including realistic and cartoon, and generates high-quality talking videos in each.

- Multiple Pose Support: Generates realistic talking videos across different framings and orientations, including close-up, half-body, and full-body shots in frontal or side poses.

The Technical Principles of FantasyTalking

- Two-stage Audio-Visual Alignment Strategy

◦ Clip-level Training: In the first stage, a clip-level training scheme lets the model capture the weak correlation between the audio and the entire scene (the reference portrait, contextual objects, and background), establishing a global audio-visual dependency and achieving holistic feature fusion. This enables the model to learn audio-related non-verbal cues (such as eyebrow and shoulder movements) as well as lip dynamics that are strongly synchronized with the audio.

◦ Frame-level Training: In the second stage, the model refines frame-level visual features that are highly correlated with the audio, particularly lip movements. Using lip-tracking masks, the model ensures precise alignment between lip movements and the audio signal, improving the quality of the generated video.

- Identity Preservation: Conventional reference-network methods tend to restrict large, natural movements of the character and background in a video. FantasyTalking instead uses a face-focused cross-attention module that concentrates on modeling the facial region and, through cross-attention, decouples identity preservation from motion generation. This design is more lightweight, removes the restrictions on natural movement of the character and background, and preserves the character’s identity throughout the generated video sequence.

- Motion Intensity Modulation: FantasyTalking introduces a motion intensity modulation module that explicitly controls the intensity of facial expressions and body movements. This lets users manipulate portrait motion in a controlled way, beyond lip movements alone. Adjusting the motion intensity produces more natural and diverse animations.

- Built on a Pre-trained Video Diffusion Transformer: FantasyTalking is built on the Wan2.1 video diffusion transformer. Leveraging its spatiotemporal modeling capabilities, it generates high-fidelity, coherent talking-portrait videos and captures the relationships between the audio signal and lip movements, facial expressions, and body motion.
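The frame-level stage uses lip-tracking masks to tighten audio-lip alignment. As an illustration only, the sketch below shows one simple way a mask can steer training toward the mouth: a reconstruction loss whose per-pixel weight is raised inside the mask. The function name `lip_weighted_mse`, the `lip_weight` parameter, and the weighting scheme are assumptions for this example, not FantasyTalking's published formulation, and frames are flat lists of pixel values rather than video latents.

```python
from typing import List, Sequence


def lip_weighted_mse(
    pred: List[Sequence[float]],
    target: List[Sequence[float]],
    lip_mask: List[Sequence[int]],
    lip_weight: float = 4.0,
) -> float:
    """Weighted MSE that emphasises pixels inside a lip-tracking mask.

    Hypothetical illustration: each pixel gets weight 1 + lip_weight * mask,
    so pixels inside the tracked lip region (mask == 1) count more.
    Frames are flat lists of pixel values; all inputs must share a shape.
    """
    if not (len(pred) == len(target) == len(lip_mask)):
        raise ValueError("pred, target and lip_mask need the same frame count")
    weighted_error = 0.0
    total_weight = 0.0
    for p_frame, t_frame, m_frame in zip(pred, target, lip_mask):
        if not (len(p_frame) == len(t_frame) == len(m_frame)):
            raise ValueError("frames must have matching pixel counts")
        for p, t, m in zip(p_frame, t_frame, m_frame):
            w = 1.0 + lip_weight * m  # larger weight inside the lip mask
            weighted_error += w * (p - t) ** 2
            total_weight += w
    return weighted_error / total_weight if total_weight else 0.0


# One frame, two pixels; only the first pixel lies in the lip mask.
print(lip_weighted_mse([[1.0, 0.0]], [[0.0, 0.0]], [[1, 0]], lip_weight=3.0))  # 0.8
```

With `lip_weight=0` the function reduces to an ordinary mean squared error; raising it makes errors around the mouth dominate the objective, which is the intuition behind a lip-focused refinement stage.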
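The face-focused cross-attention idea can be illustrated with a toy, single-head attention step in which video tokens act as queries and only face-region tokens supply keys and values. This is a minimal sketch under simplifying assumptions: there are no learned query, key, or value projections, keys and values are the face tokens themselves, and the result is added back through a residual connection. The names `face_cross_attention` and `face_tokens` are hypothetical; the real module works on learned features inside the diffusion transformer and will differ in detail.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def face_cross_attention(video_tokens: List[Vector], face_tokens: List[Vector]) -> List[Vector]:
    """Each video token attends only to face-region tokens (hypothetical sketch).

    Scaled dot-product attention with keys = values = face_tokens and no
    learned projections, followed by a residual add to the query.
    """
    if not face_tokens:
        raise ValueError("need at least one face token")
    dim = len(face_tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in video_tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in face_tokens]
        weights = softmax(scores)
        # Weighted sum of face tokens, one output dimension at a time.
        attended = [sum(w * value[i] for w, value in zip(weights, face_tokens)) for i in range(dim)]
        outputs.append([q + a for q, a in zip(query, attended)])
    return outputs


# With a single face token every query puts weight 1.0 on it.
print(face_cross_attention([[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5]]))  # [[1.5, 0.5], [0.5, 1.5]]
```

Restricting keys and values to face tokens mirrors the description above: identity cues enter only from the facial region, rather than from a full reference frame that would also pin down the background and body.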
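The motion intensity modulation module is described here only at a high level. One hypothetical way to realize such a control is sketched below: during training, derive a scalar intensity from tracked keypoints as the mean displacement between consecutive frames; at inference, let the user choose the value. In both cases the scalar is turned into a sinusoidal embedding that could condition the generator, in the style of diffusion timestep embeddings. The keypoint-displacement measure, the function names `motion_intensity` and `intensity_embedding`, and the embedding choice are all assumptions for illustration, not the paper's exact design.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def motion_intensity(landmarks: List[List[Point]]) -> float:
    """Mean Euclidean displacement of tracked points between consecutive frames.

    Hypothetical measure: landmarks[f][j] is point j in frame f, with points
    listed in the same order in every frame.
    """
    total, count = 0.0, 0
    for prev, curr in zip(landmarks, landmarks[1:]):
        for (x0, y0), (x1, y1) in zip(prev, curr):
            total += math.hypot(x1 - x0, y1 - y0)
            count += 1
    return total / count if count else 0.0


def intensity_embedding(value: float, dim: int = 8) -> List[float]:
    """Sinusoidal embedding of a scalar intensity (sin half, then cos half)."""
    if dim % 2:
        raise ValueError("dim must be even")
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(value * f) for f in freqs] + [math.cos(value * f) for f in freqs]


# Two points over two frames: one moves by (3, 4), the other stays still.
frames = [[(0.0, 0.0), (1.0, 1.0)], [(3.0, 4.0), (1.0, 1.0)]]
level = motion_intensity(frames)  # (5.0 + 0.0) / 2
condition = intensity_embedding(level, dim=8)
print(level, len(condition))  # 2.5 8
```

Separate facial and body intensities could be obtained the same way by running `motion_intensity` on face landmarks and on body keypoints independently, which would match the source's distinction between expression and body-movement control.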

Project links for FantasyTalking

- Official project website: https://fantasy-amap.github.io/fantasy-talking/

- GitHub repository: https://github.com/Fantasy-AMAP/fantasy-talking

- arXiv technical paper: https://arxiv.org/pdf/2504.04842

Application scenarios of FantasyTalking

- Game Development: FantasyTalking can generate dialogue and combat animations for game characters. Driven by voice content, it produces accurate lip synchronization, rich facial expressions, and natural full-body movements, making game characters more vivid and realistic and improving both the game’s visuals and player immersion.

- Film and Television Production: FantasyTalking can generate performance and visual-effects animations for virtual characters. Characters with complex expressions and movements can be produced quickly, reducing the labor and time costs of traditional animation production and leaving more room for creative work.

- Virtual Reality and Augmented Reality: In virtual reality (VR) and augmented reality (AR) applications, FantasyTalking can generate interactive and guide animations for virtual characters.

- Virtual Hosts: FantasyTalking can generate videos of virtual hosts in a variety of avatar styles, for scenarios such as news reporting, live-stream sales, and online education.

- Intelligent Education: FantasyTalking can generate videos of virtual teachers or virtual teaching assistants.
