Neural4D 2o – DreamTech Launches a 3D Model Supporting Multimodal Interaction

What is Neural4D 2o?

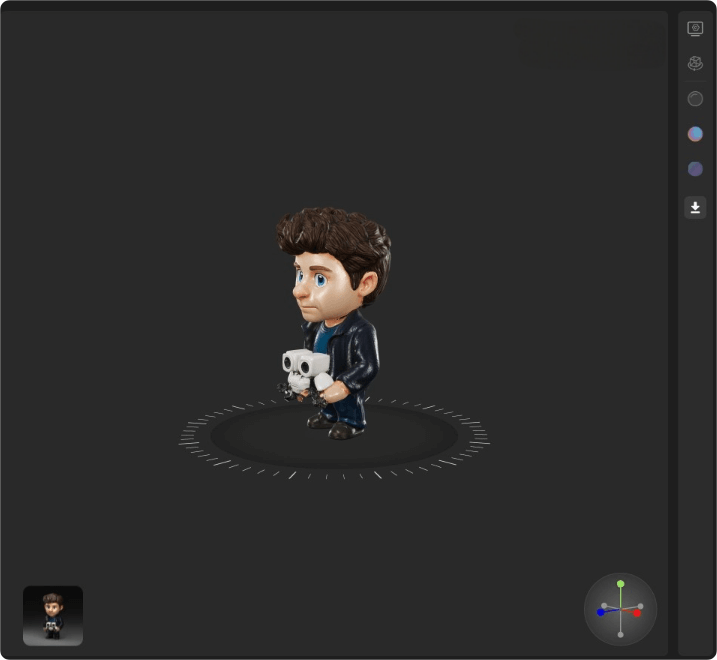

Neural4D 2o is a 3D large model launched by DreamTech and presented as the world’s first to support multimodal interaction. It is trained jointly on text, images, 3D data, and motion data, which enables context-consistent 3D generation, high-precision local editing, character ID preservation, costume swapping, and style transfer. Users create and edit high-quality 3D content through natural language instructions. Neural4D 2o natively supports MCP (Model Context Protocol), and DreamTech has introduced the MCP-based Neural4D Agent (alpha version) to make 3D content creation smarter and more convenient. The stated goal is to lower the barrier to entry and improve efficiency for 3D designers and creators, so that anyone can take on 3D design work.

The main functions of Neural4D 2o

- Multimodal Interaction: Supports input of text, images, 3D data, and motion data, enabling interactive editing based on natural language instructions.

- Context Consistency: Maintains the coherence of generated content while preserving the initial style and characteristics.

- High-Precision Local Editing: Allows for precise adjustments to specific details of a 3D model without affecting other parts.

- Character ID Preservation: Ensures the core characteristics and identity of the character remain consistent during editing.

- Clothing Change and Style Transfer: Supports changing costumes for characters or transferring style features.

- MCP Support: Natively supports MCP, on which the Neural4D Agent (alpha version) is built, making interaction with the model more convenient.
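To make the MCP item above more concrete, here is a minimal sketch of what a tool call could look like on the wire. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `edit_3d_model` and its argument fields are hypothetical placeholders; the source does not document which tools Neural4D 2o actually exposes.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request that invokes an MCP tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",  # MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(
    1,
    "edit_3d_model",
    {
        "model_id": "character-001",
        "instruction": "Change the jacket to red leather; keep the face unchanged",
    },
)

payload = json.dumps(request, indent=2)
print(payload)
```

An agent would send a payload like this to an MCP server and receive the result in the matching JSON-RPC response, which is what lets a natural language instruction be routed to a concrete editing operation.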

The technical principles of Neural4D 2o

- Multimodal Joint Training: The model is trained jointly on multiple modalities, including text, images, 3D models, and motion. This lets it understand and process information from different modalities at the same time and build a unified framework for understanding context.

- Transformer Encoder: Encodes the input multimodal information, extracts key features, and constructs contextual relationships. It processes data from multiple modalities such as text and images, fusing the information together to provide a foundation for subsequent 3D model generation and editing.

- 3D DiT Decoder: Decodes the encoded information into concrete 3D models. Guided by user instructions and contextual information, it generates high-precision 3D models and supports local editing and more complex operations such as costume changes and style transfer.

- Native MCP Support and Neural4D Agent: Neural4D 2o natively supports MCP, and the Neural4D Agent (alpha version) is built on it, giving users a smarter and more convenient way to create 3D content.
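The encoder item above describes fusing tokens from several modalities into one context. The following toy sketch illustrates that general idea with plain scaled dot-product self-attention over a concatenated token sequence. It is not DreamTech's implementation: the embeddings are hand-made, the projections are identity matrices, and all function names are assumptions made for illustration.

```python
import math
from typing import Dict, List

Vector = List[float]


def build_context(inputs: Dict[str, List[Vector]]) -> List[Vector]:
    """Concatenate per-modality token embeddings into one sequence.

    Assumes every modality was already embedded to the same width.
    """
    context: List[Vector] = []
    for modality in sorted(inputs):  # fixed order keeps the sketch deterministic
        context.extend(inputs[modality])
    return context


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(context: List[Vector]) -> List[List[float]]:
    """Scaled dot-product attention weights, using identity projections."""
    dim = len(context[0])
    weights = []
    for query in context:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in context
        ]
        weights.append(softmax(scores))
    return weights


def fuse(context: List[Vector]) -> List[Vector]:
    """Replace each token with an attention-weighted mix of all tokens."""
    dim = len(context[0])
    return [
        [sum(w * token[d] for w, token in zip(row, context)) for d in range(dim)]
        for row in attention_weights(context)
    ]


# Toy embeddings: one or two tokens per modality, width 4.
toy_inputs = {
    "text": [[1.0, 0.0, 0.0, 0.0], [0.5, 0.5, 0.0, 0.0]],
    "image": [[0.0, 1.0, 0.0, 0.0]],
    "mesh": [[0.0, 0.0, 1.0, 0.0]],
    "motion": [[0.0, 0.0, 0.0, 1.0]],
}

context = build_context(toy_inputs)
fused = fuse(context)
```

Because every token attends to every other token, each fused vector carries information from all four modalities. A real encoder would add learned projections, multiple heads, and stacked layers, and a decoder such as the 3D DiT would then condition on the fused sequence.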

Project link for Neural4D 2o

- Project official website: https://www.neural4d.com/n4d-2o

Application scenarios of Neural4D 2o

- 3D Content Creation: Quickly generate and edit 3D models, supporting personalized customization to enhance creation efficiency.

- Game Development: Generate game characters, props, and scenes, supporting dynamic interaction and style transfer to enhance the gaming experience.

- Film and Animation: Quickly generate character and scene prototypes, supporting dynamic character and special effects generation to improve production efficiency.

- Education and Training: Create virtual teaching models and simulation training environments to enhance learning and training outcomes.

- E-commerce and Advertising: Generate 3D product models, providing virtual try-on and experience features to improve shopping experiences and conversion rates.
